I am also going to go into more detail on how the API loads data into essbase and what to be aware of.

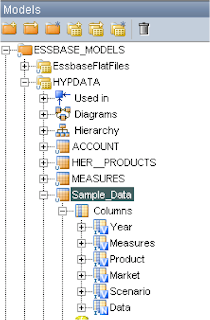

To start with, you need your source. This time I am going to use a pre-populated database table; the process is nearly identical if you use a flat file as the source, you just need a different source DataStore.

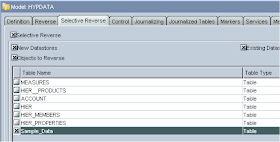

As always the next step is to create a reversed DataStore of the db table.

Once you have selected the table and then clicked reverse it should create the new DataStore.

The target is going to be the essbase database Sample.Basic; the DataStore was created in a previous blog.

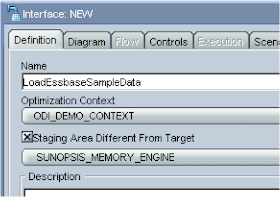

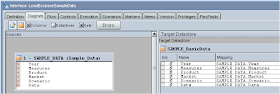

The interface can now be created.

In the diagram tab, drag the SQL table DataStore to the source and the Essbase data DataStore to the target. As my source column names are the same as the target, everything is automapped.

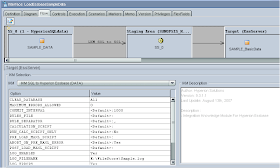

In the flow section make sure you have the IKM set to “IKM SQL to Hyperion Essbase (DATA)”.

Understanding some of the options is important when using this IKM.

CLEAR_DATABASE :- For block storage cubes this will execute one of the following calculation script commands, depending on the value selected: CLEARBLOCK ALL, CLEARBLOCK UPPER or CLEARBLOCK NONINPUT. For ASO cubes you can only select ALL and it will clear all data.

MAXIMUM_ERRORS_ALLOWED :- If this is set to 0 it will ignore any errors, such as a member not found, and keep trying to load.

COMMIT_INTERVAL :- This is the number of records that are sent to essbase in a chunk. Setting it to a large value can cause performance issues if any records get rejected; I will go into this in more detail shortly.

RULES_FILE :- You don’t have to use a rules file with this IKM, but an important thing to note is that without one the data will always be sent as “overwrite”; you will need a rules file if you want to add to existing data.

Executing the interface will then load the data into essbase; any data which couldn’t be loaded will be logged in the error log file.

This is all fine, but recently there was a post about the performance of using ODI to load data into essbase. As I am never satisfied until I know how it all works, I went looking behind the scenes of the data loading.

When an interface is run it calls a specific knowledge module depending on the integration. The Hyperion KMs then call Java classes to do all the work; these classes are contained in the jar files in the oracledi\drivers directory.

The classes also rely on the essbase Java API. When a dataload is run a chunk of the source data is grabbed; the amount of data in each chunk depends on the value you have set in the COMMIT_INTERVAL option, the default being 1000 records.

The data in the chunk is then streamed in using the API until all of its records have been sent, and the data is then committed to essbase. This process is repeated until all the chunks of source data have been processed.
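As a rough illustration of the chunking described above, here is a minimal Python sketch. It is not the KM's actual Java code; the function name `chunks` and the `commit_interval` parameter are my own stand-ins for the COMMIT_INTERVAL option.

```python
from itertools import islice


def chunks(records, commit_interval=1000):
    """Yield successive lists of at most commit_interval records."""
    it = iter(records)
    while True:
        chunk = list(islice(it, commit_interval))
        if not chunk:
            return
        yield chunk


# 2500 source records with the default interval give three chunks.
sizes = [len(c) for c in chunks(range(2500), commit_interval=1000)]
print(sizes)  # [1000, 1000, 500]
```

Each of those chunks would be streamed and then committed before the next one is grabbed.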

The methods used in the Java API are :-

beginDataLoad - which starts the update to the essbase database.

If you look in your essbase application log file you can see when this command has been called.

Received Command [StreamDataload] from user [hypadmin]

sendString - which sends a string of data to the essbase database.

endDataload - commits all the data that has been sent from each sendString method.

This process works fine unless there are some invalid records, e.g. records with members that don’t exist in the dimension they are being loaded against.

The data will not be rejected until the endDataload method is called. So if you have the commit interval set to 1000, all 1000 records will still be sent to essbase and the whole chunk will be rejected if any record in it is invalid.
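To make the "nothing is rejected until the commit" behaviour concrete, here is a small Python sketch. `FakeCube` is a hypothetical stand-in I made up for illustration; it is not the essbase Java API and only mimics the begin / send / end sequence as described in this post.

```python
class FakeCube:
    """Hypothetical stand-in for an essbase cube; not the real Java API."""

    def __init__(self, valid_members):
        self.valid_members = set(valid_members)
        self.data = {}       # committed cells: member -> value
        self._pending = []   # records sent since begin_dataload

    def begin_dataload(self):
        self._pending = []

    def send_string(self, record):
        # Nothing is validated here; the record is only buffered.
        self._pending.append(record)

    def end_dataload(self):
        # Validation happens at commit time, so one bad member
        # causes the whole pending chunk to be thrown away.
        pending, self._pending = self._pending, []
        for member, _value in pending:
            if member not in self.valid_members:
                raise ValueError(f"Unknown Member [{member}] in Data Load")
        self.data.update(pending)


cube = FakeCube(["Florida", "Texas"])
cube.begin_dataload()
for record in [("Florida", 100), ("Florida1", 200), ("Texas", 300)]:
    cube.send_string(record)  # all three sends succeed

try:
    cube.end_dataload()
    outcome = "committed"
except ValueError as err:
    outcome = f"rejected: {err}"

print(outcome)    # rejected: Unknown Member [Florida1] in Data Load
print(cube.data)  # {} - the valid records in the chunk were lost too
```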

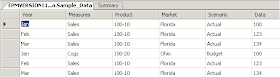

I updated my source data to send a record of “Florida1” instead of “Florida”; this is what you will see in the essbase app log.

[Sun Jan 11 13:17:18 2025]Local/SAMPLE/Basic/hypadmin/Error(1003014)

Unknown Member [Florida1] in Data Load, [999] Records Completed

[Sun Jan 11 13:17:18 2025]Local/SAMPLE/Basic/hypadmin/Error(1003050)

Data Load Transaction Aborted With Error [1003050]

To compensate for the error in the data, the code then tries to load each record one by one from the start of the chunk until it has processed all the records in the chunk.

So if you have the commit interval set to 1000, it will try to load each of those 1000 records one by one until it has processed them all, and then it goes back to stream loading the next chunk. If it hits another error it will go into single record loading again.

If you look at the log the interface produces you will see when it enters single record loading.
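The fallback logic can be sketched in a few lines of Python. This is a simulation under my own assumptions, not the KM source: `load_with_fallback` and `is_valid` are hypothetical names, and I count one commit for each streamed chunk attempt and one for each record that is resent individually.

```python
def load_with_fallback(records, is_valid, commit_interval=1000):
    """Return (loaded, rejected, commit_calls) for a simulated load."""
    loaded, rejected, commit_calls = [], [], 0
    for start in range(0, len(records), commit_interval):
        chunk = records[start:start + commit_interval]
        commit_calls += 1  # streamed attempt for the whole chunk
        if all(is_valid(r) for r in chunk):
            loaded.extend(chunk)
            continue
        # Chunk rejected: resend every record in it, one commit per record.
        for record in chunk:
            commit_calls += 1
            (loaded if is_valid(record) else rejected).append(record)
    return loaded, rejected, commit_calls


records = ["Florida"] * 2500
records[1200] = "Florida1"  # one bad member in the second chunk

loaded, rejected, commit_calls = load_with_fallback(
    records, is_valid=lambda r: r == "Florida", commit_interval=1000)
print(len(loaded), rejected, commit_calls)  # 2499 ['Florida1'] 1003
```

One bad record turns three commits into 1003, because the whole second chunk is replayed record by record.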

2025-11-01 14:44:51,375 DEBUG [DwgCmdExecutionThread]: Error occured in sending record chunk...Cannot end dataload. Analytic Server Error(1003014): Unknown Member [Florida1] in Data Load, [13] Records Completed

2025-11-01 14:44:51,375 DEBUG [DwgCmdExecutionThread]: Sending data record by record to essbase

This adds an extremely large overhead to the processing time and is a really inefficient way of processing data. Hopefully one day there will be some updates to the Java code that take some of these issues into account. Until then it is something to watch for, and you will have to play around with the commit interval to find the fastest processing time, or try to make sure your data is clean.
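To get a feel for the trade-off before experimenting on a real load, a back-of-the-envelope count like the one below may help. It is only a model based on the behaviour described in this post (one commit per streamed chunk, plus one commit per record for every chunk that contains a bad record); the function name and parameters are hypothetical, and it says nothing about actual timings.

```python
import math


def commit_calls(total_records, commit_interval, bad_positions):
    """Estimate commits: one per chunk plus one per record in each bad chunk."""
    chunk_count = math.ceil(total_records / commit_interval)
    bad_chunks = {pos // commit_interval for pos in bad_positions}
    single_commits = sum(
        min(commit_interval, total_records - c * commit_interval)
        for c in bad_chunks)
    return chunk_count + single_commits


# 10,000 records with a single bad record at position 1200.
estimates = {interval: commit_calls(10000, interval, bad_positions=[1200])
             for interval in (100, 1000, 10000)}
print(estimates)  # {100: 200, 1000: 1010, 10000: 10001}
```

With clean data the largest interval needs the fewest commits, but a single bad record reverses that, which is why the best value depends on how dirty your source is.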

Hopefully that gives you an insight into what is happening when loading data; you should also be able to create your own essbase dataload interfaces.