Today I will be looking at scheduling your integrations using the Operator, and also at how to execute them over the web using the Java invocation API.

OK, you have your integration up and running, but you want to be able to run it on a scheduled basis. This is how it is done.

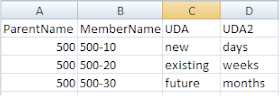

The first thing that needs to be done is to create a scenario; the scenario compiles all the elements of your integration ready to be executed. A scenario can be created from an interface, package, procedure or variable. For this example I am going to use a previously created package.

There are a couple of ways of generating a scenario. You can right-click your component and choose “Generate Scenario”

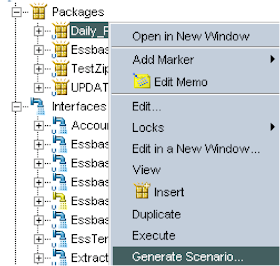

Or open the component, go to the Scenarios tab and generate it from there

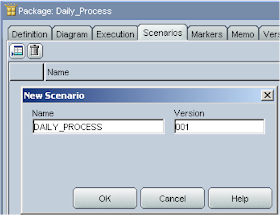

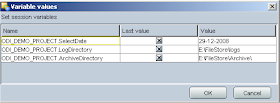

If it is a package you are scheduling and it contains variables, you will be presented with the option to set their values when the scenario is executed.

If you execute the scenario, the session variable window will open, where you can enter values for the selected variables

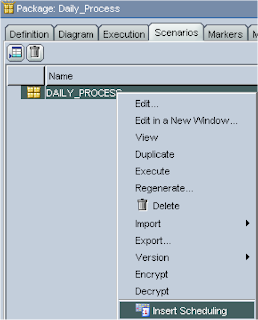

If you change the component (a package, for example) in any way, you will need to regenerate the scenario so that it is compiled with the changes. This can easily be done from the right-click menu.

Right clicking the scenario also gives the option to schedule.

This will open the scheduling window, which has a multitude of options to suit your requirements

There is also a Variables tab that lets you set the initial values of the variables. Once these parameters have been set and applied, they will be sent to the Operator.

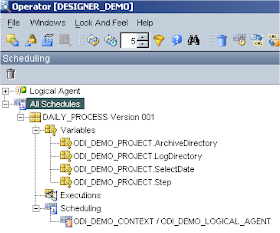

Opening the Operator and selecting the Scheduling tab will display information about all the scheduled scenarios.

You can also schedule scenarios directly from the Scenarios section in the Operator. That’s all there is to it; it couldn’t be easier.

If you want to execute a scenario from a Java application or over the web, one way of doing this is to use the provided Java invocation package.

Within the ODI installation directory (\OraHome_1\oracledi\tools\web_services) there is a file named odi-public-ws.aar. The aar extension stands for Apache Axis Archive; Axis is Apache’s Java platform for creating web services applications, which I am not going to go into today.

What I am interested in is inside this file: in the lib directory is the Java archive odi-sdk-invocation.jar.

Once the jar has been extracted from the aar file, you are ready to use it with your Java IDE of choice.
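If you would rather not use an archive tool for the extraction, it can also be done with a few lines of Java. This is only a minimal sketch: it assumes the aar file is a standard zip-format archive and that the jar sits at lib/odi-sdk-invocation.jar as described above. The class name `AarExtractor` and the default paths in `main` are my own placeholders.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

// Hypothetical helper, not part of ODI: pulls one file out of a zip-format archive.
class AarExtractor {

    /** Copies a single entry from the archive into targetDir and returns the new file's path. */
    static Path extractEntry(Path archive, String entryName, Path targetDir) throws IOException {
        try (ZipFile zip = new ZipFile(archive.toFile())) {
            ZipEntry entry = zip.getEntry(entryName);
            if (entry == null) {
                throw new IOException(entryName + " not found in " + archive);
            }
            Files.createDirectories(targetDir);
            // Keep only the file name, so lib/foo.jar is written as targetDir/foo.jar
            String fileName = entryName.substring(entryName.lastIndexOf('/') + 1);
            Path target = targetDir.resolve(fileName);
            try (InputStream in = zip.getInputStream(entry)) {
                Files.copy(in, target, StandardCopyOption.REPLACE_EXISTING);
            }
            return target;
        }
    }

    public static void main(String[] args) throws IOException {
        // Placeholder defaults; pass the real aar path and output directory as arguments.
        Path aar = Paths.get(args.length > 0 ? args[0] : "odi-public-ws.aar");
        Path out = Paths.get(args.length > 1 ? args[1] : "extracted");
        Path jar = extractEntry(aar, "lib/odi-sdk-invocation.jar", out);
        System.out.println("Extracted " + jar);
    }
}
```

The extracted jar can then be added to the classpath of your project in the usual way.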

The API documentation is available at \OraHome_1\oracledi\doc\sdk\invocation\index.html

One thing to be aware of is that you need to have an agent created to use the API in the example I am going to show. I went through the agent setup in an earlier blog.

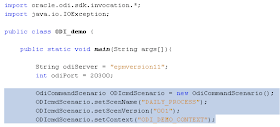

First create a new instance of the OdiCommandScenario object and set the scenario and context information.

If it is a package and has variables associated with it you can also set them.

You can also set whether the scenario is executed in synchronous mode (waits for the scenario to finish executing) or asynchronous mode (starts the scenario and returns immediately).
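To make the settings described above a little more concrete, here is a rough model of the information that goes into the scenario command. Please note that `ScenarioRequest` is a local stand-in I have made up so the snippet runs on its own; it is not the OdiCommandScenario class, and the real setter names should be taken from the API documentation. The scenario name, context code and variable name are also hypothetical.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

// Stand-in only: models the settings described above, NOT the class in odi-sdk-invocation.jar.
class ScenarioRequest {
    private final String scenarioName;
    private final String context;
    private final Map<String, String> variables = new LinkedHashMap<>();
    // Default chosen for this sketch; the real API's default is not assumed here.
    private boolean synchronous = true;

    ScenarioRequest(String scenarioName, String context) {
        this.scenarioName = scenarioName;
        this.context = context;
    }

    /** Adds a start-up value for a package variable. */
    ScenarioRequest variable(String name, String value) {
        variables.put(name, value);
        return this;
    }

    /** true = wait for the scenario to complete, false = start it and return. */
    ScenarioRequest synchronous(boolean sync) {
        this.synchronous = sync;
        return this;
    }

    String getScenarioName() { return scenarioName; }
    String getContext() { return context; }
    boolean isSynchronous() { return synchronous; }
    Map<String, String> getVariables() { return Collections.unmodifiableMap(variables); }

    String describe() {
        return scenarioName + " in context " + context
                + (synchronous ? ", synchronous" : ", asynchronous")
                + ", variables " + variables;
    }

    public static void main(String[] args) {
        // All names below are made up for illustration.
        ScenarioRequest request = new ScenarioRequest("LOAD_PLANNING", "GLOBAL")
                .variable("MY_PROJECT.LOAD_YEAR", "2009")
                .synchronous(false);
        System.out.println(request.describe());
    }
}
```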

Next is to use the OdiRepositoryConnection object.

There are a number of JDBC connection methods but these are not needed if using the scheduler agent.

With all the parameters set, the OdiInvocation object can be initialized with the host name and port of the agent.

If the Java code is going to be executed on the same machine as the ODI agent, and the agent is using port 20910, then it can be initialized without any constructor arguments.

The invokeCommand method can then be used, passing in the connection and scenario objects, to execute the scenario.

Executing the scenario will return an OdiInvocationResult object. Its isOk method returns true or false depending on whether the request was successful.

It also has a method, getSessionNumber, which returns the session ID of the executed scenario.
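The overall flow (pick the agent address, invoke, check the result, read the session number) can be sketched as below. Again this is only an illustration using stand-in types I have invented so that it runs without the ODI jar or an agent: `Result` and `Invoker` are not ODI classes, the `String` return type of `getSessionNumber` is an assumption, and the scenario and context names are hypothetical. The only details taken from the steps above are the two result methods and the default of the local machine on port 20910.

```java
// Stand-in types only; a real implementation would wrap the ODI invocation classes.
class InvocationFlowSketch {

    /** Mirrors the two result methods described above. Return type of getSessionNumber is assumed. */
    interface Result {
        boolean isOk();
        String getSessionNumber();
    }

    /** Represents the call to the agent. */
    interface Invoker {
        Result invoke(String scenarioName, String context) throws Exception;
    }

    static final String DEFAULT_HOST = "localhost";
    static final int DEFAULT_PORT = 20910;

    /** Falls back to the local agent on port 20910 when host or port is not supplied. */
    static String agentAddress(String host, Integer port) {
        String h = (host == null || host.isEmpty()) ? DEFAULT_HOST : host;
        int p = (port == null) ? DEFAULT_PORT : port;
        return h + ":" + p;
    }

    /** Invokes the scenario and turns the outcome into a message for the user. */
    static String runAndReport(Invoker invoker, String scenarioName, String context) {
        try {
            Result result = invoker.invoke(scenarioName, context);
            if (result.isOk()) {
                return "Scenario " + scenarioName + " accepted, session " + result.getSessionNumber();
            }
            return "Scenario " + scenarioName + " was not accepted";
        } catch (Exception e) {
            return "Scenario " + scenarioName + " could not be invoked: " + e.getMessage();
        }
    }

    /** Convenience factory for a canned result, used here in place of a live agent. */
    static Result fixedResult(final boolean ok, final String sessionNumber) {
        return new Result() {
            public boolean isOk() { return ok; }
            public String getSessionNumber() { return sessionNumber; }
        };
    }

    public static void main(String[] args) {
        // A fake invoker stands in for the agent; names and session number are made up.
        Invoker fake = (name, ctx) -> fixedResult(true, "1001");
        System.out.println("Agent: " + agentAddress(null, null));
        System.out.println(runAndReport(fake, "LOAD_PLANNING", "GLOBAL"));
    }
}
```

Keeping the agent call behind a small interface like this also makes it easy to try out the surrounding code before an agent is available.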

So there we have a really simple example of executing a scenario using the Java API. If we want to run this from the web, it can easily be transferred to a JSP script.

Yes, it’s very basic: it only runs a specific scenario and then outputs the session ID if it was successful. The script can be extended in many ways, though, such as using dropdowns to select the scenario.

With a bit of work, here is an example of what is possible by combining JSP, Java classes and Ajax: have a look here.
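As an idea of how the dropdown could be built, the small helper below renders an HTML select element from a list of scenario names, which a JSP page could then write into its form. It is just a sketch under my own assumptions: the class name `ScenarioDropdown`, the field name and the scenario names are all hypothetical, and where the list of names comes from is left open.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper for a JSP page; not part of ODI.
class ScenarioDropdown {

    /** Escapes the characters that are unsafe inside HTML text and attribute values. */
    static String escapeHtml(String text) {
        StringBuilder sb = new StringBuilder();
        for (char c : text.toCharArray()) {
            switch (c) {
                case '&': sb.append("&amp;"); break;
                case '<': sb.append("&lt;"); break;
                case '>': sb.append("&gt;"); break;
                case '"': sb.append("&quot;"); break;
                case '\'': sb.append("&#39;"); break;
                default: sb.append(c);
            }
        }
        return sb.toString();
    }

    /** Builds a select element with one option per scenario name. */
    static String render(String fieldName, List<String> scenarioNames) {
        StringBuilder html = new StringBuilder();
        html.append("<select name=\"").append(escapeHtml(fieldName)).append("\">\n");
        for (String name : scenarioNames) {
            String safe = escapeHtml(name);
            html.append("  <option value=\"").append(safe).append("\">")
                .append(safe).append("</option>\n");
        }
        html.append("</select>");
        return html.toString();
    }

    public static void main(String[] args) {
        // Scenario names are made up for illustration.
        System.out.println(render("scenario", Arrays.asList("LOAD_ACCOUNTS", "LOAD_ENTITIES")));
    }
}
```

The value submitted by the form would then be passed to the scenario command in place of the hard-coded scenario name.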

Well, that completes the ODI Series. I believe I have covered the most important aspects of ODI and of integrating it with Planning and Essbase. I know the series has proved quite popular, and I am glad it has helped people starting out with ODI.

OK, what next ….