There are many reasons you might need to invoke a web service to satisfy the requirements of your integration. Keeping with tradition in the Hyperion arena, I am going to use this opportunity to call an EPMA web service. The EPMA web services are not well documented, but they are out there and can be used. If you are using version 9.3.1, there is a document available here.

I am not sure where the documentation is for version 11, but the web services still exist and are fully functional.

If you are going to consume the EPMA web services with ODI, I recommend running ODI release 10.1.3.5.0_02 or later, as it seems to fix a bug with resolving WSDLs correctly. I hit the bug in the past and couldn't call some web services.

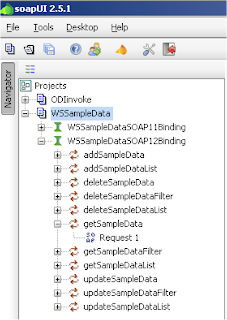

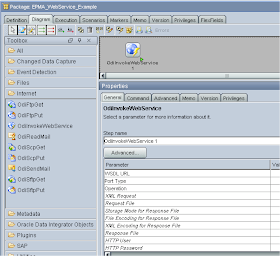

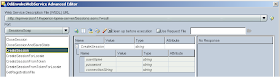

The object of this simple example is to connect to EPMA, retrieve a session id and then create a new folder in EPMA. The first step is to create a package and add the tool ODIInvokeWebService to the diagram.

The tool takes a WSDL URL as one of its parameters. Every EPMA web service call needs a session id, so the first URL to use is: http://<epma machine>/hyperion-bpma-server/Sessions.asmx?wsdl

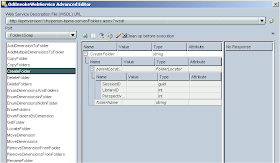

Once you have entered the URL, click the advanced button to connect to the WSDL and retrieve all the available operations. The advanced editor lets you enter values for the operation variables, send the SOAP request and review the results.

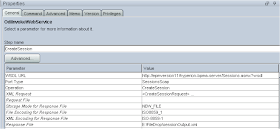

The CreateSession operation takes a UserName, a Password and a connectionString (the connectionString is optional).
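To make the shape of that request a little more concrete, here is a minimal sketch of a SOAP 1.1 envelope for CreateSession built with Python's standard library. The operation and parameter names come from the text above, but the service namespace is a placeholder I made up, so take the real one from the Sessions WSDL before relying on this.

```python
import xml.etree.ElementTree as ET

# Standard SOAP 1.1 envelope namespace.
SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"
# Assumption: placeholder only. The real target namespace is in the WSDL.
SERVICE_NS = "http://example.invalid/epma/sessions"


def build_create_session_request(user_name, password, connection_string=None):
    """Return a SOAP envelope (as text) for the CreateSession operation."""
    ET.register_namespace("soapenv", SOAP_NS)
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    operation = ET.SubElement(body, f"{{{SERVICE_NS}}}CreateSession")
    ET.SubElement(operation, f"{{{SERVICE_NS}}}UserName").text = user_name
    ET.SubElement(operation, f"{{{SERVICE_NS}}}Password").text = password
    # connectionString is optional, so only emit it when a value is given.
    if connection_string is not None:
        ET.SubElement(
            operation, f"{{{SERVICE_NS}}}connectionString"
        ).text = connection_string
    return ET.tostring(envelope, encoding="unicode")
```

In ODI itself the advanced editor generates this XML for you; the sketch is only meant to show what ends up in the request.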

Invoking the SOAP request from the editor returns the required SessionID. Once applied, the parameters “Port Type”, “Operation” and “XML Request” are automatically populated.

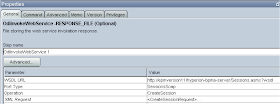

There is also a “Request File” parameter, which lets you supply the request from a file instead of using the editor.

Now that the session id has been retrieved, we need a way of storing the value so it can be used in the next call. A number of parameters come into play.

“Storage Mode for Response File” - takes the values “NO_FILE” (no response is stored), “NEW_FILE” (a new response file is generated; if the file already exists, it is overwritten) and “APPEND” (the response is appended to the file; if the file does not exist, it is created).

“File Encoding for Response File” - character encoding for the response file.

“XML Encoding for Response File” - Character encoding that will be indicated in the XML declaration header of the response file.

“Response File” - File storing the web service soap response.

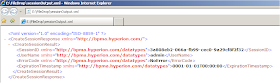
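The three storage modes behave much like ordinary file open modes. Here is a rough Python sketch of the semantics described above; `store_response` is a hypothetical helper of my own, not how ODI implements it.

```python
def store_response(response_xml, mode, path, encoding="UTF-8"):
    """Mimic the documented storage modes for a web service response."""
    if mode == "NO_FILE":
        # The response is not stored at all.
        return
    if mode == "NEW_FILE":
        # A new file is generated; an existing file is overwritten.
        file_mode = "w"
    elif mode == "APPEND":
        # The response is appended; the file is created if missing.
        file_mode = "a"
    else:
        raise ValueError(f"Unknown storage mode: {mode}")
    with open(path, file_mode, encoding=encoding) as handle:
        handle.write(response_xml)
```

For this example “NEW_FILE” is the natural choice, since each run should leave exactly one session id in the file.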

Executing the package produces the following XML file.

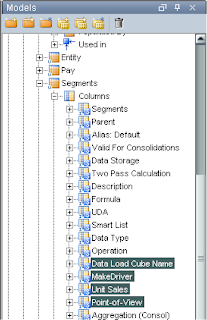

So now we have the XML file containing the session id, but we need to be able to read the id in. As with most data sources, ODI has a technology for XML documents, and it lets you reverse XML files into a set of datastores.

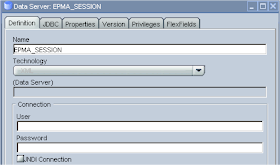

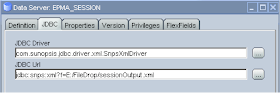

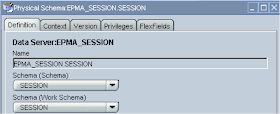

As usual the physical details have to be created in the topology manager.

Only a name is required in the definition.

In the JDBC URL the location of the XML file is entered.

In the physical schema definition any values can be entered for the schema, and a logical schema has to be given and applied to a context.
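As far as I recall, the ODI XML driver URL takes the form `jdbc:snps:xml?f=<file>`, with an optional `s=<schema name>` property. The small helper below just assembles such a string; the helper name and the example path are hypothetical, and the property names are worth checking against the XML driver documentation for your release.

```python
def build_xml_jdbc_url(xml_path, schema_name=None):
    """Assemble a JDBC URL for the ODI XML driver (format assumed, see text)."""
    url = "jdbc:snps:xml?f=" + xml_path
    if schema_name:
        # Assumption: "s" names the schema the driver exposes the file under.
        url += "&s=" + schema_name
    return url


# Hypothetical path to the response file written by the first step.
print(build_xml_jdbc_url("C:/temp/session.xml", "EPMA"))
```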

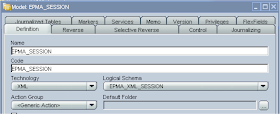

Reversing the XML file follows the same process as for other technologies in the Designer.

Once reversed, a separate datastore is created in the model for each element of the XML file.

Now that the XML has been converted to datastores, you can use standard SQL to query the information. As I am only interested in the session id, a simple query returns the value.

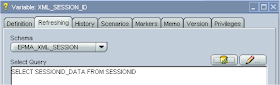
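If you want to sanity check the response file outside ODI, the same value can be pulled out with a few lines of Python. This is plain XML parsing rather than the ODI XML driver, and the element name `CreateSessionResult`, the namespace and the sample id are assumptions of mine, so adjust them to match your actual response file.

```python
import xml.etree.ElementTree as ET

# Made-up sample response; the real file may be structured differently.
SAMPLE_RESPONSE = (
    '<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">'
    "<soap:Body>"
    '<CreateSessionResponse xmlns="http://example.invalid/epma/sessions">'
    "<CreateSessionResult>00000000-0000-0000-0000-000000000000</CreateSessionResult>"
    "</CreateSessionResponse>"
    "</soap:Body>"
    "</soap:Envelope>"
)


def extract_session_id(response_xml, result_tag="CreateSessionResult"):
    """Return the text of the first element whose local name is result_tag."""
    root = ET.fromstring(response_xml)
    for element in root.iter():
        # Strip any "{namespace}" prefix so the lookup ignores namespaces.
        local_name = element.tag.rsplit("}", 1)[-1]
        if local_name == result_tag and element.text:
            return element.text.strip()
    return None


print(extract_session_id(SAMPLE_RESPONSE))
```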

To store the session id I created a variable that is refreshed by using SQL to query the datastore.

This variable can then be added to the package.

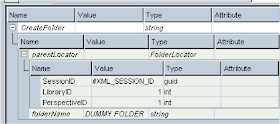

For the final step I want to create a new folder in EPMA. To do this, there is a WSDL available for Folder operations.

The SessionID value can be populated by using the variable. Without the session id the SOAP request will fail, as the request will not be authenticated.

In the parameters of the operation, the only other value that needs to be entered is “Storage Mode for Response File”, which is set to “NO_FILE”.

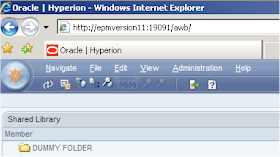
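ODI replaces variable references of the form `#PROJECT_CODE.VARIABLE_NAME` in the request before it is sent. The sketch below imitates that substitution in Python so you can see what the final request looks like; the project code `EPMA`, the variable name, and the element names in the template are all hypothetical and not taken from the real Folder WSDL.

```python
# Hypothetical request body; real element names come from the Folder WSDL.
REQUEST_TEMPLATE = (
    "<CreateFolder>"
    "<sessionID>#EPMA.SESSION_ID</sessionID>"
    "<folderName>ODI_Folder</folderName>"
    "</CreateFolder>"
)


def substitute_odi_variables(request_template, variables):
    """Replace #NAME references with values, roughly as ODI does at runtime."""
    result = request_template
    # Longest names first, so #EPMA.SESSION_ID_2 is not clobbered
    # by a shorter variable called #EPMA.SESSION_ID.
    for name in sorted(variables, key=len, reverse=True):
        result = result.replace("#" + name, variables[name])
    return result


print(substitute_odi_variables(REQUEST_TEMPLATE, {"EPMA.SESSION_ID": "abc123"}))
```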

Executing the package first sends a SOAP request for a session id, and the response is written to an XML file. A refreshing variable then picks up the session id from the XML file and stores it. The last step uses the create folder operation, passing in the stored session id, to create a new folder in EPMA.

So there we have a really simple example of how to use the ODIInvokeWebService tool with a bit of XML and EPMA thrown in.