The question basically is how do you know when there have been any metadata load errors so you can take action, it is true that an error log is produced but there is no real indication that errors have occurred.

There are a number of different methods to attack this problem and I am going to go through a couple of methods that you can use. You would of thought that just checking for the existence of the error log would be enough but unfortunately the error log is recreated each time an interface is executed even if no errors are wrote to it, otherwise the ODI tool “OdiFileWait” could have been used to check for the existence of the file.

One method would be to use something like Jython/Java/OS commands to check the size of the file and if it is not zero bytes then act on it, it is a perfectly acceptable way of dealing with it but not one of my preferred methods.

The first method I am going to take you through is based on the statistics that are generated in one of the steps on the metaload knowledge modules,

I did write a blog on how to report statistics correctly so it is worth having a look through that first to get an understanding of what I am going to be talking about.

Right, I will now consider you know how to report statistics correctly by updating “Report Statistics” step in the knowledge module.

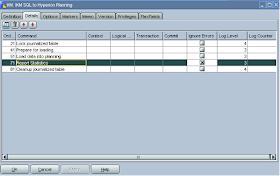

If you run an interface metaload that generates load errors and check out the “Report Statistics” step in the operator you will see the number of records processed and how many of them were errors

So by looking in the operator we can see that 1 record was rejected but we need to be able to act on that error, to do this we need to access the ODI work repository where it stores the statistical information, before we can do that we need to set up a connection to the work repository, I will briefly go through setting up the connection which I am sure you can do for yourself by now.

In the Topology manager create a new Data Server for the technology your ODI work repository is using.

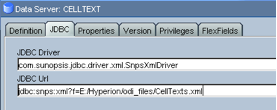

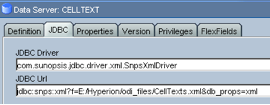

Add the JDBC connection information.

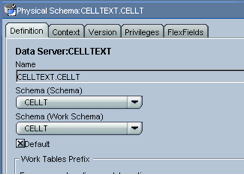

Add the physical schema information

Finally add a logical schema to the context you are using.

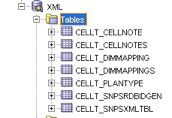

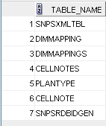

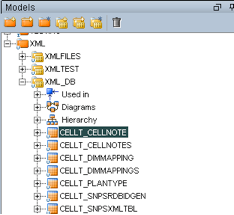

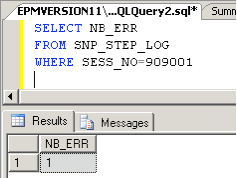

With the connection in place we can now access the tables in the work repository, the table that stores the statistical information is “SNP_STEP_LOG”.

As you see there are fields NB_ROW,NB_INS,NB_ERR

These relate to the total number of records, the records inserted and the number of error records, so you can see the values for the interface I ran earlier, the whole record is defined by the session number.

The session number for the interface I ran was 909001. So if you think about it by querying this information you will be able to find out if and how many errors were generated.

That is all fine but how are we going to know what the session number is for the interface being run, luckily ODI has an API command that will tell us just that. The command is odiRef.getSession(“SESS_NO”)

Now we need a way of storing the value produced from the query and once again ODI has just the thing for it – variables, to be specific a refreshing variable, a refreshing variable lets you set the value of a variable by the use of a query.

Create a new variable, it can be set to a numeric type.

In the refreshing tab select the logical schema for the work repository that was set up in the topology manager earlier.

The query is added, note you will need to surround the ODI API with <%= %> for it to be able to compile and execute correctly, you will not be able to validate the query as the query can only use the API commands at runtime.

With this refreshing variable we can now store the number of errors that have been generated, we just need to put this all together in a package and add a check to act on the value of the refreshing variable.

If you drag the variable onto the diagram again and set it as an evaluate variable.

This means we can check the current value of the variable and if it is greater than 0 then act on it.

In my example if the number of errors is greater than 0 then I send an email out advising there were errors, the amount of errors and attaches the error log, if the errors were 0 then I send out a success email. This is just an example you could add whatever process you want depending if there were errors or not.

Well that is the first method covered and it is probably the method I prefer to use.

The second method also uses a refreshing variable but this time the variable will use a query to read the first line of the error log and if it doesn’t contain a first line it errors and follows the next step of a process, if it does contain an error it follows a separate step.

First of all you will need to have a logical schema that points to directory location of the error logs.

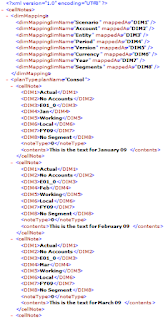

If you have ever looked in the operator at the SQL generated when loading from a text file you will notice a long string of ODI specific parameters for the file that is being loaded.

Basically we are just going to lift the code that is generated and put that into a refreshing variable.

If you want to generate the code for yourself then just create a new data store using file technology

In the Files tab just set it is as delimited, you don’t need to change any other options.

In the columns tab manually add a new column of string type and set the lengths to a high value. Now you can just create an interface and use the data store as the source and for the target it doesn’t really matter as we are just after the code that is generated.

Execute the interface, it doesn’t matter if it fails just check the operator for the Load data step.

Now you can just copy the code that is generated.

Create a variable, the data type of Alphanumeric can be chosen.

Set the logical schema to the one that was created earlier that points to the error log directory.

Paste in the code

select C1 C1_C1

from TABLE

/*$$SNPS_START_KEYSNP$CRDWG_TABLESNP$CRTABLE_NAME=

ERROR_LOGSNP$CRLOAD_FILE=E:\FileStore\/enterr.logSNP $CRFILE_FORMAT=DSNP$CRFILE_SEP_FIELD=09SNP

$CRFILE_SEP_LINE=0D0ASNP$CRFILE_FIRST_ROW=0SNP

$CRFILE_ENC_FIELD=SNP$CRFILE_DEC_SEP=SNP$CRSNP

$CRDWG_COLSNP$CRCOL_NAME=C1SNP$CRTYPE_NAME=STRINGSNP

$CRORDER=1SNP$CRLENGTH=1000SNP$CRPRECISION=1000SNP$CR$

$SNPS_END_KEY*/

Now I updated the code slightly, I removed the C1_C1 as that is not really required, I also updated the section

$CRLOAD_FILE=E:\FileStore\/enterr.log

to

$CRLOAD_FILE=<%=odiRef.getSchemaName("D")%>\enterr.log

I updated it to use the ODI API command getSchemaName, this will convert the current schema details at runtime into the full path, this is good practice as if the directory ever changes you only have to change the location in the topology manager.

So the final code is

select C1

from TABLE

/*$$SNPS_START_KEYSNP$CRDWG_TABLE

SNP$CRTABLE_NAME=ERROR_LOG

SNP$CRLOAD_FILE=lt;%=odiRef.getSchemaName("D")%>\enterr.log

SNP$CRFILE_FORMAT=DSNP$CRFILE_SEP_FIELD=09

SNP$CRFILE_SEP_LINE=0D0A

SNP$CRFILE_FIRST_ROW=0SNP$CRFILE_ENC_FIELD=

SNP$CRFILE_DEC_SEP=SNP$CRSNP$CRDWG_COLSNP$CRCOL_NAME=C1

SNP$CRTYPE_NAME=STRINGSNP$CRORDER=1SNP$CRLENGTH=1000

SNP$CRPRECISION=1000SNP$CR$$SNPS_END_KEY*/

Just to recap, if you use this refreshing variable it will try and read in information in the file, if will either fail if there is no data or succeed if there is data in the file.

Now we can put this into a package like the earlier example

Then just add steps to the success/failure of the refreshing variable.

So there we have it two examples of error handling when using the Hyperion KMs, hopefully you have understood and found it useful what I have tried to explain, I know it can get a little confusing at times. If you want it explaining further then just get in touch.

Until next time!!!