A few recurring questions have been raised recently around FDMEE/Data Management, so I thought I would collate them and put a few posts together to address them. This saves me repeating the same response, as I can refer to these posts instead.

The first question is:

“Is it possible to have EPM cloud to cloud or hybrid integrations where the target application is the same name as one that has already been registered?”

I have written about EPM cloud to cloud integrations and on-premise hybrid functionality in the past. The functionality works well until you try to add an application with the same name as one that is already registered.

This has definitely caused problems. If you wanted to move data from, say, a test to a production cloud instance, where the application name would typically be the same, it wasn’t possible, which rendered the cloud to cloud functionality useless.

It looks like Oracle finally recognised this design flaw and from the 17.10 EPM Cloud release it is now possible to register a duplicate application name.

Update: the duplicate target application name functionality is available from FDMEE 11.1.2.4.220

The duplicate target application name functionality in the cloud works against remote cloud connections or a local target application.

You may ask why you would want to add a duplicate local application. I am going to go through an example of why you might want to do this, which also addresses another question that I have seen raised quite often.

“Is it possible to have the period and year in the rows of source data and not have to drive the data load by the period in the POV or start/end period?”

I have already written about the functionality to load data when the period and year are contained in a source file. The problem with this functionality is that it is still driven by the period in the POV when importing from the workbench, or by the start/end period when running a data load rule.

The way around this is to change the period/year dimensions to a generic class and then define a data table column name for them in the target application dimension details. The problem with this is that it would affect all the integrations, so you couldn’t have a mixture of loads driven by the POV and loads driven from the period information in a source file. The ability to add a duplicate application allows both methods to operate in tandem.

So let me go through a simple example which uses the duplicate target application functionality with the ability to drive a data load from the period information contained in a source file.

First, I am going to add a new local target application. The concept would be the same if adding a cloud target application.

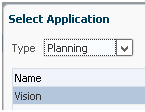

Before the 17.10 or 11.1.2.4.220 release you would select the application type and then application name.

From 17.10 or 11.1.2.4.220 there is an option to define a prefix.

The prefix can be up to 8 characters long.

The application is added with the prefix.

In the original target application, the period dimension is assigned a period dimension class and the year a year dimension class. This means any of the existing integrations are driven by the POV.

The existing integrations will be unaffected by the duplicate application.

For the duplicate application, the Period dimension is set as a generic dimension class with the data table column name assigned to UD4, and the Year dimension is set as a generic dimension class with the data table column name set to UD5.
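To make the contrast between the two registrations easier to see, here is a minimal sketch that models the dimension settings as plain Python data. It is only an illustration of the configuration described above and not an FDMEE API: the `DimensionSetting` class, the dictionary names and the `driven_by_pov` helper are all hypothetical.

```python
# Illustrative only: a plain-Python picture of the dimension settings described
# above. Every name here is hypothetical; this is not an FDMEE API.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DimensionSetting:
    dimension: str
    dimension_class: str
    data_column: Optional[str] = None  # data table column name, if one is assigned


# Original registration: Period and Year keep their own dimension classes.
original_app = {
    "Period": DimensionSetting("Period", "Period"),
    "Year": DimensionSetting("Year", "Year"),
}

# Duplicate registration: both are generic and stored in UD columns.
duplicate_app = {
    "Period": DimensionSetting("Period", "Generic", "UD4"),
    "Year": DimensionSetting("Year", "Generic", "UD5"),
}


def driven_by_pov(app):
    """Loads stay POV-driven while Period and Year keep their own classes."""
    return (app["Period"].dimension_class == "Period"
            and app["Year"].dimension_class == "Year")
```

With this picture, `driven_by_pov(original_app)` is true and `driven_by_pov(duplicate_app)` is false, which is the split that lets both load styles coexist.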

Now a period mapping is created where the period name does not relate to a period or year and can be used in the POV.

At first, I created it under the new duplicate target application.

After testing, I found that the period is not picked up in the POV and the values are read for the original target application. I also found that errors were generated when using period mappings for the duplicate application.

I removed the period mapping from the duplicate application and added one to the original application.

The target period month and target year are not important, but they are required for the mapping to validate.

The source csv file I am going to load contains both the periods and years I want the data to be loaded to.
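As a rough illustration of what such a file implies, the sketch below reads a small CSV and lists the distinct period/year combinations found in its rows. The column layout, member names and amounts are assumptions made up for the example and are not the file used in this post.

```python
import csv
import io

# Hypothetical sample: the column order and member names are assumptions,
# not the file used in this post.
SAMPLE = """Account,Entity,Period,Year,Amount
4110,110,Jan,2017,100
4110,110,Feb,2017,200
4110,110,Jan,2018,300
"""


def periods_in_file(text):
    """Return the distinct (period, year) pairs found in the rows, in file order."""
    seen = []
    for row in csv.DictReader(io.StringIO(text)):
        key = (row["Period"], row["Year"])
        if key not in seen:
            seen.append(key)
    return seen


print(periods_in_file(SAMPLE))
```

A single file like this spans several periods and years, which is why a load driven by one POV period or a start/end period range is a poor fit for it.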

On to the import format. The duplicate application name is selected as the target, and the mappings include the period and year dimensions because they were defined as generic in the target application dimension details.

There is nothing to note in the location definition except that the import format is selected.

The POV is set to the new location and the period to “No Per/Yr”, which was created earlier.

A new data load rule is created, and the source csv file is uploaded and selected. The remaining properties were left as default, meaning this rule uses the numeric data only load method.

You will notice that the data load mappings also include the period and year dimensions. Usually these dimensions would be mapped by the period mapping, but as we are using a different method they are going to be controlled by the data load mappings.

For this simple example, all mappings except for the year dimension were created as like for like.

The source file contains the year in the style of “YYYY”, so in this example “2017”, and it needs to be mapped to the target planning application format of “FYYY”. For this I used a FORMAT mapping type which prefixes the target with “FY”, ignores the first two characters in the source and includes the third and fourth characters.
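The transformation itself is easy to sketch outside the product. The Python function below is a simplified emulation, not Data Management's implementation. It assumes a mask in which `?` copies the next source character, `#` skips it, and anything else is written as a literal. The function name and the example mask `FY##??` are my own illustration of the rule described above.

```python
def apply_format_mask(source, mask):
    """Build a target member from `source` using a simplified format mask.

    Assumed mask semantics (a simplification for illustration):
      '?'  copy the next source character
      '#'  skip the next source character
      any other character is written to the target as a literal
    """
    out = []
    pos = 0  # position of the next unread source character
    for ch in mask:
        if ch == "?":
            if pos >= len(source):
                raise ValueError("mask expects more characters than the source has")
            out.append(source[pos])
            pos += 1
        elif ch == "#":
            pos += 1
        else:
            out.append(ch)
    return "".join(out)


print(apply_format_mask("2017", "FY##??"))  # FY17
```

The two literals supply the “FY” prefix, the two `#` characters drop “20”, and the two `?` characters carry over “17”.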

Now to load the source data through the workbench.

The source data has been successfully imported and mapped. You can see that the year values have been transformed into the correct format.

The export is then executed to load the data from data management into the target application.

To confirm the data has been loaded correctly, an ad hoc retrieve was performed in Smart View.

So now I am able to drive the data load from the period/year values in a source file. Using a duplicate application means the existing data loads carry on being driven by the POV, with their integrations against the original target application.

I am going to leave it there for this part as I don’t want to overload you with too much information. In the next part I will go through some common misunderstandings with the all data type load method and look at mapping options for the all data type.

Finally, they changed the way data is extracted to look at the database column rather than period mappings only.

That probably means they changed the AIF_HS_BALANCES view in 220. Let's see when it's released.
