I have been meaning to go through all the new functionality in planning 11.1.2, there are quite a few changes and additions in this new release, planning has had a bit of an overhaul in some areas. I thought maybe some of the new content may have been covered in other blogs but I have not seen much, so until it is covered I will go through what I can.

Today I am going to look at a new piece of functionality that lets you map data from a planning BSO database to one or more BSO/ASO databases.

One example of why you may want to use this functionality is you say you have a reporting database such as an ASO database reporting actuals and you want to push a proportion of the forecast/budget data from the planning application into the ASO model. It could also be that you have a number of different cubes that you need to push different areas of data to from your planning application

In the past if you wanted to achieve this you would create a partition between the BSO planning database and the ASO database, this could sometimes be troublesome because when planning refreshes it destroys the partition so you would have to put in an automated process to get around this.

When I first read about this new functionality I assumed it would create a replicated partition and push the data to the mapped database, I thought it would manage the partition so it wasn’t destroyed with a refresh, this is not the way it works though but more about that later.

Right, so let’s go through how to set up a mapping.

My source is going to be the trusty Planning sample application (Plansamp)

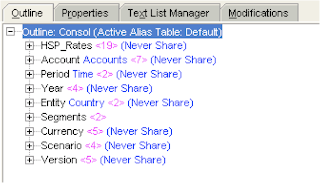

The Plansamp application has dimensions: - HSP_Rates (not that you usually see this dimension from within planning), Account, Period, Year, Entity, Segments, Currency, Scenario and Version.

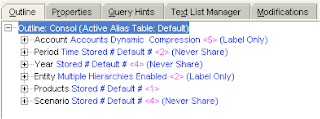

The target database is a ASO database (PlanASO) loosely based on the BSO db.

The dimensionality is similar except it doesn’t contain the HSP_Rates, Currency & Version dimensions, the Products dimension is closely linked with Segments dimension of BSO db.

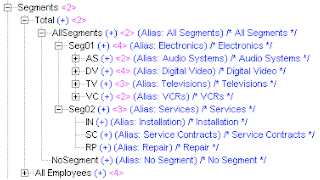

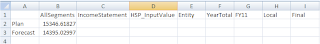

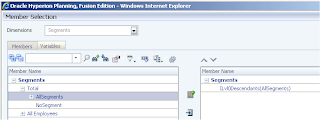

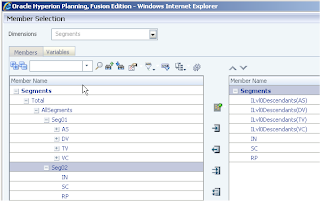

The segments dimensional structure of the planning sample db in case you have not seen it before.

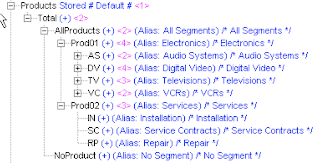

As you can see the ASO products dimension is very similar, basically AllSegments equals AllProducts, Seg01 equals Prod01, Seg02 equals Prod02.

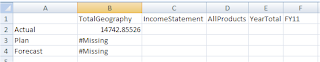

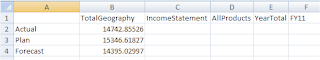

A quick high-level retrieve from the ASO db shows Actual data but Plan/Forecast is missing.

The planning sample db contains the Plan/Forecast data I want to push to the ASO db, these are the steps to perform to map the data.

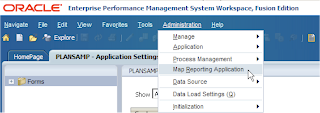

To access the mapping functionality go to Administration > Map Reporting Application.

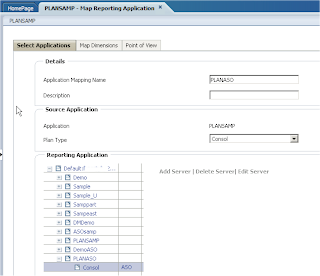

First you give a name to your mapping and then select the essbase database you want to map to, it is possible to add essbase servers if the default server doesn’t contain the database you require.

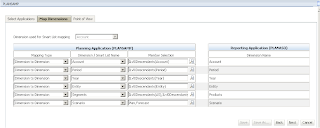

The next screen is the main mapping section, this is where you define which members from the source planning database will map to your target database dimensions.

The map dimensions screen will automatically map all the dimensions that match between source and target.

As the target reporting application is ASO the rule is all the mappings have to map to level0 members in the ASO database.

All the members that are mapped must resided in both and target applications.

If the reporting application is a block storage database, then the default members can be any stored members on the target database.

So basically you just need to go through and map the areas that you want to push data to your reporting application.

By default the selection will be all level 0 descendants of the dimension.

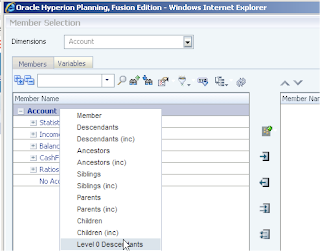

If you click the member selection button you are given a number of different member functions to choose from.

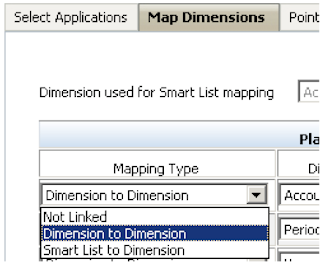

There are three mapping types available, “dimension to dimension” which is selected by default.

“Not Linked” – which is saying there is no direct mapping between the dimensions, this could be used if you say you wanted “Forecast” in your source to be mapped “Actual” in your target or you want to map a number of members in your source to one member in your target, this is defined in the “Point of View” section which is the next page in the configuration.

“Smart List to Dimension” - Displays the available Smart Lists. When a Smart List is selected, the account members associated with it are displayed. If there is only one member, it is automatically selected.

With Smart List to dimension mapping, the source plan type must contain a dense Account dimension with at least one member associated with a Smart List.

I did have an issue using the smart list functionality, every time I went to save the mapping it came up with a JavaScript alert popup warning “You must select a member for the Planning dimension”. I left it there with the smart list error until I have a look at resolving another day.

Right back to the mapping, as I highlighted earlier the source segments members are slightly different to the target product members.

I would of thought I could just select Level 0 descendants of “AllSegments” as that should pick all the correct members I want to map.

Unfortunately there is a rule that states :-

If Descendants (“AllSegments”) is selected in a mapping, the “AllSegments” member must exist in the reporting application.

This meant I had to map where the member names matched.

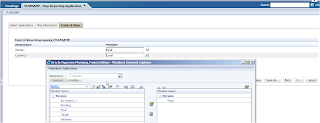

Once all members have been mapped you move on to the “Point of View” section.

This is where you can define which members are used in the source mapping that does not exist in the target. As “Version” and “Currency” don’t exist in the reporting application the members of where the data needed to be taken from the source were chosen.

The POV section is also used when you choose “Not Linked” as the mapping type, which I briefly went over earlier.

The dimension “HSP_Rates” is automatically dealt with if it doesn’t exist in your target, so you won’t see it appearing anywhere unless the dimension did exist in your target.

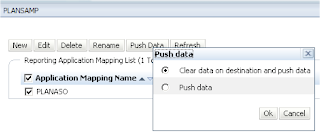

Once the mappings have been saved you have the option to “Push Data” which will invoke your mappings and move the data from source to target.

You are given two options; you can either just do a straight push or choose to clear out the data against your defined area on the target first.

A quick retrieve of the data on the target and you can see the plan, forecast data has been pushed across.

Like with everything I am not happy until I know what is going on behind the scenes, is it a partition?

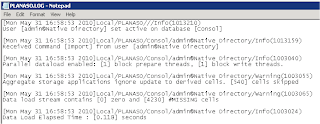

Well the answer is no, if you have a look in the planning applications essbase log you will get an idea of what is happening.

The essbase calculation command DATAEXPORT is being executed, so I assume that a calc script is generated in the background with a FIX on the members defined in the mappings.

If you don’t know much about the DATAEXPORT command it is worthwhile checking out the essbase technical reference documentation. -

Something you be aware of when you using DATAEXPORT is dynamic calc members can severely hit the performance of the export so watch out for this if you are going to use this functionality.

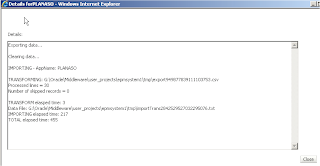

Now when using the command the output is either sent to a flat file or to a relational database table using an ODBC DSN. In this case it is using a flat file and you can find out what file it is generating by going to “Tools” > “Job Console” in planning.

If you click the link under “Run Status” it will open a window with further information.

The export generates a csv file a temporary directory, if you go into the directory after you have run a push data the file will still exist.

I would watch out because it looks like this directory does not get cleaned out and if you start running big data exports are a number of times it could easily start consuming a fair bit of space on the drive.

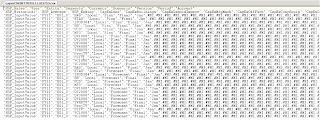

If you open the file the output looks exactly the same as if you would of created your own calc script using the DATAEXPORT command.

After the output csv file has been produced, the output is then transformed into another flat file (.txt) to map to target members or remove any unnecessary dimensions, in my case remove HSP_Rates, Version and Currency dimensions.

The text file is then loaded into target reporting database adding to any existing values.

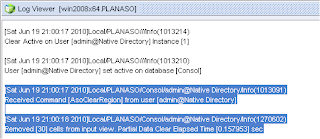

If you choose the option of “Clear data on destination..” then the data for the area that has been defined will be removed.

If it is an ASO application then the equivalent of the Maxl

“alter database appname.dbname clear data in region 'MDX set expression' physical;” is executed.

I assuming it is a physical and not a logical clear that is executed, as I don’t see a slice created after pushing data.

If the reporting application is a BSO type database then a CLEARDATA command will be executed for the area that has been defined.

I don’t see any utility to run this from command line so if you wanted to push data you would have to log into planning and run it manually.

Anyway the functionality can easily be replicated and scheduled by creating maxl script running a calc script that contains the DATAEXPORT command and then a data load.

Well that’s enough covered for today, I will let you decide if you are impressed with this new functionality.

Until next time whenever that may be.