Today is the day to have a look at some of the planning command line utilities; there are a number of utilities inside the bin directory that used to the utils directory until 9.3. Some of the utilities have been around since time begun, some of them have been modified and they’re a couple of new ones just for version 11.

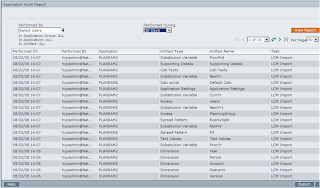

With LCM being thrown into the limelight I can see some of them not being used as often anymore, no doubt that LCM just uses the same java classes as the utilities use.

inside in the bin

The most noticeable new addition is the Outline load utility, this has been on peoples wish list since the early days of planning, it should have been developed a long long time ago and then the emphasis wouldn’t have been put so much on HAL and the awful EPMA (I am not much of a fan of EPMA maybe version 11 will change my mind a bit but we will see).

I have always found the documentation on the utilities to be a little scarce but it seems with version 11 they have got there acts together and covered most of them.

The Outline load utility allows you to load metadata and data, I am only going to cover off the metadata today, I did have a quick read through the data loading and to be honest I would jump to using a essbase data load rule any day.

If you are going to use the Outline utility to load metadata I would recommend reading through the sections “Loading Metadata” and “Command Line Parameters for the Outline Load Utility” in the planning admin documentation.

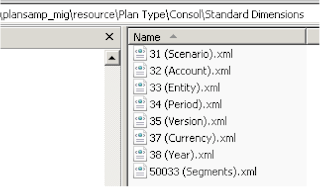

The utility will let you load metadata for Account, Period, Year, Scenario, Version, Currency, Entity, user-defined dimensions, attributes, UDAs and values for exchange rates, so pretty much everything.

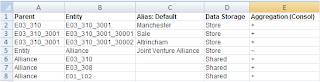

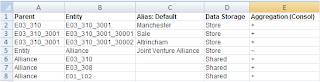

First thing to do is a create a load file with metadata, If you have ever used HAL to load metadata then creating the CSV load file will seem very familiar, you create a separate load file for each area you are going to update.

I usually find creating a template in excel with drop down boxes in the cells with different member properties the best option and then saving it as csv once completed.

The documentation has details on the member properties, though the HTML version doesn’t seem to show them so use the PDF (page 87).

It is worth mentioning that if your load file doesn’t contain all the properties then it will use the default or inherit from the parent member, so if it is a simple hierarchy you are trying to create you don’t have to put too much effort into it, the only concept you have to work with is parent/child members.

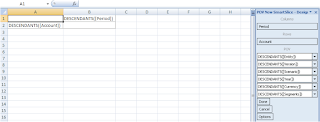

The utility doesn’t just let you insert/update members you can also delete members (Level0, Idescendants and descendants)

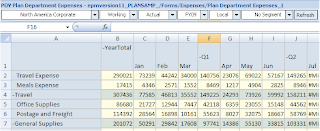

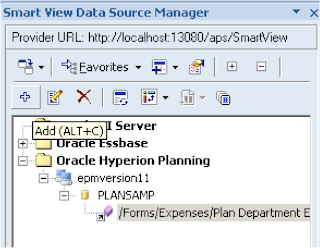

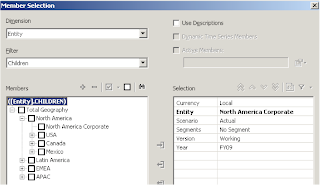

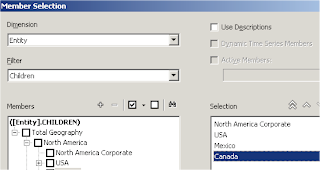

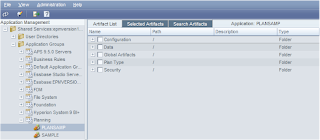

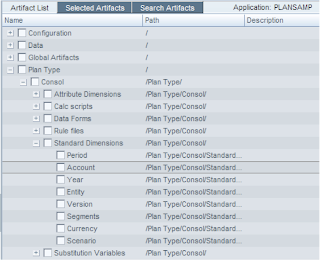

For this exercise I am going to load a simple hierarchy into the entity dimension of the planning sample application.

Once the file has been saved as .csv you are ready to invoke the utility. Be careful when creating the headers as they are case sensitive and have to match exactly, I didn’t put a space in the Alias header and it failed in the first attempt.

There are a multitude of options for using the command line that are covered in the documentation, the format being:-

OutlineLoad [-f:passwordFile][/S:server] /A:application /U:userName [/M] [/I:inputFileName/D[U]:loadDimensionName/DA:attributeDimensionName:baseDimensionName] [/N] [[/R] [/U]] [/C] [/F] [/K] [/X:exceptionFileName] [L:logFileName] [/?]

Now before I create my command line I will just point a new option that has been included in most of the utilities for this release and that is the ability to use a password file that contains the encrypted password, in previous releases you would have to hard code the password.

To create the password file you just need to run the utility with the path to the file.

Example :- PasswordEncryption.cmd passwordFile

Now you have the encrypted file you can reuse it for all the utilities.

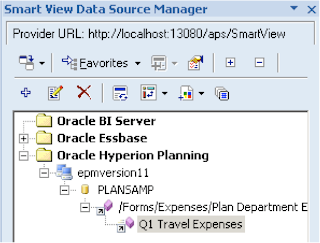

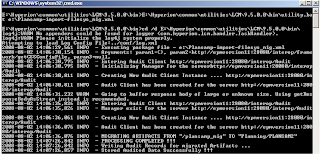

OutlineLoad -f:passfile.txt /A:PLANSAMP /U:hypadmin /I:entityload.csv /D:Entity /L:outlineLoad.log /X:outlineLoad.exc /N

I have used /N filter as this performs a dry run.

Ok so the dry run completed with no exceptions so time to run it normally.

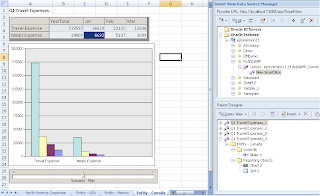

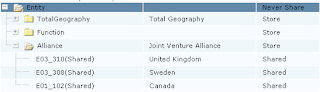

And the output was the desired result.

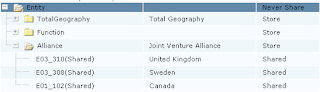

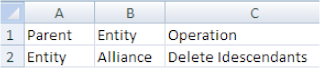

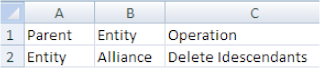

If you were told the Alliance part of the hierarchy was not required and should be deleted then you could easily do this by using the utility.

Just create the above and run the same command line as before and the Alliance part of the hierarchy will be removed.

Another couple of filters in the command line may be useful, /O which maintains the order members of the load file /-O ignores the order.

/H to order the records internally in parent-child order which is default, if your load file is in the correct order then you could use /-H which is much faster.

If you use the /C filter then it will perform a cube refresh.

I know this was just a simple example but it doesn’t take much more effort to load complex hierarchies and just compare it to the nightmare EPMA load files, I just wish this utility had been around before and was available for 9.3.

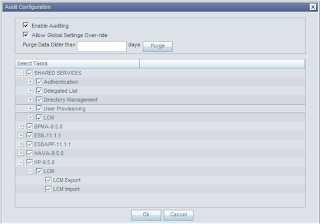

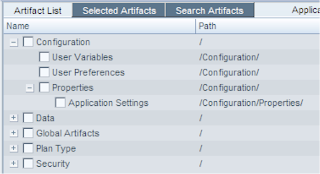

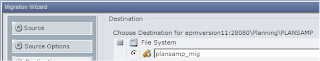

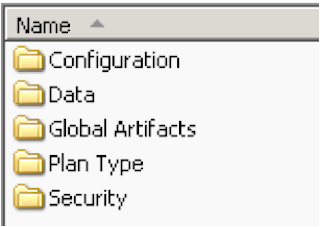

Another new utility is the ability to export/import task lists; another feature that has been long awaited though can also be done through LCM.

It is easy to use and does not have many filters.

TaskListDefUtil [-f:passwordFile] importexportFILE_NAMETASK_LIST_NAME-all SERVER_NAME USER_NAME APPLICATION

Examples

To export all task lists:-

TaskListDefUtil.cmd –f:passfile.txt export -all localhost hypadmin PLANSAMP

To import task list:-

TaskListDefUtil.cmd -f:passfile.txt import TaskList.xml localhost hypadmin PLANSAMP

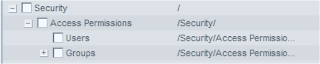

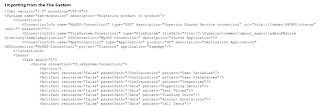

The export/import security utility now handles security on forms, composite forms, form folders and task lists, once again this can be done through LCM and has probably been enhanced for use in LCM.

Example output

User1,DataForm2,read,MEMBER, SL_COMPOSITE

User2,Folder3,write,MEMBER,SL_FORMFOLDER

User3,DataForm4,write,MEMBER,SL_FORM

One of the utilities that has always been useful for me but has never had much press is updateUsers (I am not even sure if has been documented in previous releases). If you ever been involved in migrations across servers and use native users and groups then if you don’t use this utility you can loose their access permissions, this is because planning uses a native SID value and it changes across environments. You don't need to be concerned about this utility if you have created native users/groups using the import/export utility because they will have been set up with the same SID value.

Syntax :- updateusers.cmd [-f:passwordFile] serverName adminName applicationName

Nearly all of the other utilities are self-explanatory and have been around for a while, they are well documented now with a quick search.

There are a couple of utilities I have not covered:-

CalcMgrCmdLineLauncher.cmd

HBRMigrateSecurity.cmd

These both relate more to EPMA and the new Calculation Manager that I may cover in the future.

Well that’s me done for today I can get out of the bin now.