There are a few different ways to approach renaming members. The method I am going to cover today involves going directly to the planning database tables, which understandably might not be favoured by some, so to be complete I will use the next blog to go through another method that is much more convoluted but stays on a supported track.

There are occasions when updating alias members is not enough and you have to resort to renaming members. There has never been any functionality in the available tools (ODI, DIM, HAL and the Outline loader) to rename members directly. I am not sure why this functionality has not been provided yet, as it doesn’t seem a complex area to build into an adaptor and would definitely be beneficial; hopefully the ability to do so will be made available one day.

Let’s go through the first method. I must point out that if you plan on renaming entity members, please make sure that there is no workflow process running for the members that are going to be updated. I also do not promote changing the underlying planning tables without experience in this area, so don’t hold me responsible if anything goes wrong; it is all at your own risk :)

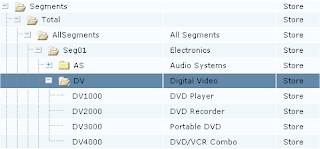

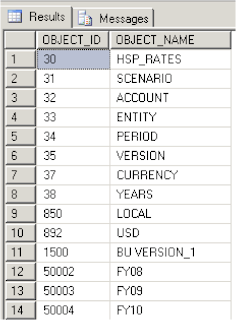

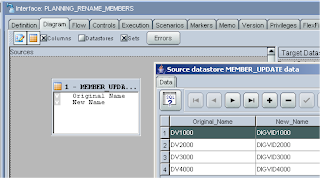

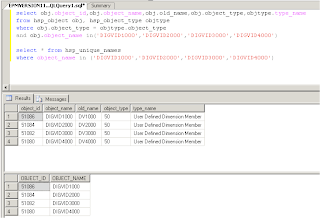

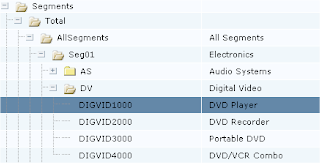

In this example I want to rename the level 0 DV members, that is DV1000 – DV4000.

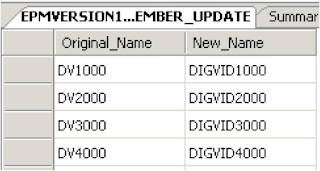

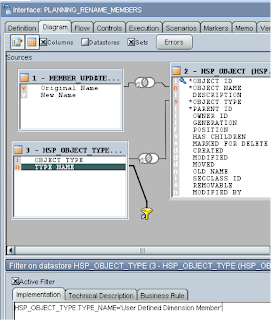

I have a source table that contains just two columns, one with the original member name and the other with the name I want to rename the member to, so nice and simple.

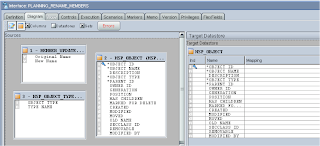

In the planning application’s relational database there are a few tables that we will need to use to be able to update the member names.

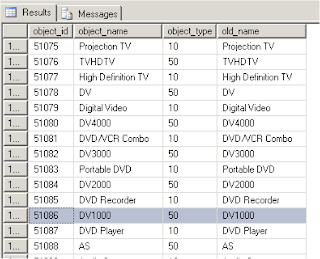

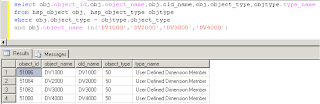

The first and most important table is HSP_OBJECT. This is the core table in the planning architecture: nearly all other tables relate to it in some way, and the key to it all is OBJECT_ID. This is not going to be a lesson on everything in the table, though; it only covers the columns we are interested in.

The columns that are important for this exercise are:

OBJECT_ID – Each object inside planning has a numerical id, so for each member there will be an object id.

OBJECT_NAME – In our case this holds all the member name information.

OBJECT_TYPE – This column is driven by an ID and defines what the object is within planning e.g. form, user, member…

OLD_NAME – This contains the previous name of an object, so if a member is renamed in planning, OBJECT_NAME will hold the new name and OLD_NAME the previous name. Some would argue that I don’t need to touch this column, but I have seen instances where the value can be null, and just to be certain I am covering all angles I am including it.

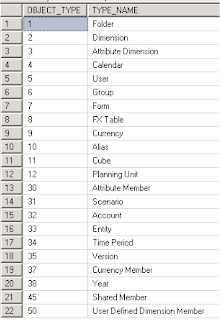

The next table is HSP_OBJECT_TYPE; this table holds descriptive information about what each object in planning is.

The table links to HSP_OBJECT by the OBJECT_TYPE column.

By linking the two tables together you can make sure you are only retrieving information for the correct type. In this example all members are part of a user defined dimension, so they will have an OBJECT_TYPE of 50 associated with them. It is important that this table is used, as we only want to update member information and not another object, like a form, that could have the same name.
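As a rough illustration of that join outside ODI, the sketch below builds a cut-down copy of the two tables in an in-memory SQLite database and selects objects by type name. Only the columns discussed above are modelled; the sample rows, the type id used for the form and the function name `members_of_type` are my own assumptions for the demo, not something planning provides.

```python
import sqlite3


def build_demo_db():
    """Create a cut-down HSP_OBJECT / HSP_OBJECT_TYPE pair with sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE HSP_OBJECT_TYPE (
            OBJECT_TYPE INTEGER PRIMARY KEY,
            TYPE_NAME   TEXT
        );
        CREATE TABLE HSP_OBJECT (
            OBJECT_ID   INTEGER PRIMARY KEY,
            OBJECT_NAME TEXT,
            OBJECT_TYPE INTEGER,
            OLD_NAME    TEXT
        );
        -- 50 is the type id quoted in the post; 7 for a form is an assumption
        INSERT INTO HSP_OBJECT_TYPE VALUES (50, 'User Defined Dimension Member');
        INSERT INTO HSP_OBJECT_TYPE VALUES (7, 'Form');
        -- a member and a form that happen to share the same name
        INSERT INTO HSP_OBJECT VALUES (1001, 'DV1000', 50, NULL);
        INSERT INTO HSP_OBJECT VALUES (2001, 'DV1000', 7, NULL);
    """)
    return conn


def members_of_type(conn, type_name):
    """Return (OBJECT_ID, OBJECT_NAME) rows whose type description matches."""
    sql = """
        SELECT o.OBJECT_ID, o.OBJECT_NAME
        FROM HSP_OBJECT o
        JOIN HSP_OBJECT_TYPE t ON t.OBJECT_TYPE = o.OBJECT_TYPE
        WHERE t.TYPE_NAME = ?
        ORDER BY o.OBJECT_ID
    """
    return conn.execute(sql, (type_name,)).fetchall()
```

Filtering on the type description rather than on the name alone is what keeps the form called DV1000 out of the result.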

The final table is HSP_UNIQUE_NAMES. As you are probably well aware, member names and aliases all have to be unique within planning; this table keeps a record of all the unique names to make sure you don’t add a new member that already exists.

The table links back to the HSP_OBJECT table by the important OBJECT_ID. When we update the member name we will also have to update the name in this table to keep everything in sync.
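Because the names have to stay unique, it may be worth checking the new names against HSP_UNIQUE_NAMES before touching anything. The sketch below is one way to do that, again against an in-memory SQLite stand-in rather than a real planning repository. The `MEMBER_UPDATE` column names (`ORIGINAL_NAME`, `NEW_NAME`), the sample rows and the function `find_collisions` are assumptions, and it relies on the names in HSP_UNIQUE_NAMES being stored in uppercase, which is covered later in this post.

```python
import sqlite3


def build_demo_db():
    """Create stand-ins for HSP_UNIQUE_NAMES and the MEMBER_UPDATE source."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE HSP_UNIQUE_NAMES (
            OBJECT_ID   INTEGER PRIMARY KEY,
            OBJECT_NAME TEXT
        );
        CREATE TABLE MEMBER_UPDATE (
            ORIGINAL_NAME TEXT,
            NEW_NAME      TEXT
        );
        INSERT INTO HSP_UNIQUE_NAMES VALUES (1001, 'DV1000');
        INSERT INTO HSP_UNIQUE_NAMES VALUES (1002, 'DV2000');
        INSERT INTO HSP_UNIQUE_NAMES VALUES (1003, 'DV9000');
        -- the first rename targets a name that is already taken
        INSERT INTO MEMBER_UPDATE VALUES ('DV1000', 'dv9000');
        INSERT INTO MEMBER_UPDATE VALUES ('DV2000', 'DV2000_NEW');
    """)
    return conn


def find_collisions(conn):
    """Return the requested new names that already exist as unique names."""
    sql = """
        SELECT m.NEW_NAME
        FROM MEMBER_UPDATE m
        JOIN HSP_UNIQUE_NAMES u ON u.OBJECT_NAME = UPPER(m.NEW_NAME)
        ORDER BY m.NEW_NAME
    """
    return [row[0] for row in conn.execute(sql)]
```

An empty list means none of the new names is already in use. The check is deliberately simple and would also flag a new name that is only taken by another member being renamed in the same batch.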

Now we have the source and targets sorted out we can move on to ODI.

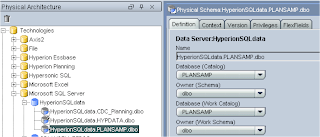

You will need to set up a physical connection to your planning application’s database.

I am not going into the details of setting up physical and logical schemas; if you don’t know how to do it, read back through my early blogs. I am also going to assume you have set up the schemas for your source data.

Once you have set up the connection, create a model using the logical schema and reverse the required tables (HSP_OBJECT, HSP_OBJECT_TYPE, HSP_UNIQUE_NAMES).

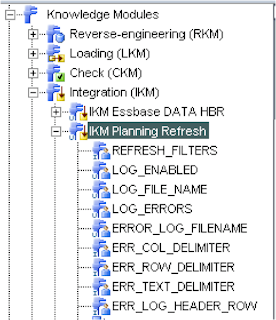

Before we start building an interface we need to make sure the correct KMs have been imported. As the integration is updating the target, one of the IKM UPDATE knowledge modules can be used.

There are a number of different UPDATE IKMs depending on the technology in use. I am not going to go through them all, because I don’t feel performance will be an issue with the number of records you are likely to update when dealing with planning; you can read the documentation to see the differences between each KM. In this example I am going to use the plain and simple “IKM SQL Incremental Update”.

When using UPDATE KMs you will need a primary key. There is no need to worry in this example, as OBJECT_ID is already defined as a primary key in the tables.

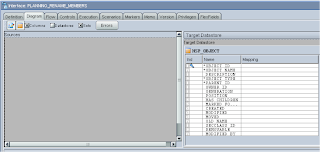

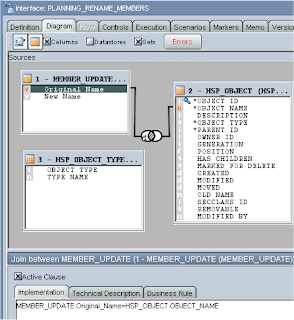

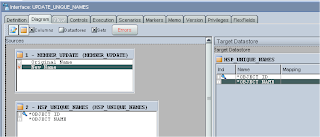

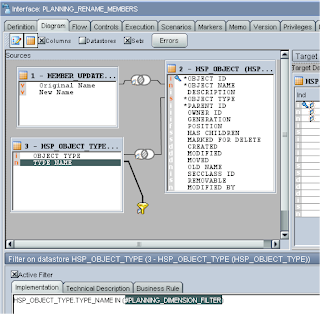

Ok, the first interface we require is one that will map our source records to the target (HSP_OBJECT) and update them accordingly.

Create a new interface and drag the HSP_OBJECT datastore on to the target.

On the source, drag in the datastore that contains the original and new member names; in my case it is called “MEMBER_UPDATE”.

Now drag HSP_OBJECT and HSP_OBJECT_TYPE on to the source.

Create a join between “MEMBER_UPDATE”.“Original Name” and “HSP_OBJECT”.“OBJECT_NAME” (drag “Original Name” on to “OBJECT_NAME”); this will make sure we only update the records that match by name.

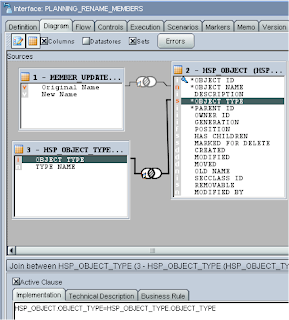

Next create a join between “HSP_OBJECT”.”OBJECT_TYPE” and “HSP_OBJECT_TYPE”.”OBJECT_TYPE”

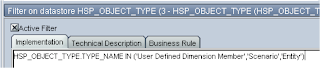

This will let us define which object types to return in the query ODI creates. To make the definition a filter is required, so drag TYPE_NAME on to the source area.

This will only return the type “User Defined Dimension Member”. If you want your interface to deal with more than one type of dimension member, you can easily add more logic to the filter, e.g.

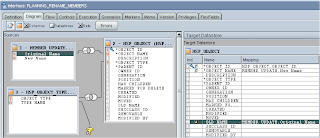

Now we can perform the target mappings, which is straightforward.

OBJECT_ID is mapped to “HSP_OBJECT”.“OBJECT_ID” on the source.

OBJECT_NAME is mapped to “New Name”, as we want this column to hold the new member name.

OLD_NAME is mapped to “Original Name”, as we want this column to be updated with the original member name.

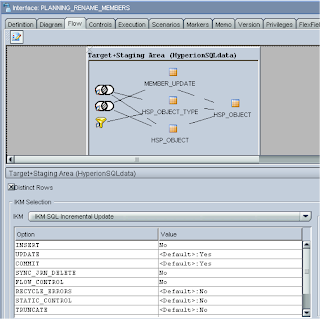

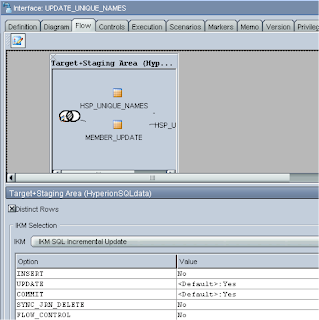

In the flow tab I updated the following parameters for the IKM.

INSERT set to “No” as we are only interested in updating the target, we don’t want to insert any records.

SYNC_JRN_DELETE & FLOW_CONTROL set to “No” as we don’t require this functionality.
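To make it clearer what this first interface ends up doing, here is a minimal sketch of the same logic run against an in-memory SQLite copy of the tables. It is not the SQL the IKM generates; it just follows the same idea of resolving the rows through the joins and the filter, then updating the target by OBJECT_ID. The `MEMBER_UPDATE` column names, the `_NEW` names, the sample ids and the function `rename_members` are all assumptions for the demo.

```python
import sqlite3


def build_demo_db():
    """Cut-down planning tables plus the MEMBER_UPDATE source table."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE HSP_OBJECT_TYPE (OBJECT_TYPE INTEGER PRIMARY KEY, TYPE_NAME TEXT);
        CREATE TABLE HSP_OBJECT (
            OBJECT_ID INTEGER PRIMARY KEY, OBJECT_NAME TEXT,
            OBJECT_TYPE INTEGER, OLD_NAME TEXT
        );
        CREATE TABLE MEMBER_UPDATE (ORIGINAL_NAME TEXT, NEW_NAME TEXT);
        INSERT INTO HSP_OBJECT_TYPE VALUES (50, 'User Defined Dimension Member');
        INSERT INTO HSP_OBJECT_TYPE VALUES (7, 'Form');  -- id 7 is an assumption
        INSERT INTO HSP_OBJECT VALUES (1001, 'DV1000', 50, NULL);
        INSERT INTO HSP_OBJECT VALUES (1002, 'DV2000', 50, NULL);
        INSERT INTO HSP_OBJECT VALUES (1003, 'DV3000', 50, NULL);
        INSERT INTO HSP_OBJECT VALUES (1004, 'DV4000', 50, NULL);
        INSERT INTO HSP_OBJECT VALUES (2001, 'DV1000', 7, NULL);  -- form, same name
        INSERT INTO MEMBER_UPDATE VALUES ('DV1000', 'DV1000_NEW');
        INSERT INTO MEMBER_UPDATE VALUES ('DV2000', 'DV2000_NEW');
        INSERT INTO MEMBER_UPDATE VALUES ('DV3000', 'DV3000_NEW');
        INSERT INTO MEMBER_UPDATE VALUES ('DV4000', 'DV4000_NEW');
    """)
    return conn


def rename_members(conn, type_name):
    """Update OBJECT_NAME / OLD_NAME for matching members; return the row count."""
    # Source side of the interface: join by name, filter by object type.
    rows = conn.execute("""
        SELECT o.OBJECT_ID, m.NEW_NAME, m.ORIGINAL_NAME
        FROM MEMBER_UPDATE m
        JOIN HSP_OBJECT o ON o.OBJECT_NAME = m.ORIGINAL_NAME
        JOIN HSP_OBJECT_TYPE t ON t.OBJECT_TYPE = o.OBJECT_TYPE
        WHERE t.TYPE_NAME = ?
    """, (type_name,)).fetchall()
    # Target side: update only, keyed on OBJECT_ID, never insert.
    conn.executemany(
        "UPDATE HSP_OBJECT SET OBJECT_NAME = ?, OLD_NAME = ? WHERE OBJECT_ID = ?",
        [(new_name, old_name, object_id) for object_id, new_name, old_name in rows],
    )
    return len(rows)
```

With the sample data the function reports four updates and leaves the form untouched, which mirrors what the interface should do with INSERT set to “No”.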

That is the first interface complete and we now need a similar interface that will update the HSP_UNIQUE_RECORDS table.

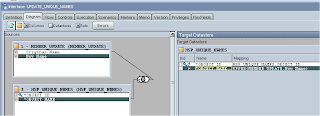

Create a new interface; drag the HSP_UNIQUE_RECORDS datastore to the target and source as well as the source member information (“MEMBER_UPDATE”)

Create a join between “MEMBER_UPDATE”.”Original_Name” and “HSP_UNIQUE_NAMES”.”OBJECT_NAME” as this will focus on only member names we want to update.

You will notice I have used the UPPER function (UCASE in Oracle), as the member information in HSP_UNIQUE_NAMES is all in uppercase so I need to convert my source member information to Uppercase before I map. I know in my example all the member names are in uppercase but they may not be in future.

The target mapping is straightforward again.

OBJECT_ID is mapped to “HSP_UNIQUE_NAMES”."OBJECT_ID"

OBJECT_NAME is mapped to “MEMBER_UPDATE”.”New Name” and converted to uppercase.

The IKM options are set up like the previous interface.
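The second interface can be sketched in the same way. The snippet below is only an approximation of the logic, run against an in-memory SQLite stand-in, and not the statements ODI generates. It shows why both sides of the mapping are uppercased. The `MEMBER_UPDATE` column names, the mixed-case sample names and the function `rename_unique_names` are assumptions for the demo.

```python
import sqlite3


def build_demo_db():
    """Stand-ins for HSP_UNIQUE_NAMES and the MEMBER_UPDATE source table."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE HSP_UNIQUE_NAMES (
            OBJECT_ID   INTEGER PRIMARY KEY,
            OBJECT_NAME TEXT
        );
        CREATE TABLE MEMBER_UPDATE (ORIGINAL_NAME TEXT, NEW_NAME TEXT);
        INSERT INTO HSP_UNIQUE_NAMES VALUES (1001, 'DV1000');
        INSERT INTO HSP_UNIQUE_NAMES VALUES (1002, 'DV2000');
        INSERT INTO HSP_UNIQUE_NAMES VALUES (1003, 'OTHER');
        -- source names deliberately in mixed case
        INSERT INTO MEMBER_UPDATE VALUES ('dv1000', 'Dv1000_New');
        INSERT INTO MEMBER_UPDATE VALUES ('DV2000', 'DV2000_NEW');
    """)
    return conn


def rename_unique_names(conn):
    """Update HSP_UNIQUE_NAMES with the uppercased new names; return the row count."""
    # Join on the uppercased original name, as the stored names are uppercase.
    rows = conn.execute("""
        SELECT u.OBJECT_ID, UPPER(m.NEW_NAME)
        FROM MEMBER_UPDATE m
        JOIN HSP_UNIQUE_NAMES u ON u.OBJECT_NAME = UPPER(m.ORIGINAL_NAME)
    """).fetchall()
    # Update only, keyed on OBJECT_ID.
    conn.executemany(
        "UPDATE HSP_UNIQUE_NAMES SET OBJECT_NAME = ? WHERE OBJECT_ID = ?",
        [(new_name, object_id) for object_id, new_name in rows],
    )
    return len(rows)
```

Without the UPPER on the join the mixed-case source row would simply not match, and the rename would be applied to HSP_OBJECT but not here.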

So I now have two interfaces that read the source member table and apply the new member names to both of the target datastores.

Both interfaces run successfully with updates = 4, which is correct.

A quick query on the planning tables and all looks good as the new member names are in place.
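If you would rather script that check than eyeball it, something along these lines would do. It is a sketch against an in-memory SQLite stand-in, and the `MEMBER_UPDATE` column names, the sample rows and the function `unapplied_renames` are assumptions. It lists any new name that is missing from HSP_OBJECT or from HSP_UNIQUE_NAMES.

```python
import sqlite3


def build_demo_db():
    """Tables as they might look after the two interfaces have run."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE HSP_OBJECT (
            OBJECT_ID INTEGER PRIMARY KEY, OBJECT_NAME TEXT,
            OBJECT_TYPE INTEGER, OLD_NAME TEXT
        );
        CREATE TABLE HSP_UNIQUE_NAMES (OBJECT_ID INTEGER PRIMARY KEY, OBJECT_NAME TEXT);
        CREATE TABLE MEMBER_UPDATE (ORIGINAL_NAME TEXT, NEW_NAME TEXT);
        INSERT INTO MEMBER_UPDATE VALUES ('DV1000', 'DV1000_NEW');
        INSERT INTO MEMBER_UPDATE VALUES ('DV2000', 'DV2000_NEW');
        INSERT INTO HSP_OBJECT VALUES (1001, 'DV1000_NEW', 50, 'DV1000');
        INSERT INTO HSP_OBJECT VALUES (1002, 'DV2000_NEW', 50, 'DV2000');
        INSERT INTO HSP_UNIQUE_NAMES VALUES (1001, 'DV1000_NEW');
        -- simulate the second interface having missed this row
        INSERT INTO HSP_UNIQUE_NAMES VALUES (1002, 'DV2000');
    """)
    return conn


def unapplied_renames(conn):
    """Return new names not yet present in both HSP_OBJECT and HSP_UNIQUE_NAMES."""
    sql = """
        SELECT m.NEW_NAME
        FROM MEMBER_UPDATE m
        LEFT JOIN HSP_OBJECT o
               ON o.OBJECT_NAME = m.NEW_NAME
              AND o.OLD_NAME = m.ORIGINAL_NAME
        LEFT JOIN HSP_UNIQUE_NAMES u
               ON u.OBJECT_ID = o.OBJECT_ID
              AND u.OBJECT_NAME = UPPER(m.NEW_NAME)
        WHERE o.OBJECT_ID IS NULL OR u.OBJECT_ID IS NULL
        ORDER BY m.NEW_NAME
    """
    return [row[0] for row in conn.execute(sql)]
```

An empty result means the two tables agree with the source table; anything returned points at a rename that only half happened.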

This is where the blog could end, but I am not so lucky. If you look in planning the original member names are still there; back with a vengeance is the planning cache, which still has the original names stored in it.

There are a few options. The easiest is just to restart planning so the cache is rebuilt, but I don’t want to take that route, as it may not be convenient to restart the planning web app each time a member is renamed.

If you run a planning refresh, the planning cache for member information is also refreshed, so I could create an interface that loads one record that already exists into the planning application and use the KM option “REFRESH_DATABASE” set to Yes. This is fine, but I want to be able to use my renaming interfaces again and again, and I don’t want to have to load a dummy record each time.

Ok, I could call the cube refresh utility by creating a procedure and using an OS command.

What I need is an interface that just runs a refresh, is this possible? Well, after too much time spent digging around the java code trying to understand if it could be done I have come up with a solution and here is how it can be done.

Right click the KM “IKM SQL to Hyperion Planning” and select duplicate; I want to keep my original KM, which is why I am creating a copy.

In the details tab delete the following commands, as they are not required.

“Lock journalized table”

“Load data into planning”

“Report Statistics”

“Cleanup journalized table”

Edit the command “Prepare for Loading”, you can rename it if you like.

Most of the code stays pretty much the same; I have just imported a couple of extra classes from the planning adaptor and used them later in the code.

Here is the complete code if you want to copy and paste it in.

```python
from java.util import HashMap
from java.lang import Boolean
from java.lang import Integer
from com.hyperion.odi.common import ODIConstants
# new imports
from com.hyperion.odi.planning import ODIPlanningWriter
from com.hyperion.odi.planning import ODIPlanningConnection

#
# Target planning connection properties
#
serverName = "<%=odiRef.getInfo("DEST_DSERV_NAME")%>"
userName = "<%=odiRef.getInfo("DEST_USER_NAME")%>"
password = "<%=odiRef.getInfo("DEST_PASS")%>"
application = "<%=odiRef.getInfo("DEST_CATALOG")%>"

srvportParts = serverName.split(':',2)
srvStr = srvportParts[0]
portStr = srvportParts[1]

#
# Put the connection properites and initialize the planning loader
#
targetProps = HashMap()
targetProps.put(ODIConstants.SERVER,srvStr)
targetProps.put(ODIConstants.PORT,portStr)
targetProps.put(ODIConstants.USER,userName)
targetProps.put(ODIConstants.PASSWORD,password)
targetProps.put(ODIConstants.APPLICATION_NAME,application)

print "Initalizing the planning wrapper and connecting"

dimension = "<%=snpRef.getTargetTable("RES_NAME")%>"
loadOrder = 0
sortParentChild = 0
logEnabled = <%=snpRef.getOption("LOG_ENABLED")%>
logFileName = r"<%=snpRef.getOption("LOG_FILE_NAME")%>"
maxErrors = 0
logErrors = <%=snpRef.getOption("LOG_ERRORS")%>
cubeRefresh = 1
errFileName = r"<%=snpRef.getOption("ERROR_LOG_FILENAME")%>"
errColDelimiter = r"<%=snpRef.getOption("ERR_COL_DELIMITER")%>"
errRowDelimiter = r"<%=snpRef.getOption("ERR_ROW_DELIMITER")%>"
errTextDelimiter = r"<%=snpRef.getOption("ERR_TEXT_DELIMITER")%>"
logHeader = <%=snpRef.getOption("ERR_LOG_HEADER_ROW")%>

# set the load options
loadOptions = HashMap()
loadOptions.put(ODIConstants.SORT_IN_PARENT_CHILD, Boolean(sortParentChild))
loadOptions.put(ODIConstants.LOAD_ORDER_BY_INPUT, Boolean(loadOrder))
loadOptions.put(ODIConstants.DIMENSION, dimension)
loadOptions.put(ODIConstants.LOG_ENABLED, Boolean(logEnabled))
loadOptions.put(ODIConstants.LOG_FILE_NAME, logFileName)
loadOptions.put(ODIConstants.MAXIMUM_ERRORS_ALLOWED, Integer(maxErrors))
loadOptions.put(ODIConstants.LOG_ERRORS, Boolean(logErrors))
loadOptions.put(ODIConstants.ERROR_LOG_FILENAME, errFileName)
loadOptions.put(ODIConstants.ERR_COL_DELIMITER, errColDelimiter)
loadOptions.put(ODIConstants.ERR_ROW_DELIMITER, errRowDelimiter)
loadOptions.put(ODIConstants.ERR_TEXT_DELIMITER, errTextDelimiter)
loadOptions.put(ODIConstants.ERR_LOG_HEADER_ROW, Boolean(logHeader))
loadOptions.put(ODIConstants.REFRESH_DATABASE, Boolean(cubeRefresh))

# connect to planning and set parameters
odiPC = ODIPlanningConnection(targetProps)
ODIPlanWrite = ODIPlanningWriter(odiPC)
ODIPlanWrite.beginLoad(loadOptions)

# run refresh with or without filters
odiPC.getWrapper().runCubeRefresh(Boolean("false"), Boolean(<%=odiRef.getOption("REFRESH_FILTERS")%>))

# clean up
ODIPlanWrite.endLoad()

print "Planning refresh completed"
```

I left in all the logging options; they won’t really tell you much other than that the connection to planning was successful.
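One small thing to be aware of in the KM code: it splits the data server name on “:” and then reads the second part, so a server name entered in the topology without a port will fail with an index error. If you wanted to guard against that, the parsing could be pulled into a helper along these lines. This is only a sketch; `split_server_port` and its `default_port` argument are my own invention and not part of the KM or the planning adaptor, and the host name in the usage example is made up.

```python
def split_server_port(server_name, default_port=None):
    """Split 'host:port' into (host, port) strings, with a clear error if no port."""
    host, sep, port = server_name.partition(":")
    if not host:
        raise ValueError("no host in data server name %r" % server_name)
    if not sep or not port:
        # No ':' at all, or nothing after it.
        if default_port is None:
            raise ValueError("no port in data server name %r" % server_name)
        port = str(default_port)
    return host, port
```

For example, `split_server_port("planhost:11333")` gives back the host and port as two strings, which could then be put into `targetProps` in place of `srvStr` and `portStr`.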

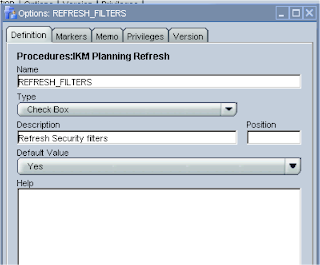

I also added to the code the option to refresh filters or not, which takes me on to the options; some of them I removed because they are not required any more.

I added the following option :- REFRESH_FILTERS

I deleted the following options :-

LOAD_ORDER_BY_INPUT

SORT_PARENT_CHILD

MAXIMUM_ERRORS_ALLOWED

REFRESH_DATABASE

To save you time on creating the KM, you can download it from here.

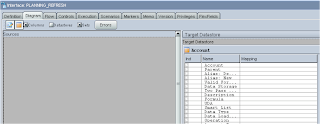

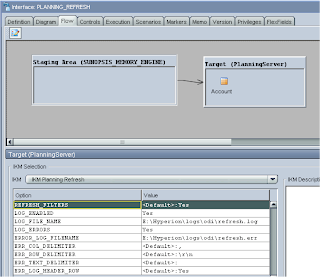

To use the refresh KM you just need to create an interface and set a staging area. I usually use the Sunopsis memory engine, as no processing is done in the staging area; it is only there so the interface will validate.

Drag any planning dimension datastore on to the target, it doesn’t matter which dimension, it is just being used to generate the correct connection details to the planning application.

In the IKM options you can define whether or not to refresh the security filters. I doubt you will see anything being generated in the error log, so I probably could have removed the error logging options.

Now you have an interface that can be used again and again for refreshing planning. Changing which planning app it refreshes is also simple: either drag a different datastore on to the target or just update the physical connection information in the topology manager.

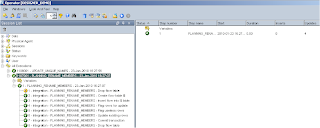

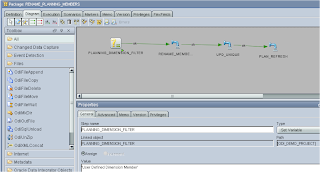

To put it all together I created a package.

As you can see I added a variable that I set to define the planning dimension type of the members that will be renamed.

I updated the renaming interface to use the variable; this gives the flexibility to quickly change the dimension I will be renaming members for, or just to include all the dimension types.

The complete flow is :- Set Planning Dimension variable, Rename Members interface, Update unique members table interface and finally a planning refresh.

The interfaces can easily be used for different planning applications by either dragging a different datastore to the targets or by updating the physical connection information in the topology manager.

Executing the package will complete the process for renaming members, and a quick check in planning shows it was successful.

So in the end I have a solution that can be easily ported to any environment where I need to rename members.

As usual I thought this blog would be really quick to write up, it always seems quick in my mind until I go through it all step by step.

Next time I will go through the alternative method of achieving the same end game but without having to go directly to the planning tables.