- Load metadata and data

- Extract data

- Consolidate data

- Enumerate members of member lists

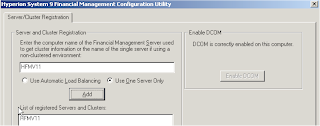

The objective today is load metadata information from a flat file into the Account dimension of an HFM application, if you have loaded metadata using any of the other Hyperion adaptors then you will see no difference in using the HFM adaptor and the process is straightforward, in fact this example I am going to go through couldn’t get much simpler.

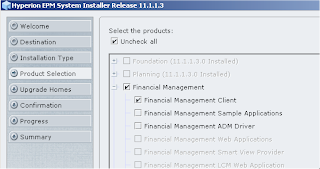

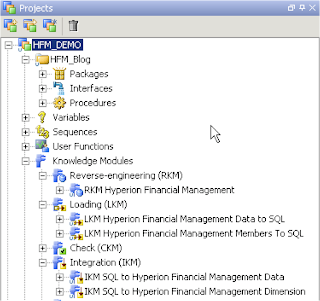

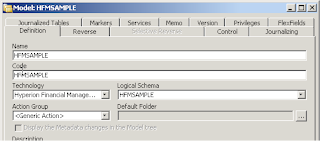

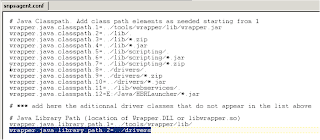

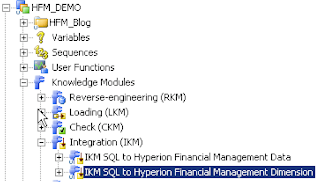

You will need to make sure you have imported in the KM - IKM SQL to Hyperion Financial management Dimension.

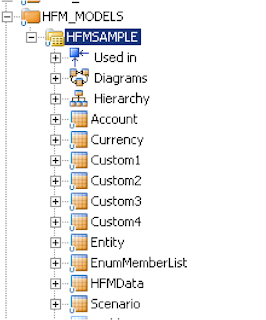

With the KM you are able to load metadata in dimensions Account, Currency, Custom 1-4, Entity and Scenario.

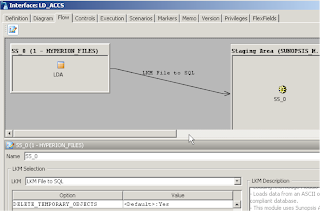

As I will be loading from a flat file you will also need to have imported in the KM – LKM File to SQL

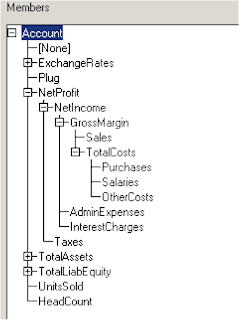

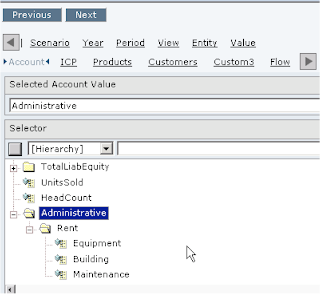

Currently I have the following hierarchy in my sample HFM application (this hierarchy was loaded from the Simple Demo files that are available in the HFM installation directory)

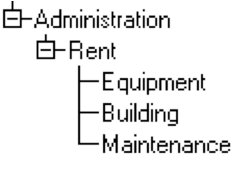

In my example I want to load the following expense members as a child of Account.

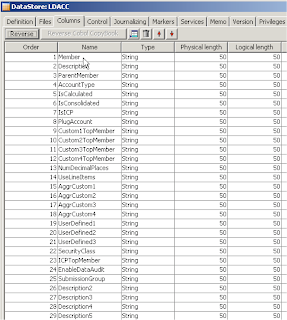

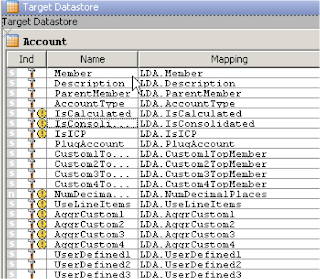

If you have a look at the Account datastore in the Designer you will see all the available properties that can be set when loading in metadata.

I am not going to go through what each of the columns means, as this is all available in the HFM documentation, if you have a read here you will find the details for each of the properties on the Account dimension, the documentation also contains information on the other dimensions.

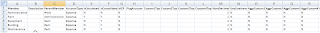

Now I created a file template with the exact same column headers as the datastore columns, I find it easier to match the column headers as when you have auto mapping enabled in ODI it will set all your target mapping for you without having to manually set them up. You don’t have to create a template with all the columns if you don’t like, most of the time you might never use some of the columns so it is up to you if you include them in your file.

The file was populated with all the metadata information.

Once you the file is completed then it is on to defining the file model and datastore in the designer.

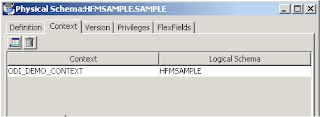

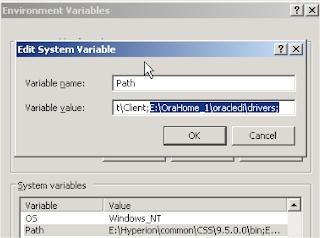

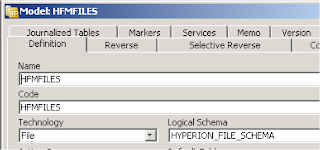

Create a new model of file technology and use a logical schema that points to the correct location of where the file will be stored. I am using a logical schema set up in previous blogs and just points a directory where most of the flat files I use are stored.

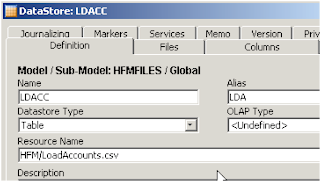

Next a new DataStore is inserted under the model, the file is manually selected using the browse functionality as the file is sitting on the same machine as where ODI is installed.

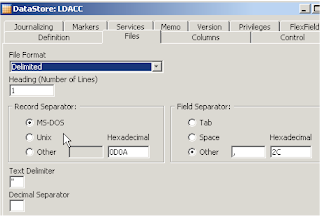

If you looked at the image of the file I am using you will notice it was a CSV and has heading so I set the file format as Delimited, the Heading number of Lines is 1 and the field separator is set to comma.

Reversing the file returns and stores all the columns in the datastore against the header columns in the csv file. After reversing you may need to change length of the columns, I have just left them set to the default for the example.

I usually set flat file types to String and then if I need to convert them to a different type I do it on the mappings of an interface, I do this because I find I have more success and encounter less when loading from files.

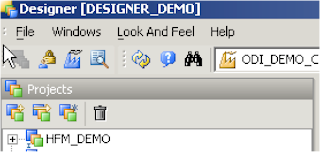

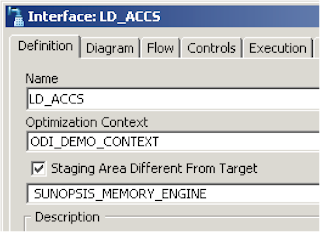

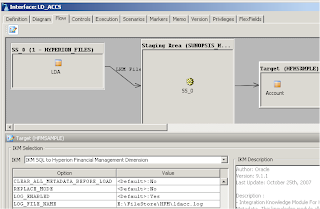

Right on to creating the interface to load the load from the file into the HFM application.

I am just using the SUNOPSIS_MEMORY_ENGINE as the staging area, this is because the volume of data from the flat file is very small, I would think that when loading metadata into a HFM then the volume would usually be pretty low.

You will always need to set a staging area as the target (HFM) can not be used to transform data as it has not SQL capability.

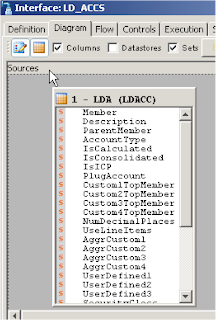

Drag the file source datasource on to the source of the diagram.

Drag the HFM Account DataStore on to the target of the diagram, as the columns on my source match the target all the columns are auto-mapped.

The warnings are just done to column length difference between source and target; if your source data is consistent then you don’t need to worry about it otherwise you could substring the length of the data in the target mapping or change the length in the source file datastore.

The default settings are kept for the LKM.

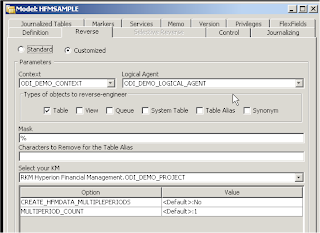

The IKM only has a few options

CLEAR_ALL_METADATA_BEFORE_LOAD - All dimension members and corresponding data, journals, and intercompany transactions in the application database are deleted.

REPLACE_MODE -

No= Merge - If a dimension member exists in the load file and in the application database, then the member in the database is replaced with the member from the load file. If the database has other dimension members that are not referenced in the load file, the members in the database are unchanged.

Yes = Replace - All dimension members in the application database are deleted and the members from the load file are put into the database.

In this example I am just doing a simple load for a few members so all the default settings are kept. I enabled the log and set the full path and filename.

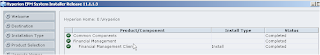

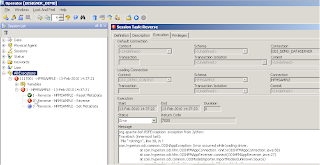

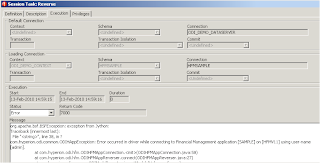

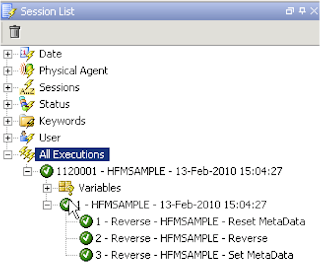

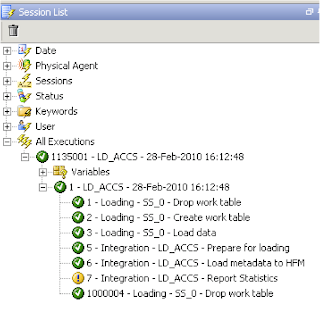

After executing the scenario check the operator to make sure it was successful.

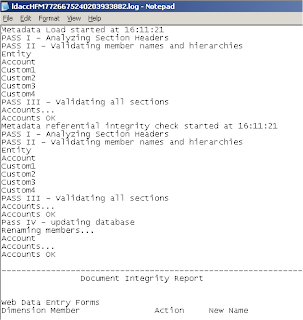

In the log file you will notice another log is generated, the log is the equivalent of what the HFM client produces, it is a validation of the source data.

If the interface fails then the additional log is the place you should check to find out the reason why the validation failed, I have no idea how the numeric code in the log filename relates to anything, it doesn’t look like a timestamp, maybe it is just created so the file will be unique.

In the HFM application you should now be able to select the new members that have been loaded. So there we have it a really simple example of loading metadata into HFM.

As I said in the first HFM blog I am going to keep it to the basics just to get people up and running with the different functionality available with the HFM adaptor.