I was hoping to write up this second part of the Essbase 12c for BI much sooner but I just couldn’t find the time.

In the last post I covered the main noticeable changes to Essbase in 12c, which is currently only available bundled with OBIEE. In this post I want to continue the theme and cover how clustering Essbase differs in 12c now that OPMN is no more.

I know this topic will not appeal to everyone, but it is worth looking into how Essbase is clustered in OBIEE 12c as it is a glimpse into how it may function in EPM 12c. Nobody knows for sure when 12c will be available for EPM, and Oracle seem to be focused on only one thing at the moment, so it may be a long wait.

In 11.x Essbase was clustered in an active/passive configuration and managed by OPMN, which was mainly aimed at *nix type deployments. For Windows you had the additional option of using Failover Clusters, though annoyingly OPMN was still in the mix to manage the agent process.

I think OPMN divided opinion, and personally I never found it great for managing Essbase. If you were not clustering Essbase, or were clustering with Windows, it didn’t really make much sense for it to be there and it ended up causing more confusion than anything.

In 12c OPMN has been dropped and everything is now managed by the WebLogic framework. As I covered in the last part, Essbase is deployed as a WebLogic managed service and the Essbase Java agent replaces the C-based one, though there are no changes to the Essbase server.

In 12c the Essbase agent is still using an active-passive clustering topology using a WebLogic singleton service, so if you were expecting active-active then you will be disappointed.

A singleton service is a service running on a managed server that is available on only one member of a cluster at a time. WebLogic Server allows you to automatically monitor and migrate singleton services from one server to another.

This is what the documentation has to say about clustering and failover for Essbase 12c.

High availability support for Essbase is a function of the WebLogic Server interface on which the Essbase Java Agent runs. The agent and the Essbase Server continually register “heartbeats” with the database, to confirm that it is still actively running. If a server process does not return a regular heartbeat, the agent assumes there is a problem, and terminates the server process.

Essbase failover uses WebLogic Server to solve the problem of split-brain. A split-brain situation can develop when both instances in an active-passive clustered environment think they are “active” and are unaware of each other, which violates the basic premise of active-passive clustering. Situations where split-brain can occur include network outages or partitions.

Lease management built into WebLogic Server ensures exclusivity of instance control. There are two types of cluster migration techniques you can select:

Consensus leasing - This is the default type for the WebLogic Server cluster on which Essbase is deployed.

Database leasing

It is worth picking up on a few of the points made in the statement above. As the Essbase agent operates in an active/passive configuration, it uses a singleton service so that it is only active on one member of a WebLogic cluster at a time, and this is managed using consensus leasing.

To try and explain WebLogic leasing, here are some more excerpts from the documentation:

Leasing is the process WebLogic Server uses to manage services that are required to run on only one member of a cluster at a time. Leasing ensures exclusive ownership of a cluster-wide entity. Within a cluster, there is a single owner of a lease. Additionally, leases can failover in case of server or cluster failure. This helps to avoid having a single point of failure.

Consensus leasing requires that you use Node Manager to control servers within the cluster. Node Manager should be running on every machine hosting Managed Servers within the cluster.

In Consensus leasing, there is no highly available database required. The cluster leader maintains the leases in-memory. All the servers renew their leases by contacting the cluster leader, however, the leasing table is replicated to other nodes of the cluster to provide failover.

The cluster leader is elected by all the running servers in the cluster. A server becomes a cluster leader only when it has received acceptance from the majority of the servers.
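To make the majority rule in that last excerpt concrete, here is a toy sketch in Python. It only illustrates the arithmetic of "acceptance from the majority of the servers"; it is not how WebLogic implements the election, and the function name is my own invention.

```python
def wins_election(acceptances: int, running_servers: int) -> bool:
    """Return True when a candidate has acceptance from a strict majority.

    Toy illustration only: 'acceptances' is how many running servers
    accepted the candidate, 'running_servers' is how many took part.
    """
    if running_servers <= 0:
        raise ValueError("running_servers must be positive")
    # A strict majority means more than half of the running servers.
    return acceptances > running_servers // 2
```

With three running servers, two acceptances are enough; with two running servers, both have to agree.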

Essbase also has new functionality in 12c to register heartbeats into a set of database tables to confirm that the agent and server are actively running.

After starting the Essbase agent you will see the following entry in the log to confirm the heartbeat has started.

[SRC_CLASS: oracle.epm.jagent.net.nio.mina.server.NetNioCapiServerMain] [SRC_METHOD: startAgentHeartBeatDaemonThread] agent.heartbeat.thread.started

There is a new Essbase configuration file setting that controls the interval of the heartbeat.

HEARTBEATINTERVAL

Sets the interval at which the Essbase Java Agent and the Essbase Server check to confirm that the database is running.

The default interval is 10 seconds.

Syntax

HEARTBEATINTERVAL n

The interval is set to 20 seconds in the essbase.cfg when Essbase is deployed in OBIEE 12c.
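If you want to check which interval a given essbase.cfg will actually result in, a few lines of Python will do it. This is only a sketch: it assumes the `HEARTBEATINTERVAL n` syntax shown above, falls back to the documented default of 10 seconds when the setting is absent, and treats lines starting with `;` as comments. The function name is hypothetical.

```python
DEFAULT_HEARTBEAT_SECONDS = 10  # documented default when the setting is absent


def heartbeat_interval(cfg_text: str) -> int:
    """Return the HEARTBEATINTERVAL value found in essbase.cfg text.

    Assumes one setting per line in the form 'HEARTBEATINTERVAL n' and
    that lines beginning with ';' are comments.
    """
    for raw_line in cfg_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(";"):
            continue  # skip blank lines and comments
        parts = line.split()
        if parts[0].upper() == "HEARTBEATINTERVAL" and len(parts) >= 2:
            return int(parts[1])
    return DEFAULT_HEARTBEAT_SECONDS
```

Feeding it the text of the essbase.cfg from an OBIEE 12c deployment should return 20, going by the value mentioned above.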

There are two tables that are part of the heartbeat, one for the agent and one for the server.

The agent runtime table holds information on the current active host and includes a last modified date column, which is updated every n seconds depending on the heartbeat interval setting.

The server runtime table holds information on all the active applications, and its last modified date is updated in line with the heartbeat interval.

There are two views which additionally calculate the number of seconds since the last heartbeat.
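The extra column in those views is simple arithmetic, and it can be sketched in Python as below. The row layout and the key names (`host`, `last_modified`, `seconds_since_heartbeat`) are made up for illustration; I am not reproducing the real table or view definitions here.

```python
from datetime import datetime
from typing import Dict, List


def seconds_since_heartbeat(last_modified: datetime, now: datetime) -> int:
    """Whole seconds elapsed between the last heartbeat and 'now'."""
    return int((now - last_modified).total_seconds())


def with_heartbeat_age(rows: List[Dict], now: datetime) -> List[Dict]:
    """Return copies of the rows with a 'seconds_since_heartbeat' value added.

    Each row is expected to carry a 'last_modified' datetime (a
    hypothetical key standing in for the last modified date column).
    """
    return [
        {**row, "seconds_since_heartbeat": seconds_since_heartbeat(row["last_modified"], now)}
        for row in rows
    ]
```

With a 20 second heartbeat interval you would expect the value to stay at or below roughly 20 while the agent or server is healthy.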

If an Essbase application crashes and the esssvr process is lost then you will see the following entries in the log:

[SRC_CLASS: oracle.epm.jagent.process.heartbeat.ServerHeartbeatHarvestor] [SRC_METHOD: harvest] server.heartbeat.missed

[SRC_CLASS: oracle.epm.jagent.process.heartbeat.ServerHeartbeatHarvestor] [SRC_METHOD: harvest] removed.pathalogical.server

The server runtime table will then be updated to remove the server entry.
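Conceptually, what the log entries describe looks something like the Python sketch below: compare each server's last heartbeat with the current time and drop the ones that have gone quiet. This is my own simplification, not the agent's code. In particular the `allowed_misses` threshold is a hypothetical parameter, as I do not know how many missed heartbeats the agent tolerates.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple


def harvest(
    servers: Dict[str, datetime],
    now: datetime,
    interval_seconds: int,
    allowed_misses: int = 2,  # hypothetical threshold, not a documented value
) -> Tuple[Dict[str, datetime], List[str]]:
    """Split servers into those still alive and those to be removed.

    'servers' maps an application name to its last heartbeat time. A
    server is removed once its heartbeat is older than
    interval_seconds * allowed_misses.
    """
    limit = timedelta(seconds=interval_seconds * allowed_misses)
    alive = {app: beat for app, beat in servers.items() if now - beat <= limit}
    removed = sorted(app for app in servers if app not in alive)
    return alive, removed
```

The first element of the result plays the part of the server runtime table after the clean-up, and the second lists the entries that were removed.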

If the agent crashes and there are active applications, then the Essbase application logs will contain the entry:

Essbase agent with ID [PRIMODIAL_AGENT_ID], is not likely to be running because there is no heart beat since [56] seconds. This server instance will be gracefully shutdown.

Each active Essbase server process will be stopped and records removed from the server runtime table.

Right, now on to clustering Essbase. As Essbase is embedded in the BI managed server, this means scaling out by adding a new machine to the domain, ending up with the following architecture.

Usually you would then front the managed servers with an HTTP server like OHS or a load balancer.

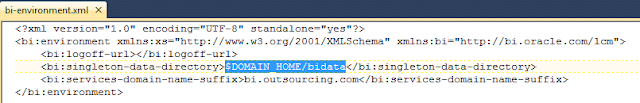

From the diagram you can see that there is a singleton data directory, which by default is DOMAIN_HOME/bidata.

In terms of Essbase, this is the equivalent of the ARBORPATH location.

Now that Essbase is going to be clustered across two machines, the directory will need to be accessible from both nodes. For Essbase you want the shared file system to be running on the fastest possible storage.

The singleton data directory (SDD) is defined in:

DOMAIN_HOME/config/fmwconfig/bienv/core/bi_environment.xml

The directory should be updated to point to the shared file system, and the contents of the bidata directory copied to the shared directory.
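The copy itself can be done however you like, but as a rough sketch in Python it could look like this. The function name is my own, the paths are whatever applies in your environment, and I am assuming the BI components are stopped while the copy runs.

```python
import shutil
from pathlib import Path


def copy_sdd(local_bidata: Path, shared_sdd: Path) -> int:
    """Copy the bidata tree to the shared location.

    Returns the number of files present under the shared directory
    afterwards, as a quick sanity check.
    """
    # dirs_exist_ok lets the copy run into a directory that already
    # exists (for example a freshly created mount point); Python 3.8+.
    shutil.copytree(local_bidata, shared_sdd, dirs_exist_ok=True)
    return sum(1 for path in shared_sdd.rglob("*") if path.is_file())
```

You would call it with the existing DOMAIN_HOME/bidata as the first argument and the new shared location as the second.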

Next, install OBIEE 12c on the second node using the same ORACLE_HOME.

On the master node there is a script available which clones the machine in the BI domain to a new machine and then packs the domain.

DOMAIN_HOME/bitools/bin/clone_bi_machine.sh|cmd [-m]

This will create a template archive (.jar) that contains a snapshot of the BI domain.

Copy the template archive to the second node and run the unpack command to create the DOMAIN_HOME

unpack.sh|cmd -domain=DOMAIN_HOME -nodemanager_type=PerDomainNodeManager

For example:

Re-synchronize the data source on the new machine by running the script:

DOMAIN_HOME/bitools/bin/sync_midtier_db.cmd

On the second node start Node Manager, and on the master node start the BI components. All done.

In the WL admin console you should see multiple machines, each with a BI managed server running on it that is part of the BI cluster.

From an Essbase perspective the web application should be running on both nodes in the cluster but the agent will only be active on one of them.

So how do you know which is the active node? Well, there are a number of ways.

You could check the jagent.log and look for the agent startup entry:

[SRC_CLASS: oracle.epm.jagent.net.nio.mina.server.NetNioCapiServerMain] [SRC_METHOD: startMain] Oracle Essbase Jagent 12.2.1.0.000.150 started on 9799 at Sun Nov 29 14:51:33 GMT 2015
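If you would rather not eyeball the log on each node, the startup entry is easy enough to pick out with a script. The Python below is a sketch based purely on the format of the line above; the regular expression and function name are mine, and the time zone token (GMT here) is ignored rather than interpreted.

```python
import re
from datetime import datetime
from typing import Optional, Tuple

# Matches e.g. "... Oracle Essbase Jagent 12.2.1.0.000.150 started on 9799
# at Sun Nov 29 14:51:33 GMT 2015" and captures port, timestamp and year.
START_RE = re.compile(
    r"Oracle Essbase Jagent \S+ started on (\d+) at "
    r"\w{3} (\w{3} \d{1,2} \d{2}:\d{2}:\d{2}) \w+ (\d{4})"
)


def last_agent_start(log_text: str) -> Optional[Tuple[int, datetime]]:
    """Return (port, start time) of the most recent agent start, or None."""
    matches = START_RE.findall(log_text)
    if not matches:
        return None
    port, stamp, year = matches[-1]  # last entry in the log wins
    # Note: %b expects English month abbreviations under the default locale.
    return int(port), datetime.strptime(f"{stamp} {year}", "%b %d %H:%M:%S %Y")
```

Running this against the jagent.log on each node and comparing the timestamps should point to the node where the agent started most recently.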

You could check the Essbase runtime database table or view.

There is also the discovery URL, which is used when connecting to a clustered Essbase instance by methods such as MaxL.

If you have been involved in Essbase clustering in 11.x then you will have used a similar method going via APS.

The discovery URL will be available on each active managed server node.

Usually you would have an HTTP server or load balancer in front of the managed servers so only one URL is required.

The discovery URL works by querying the agent runtime table to find the active node, so when using MaxL you would connect to the URL and then be directed to the active agent.

To mimic what MaxL is doing, all you need to do is post a username and password to the agent servlet.

For example, you can post these using a REST browser plugin. The request also requires a header GET_ACTIVE_ESSBASE_NODE with a value of ON.

The response will include the server and port of the active Essbase agent.
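The same request can be put together in a few lines of Python instead of a browser plugin. Treat this as a sketch with assumptions: the URL is whatever your discovery URL is, the form field names `username` and `password` are my guess at what the servlet expects, and the only part taken as given is the GET_ACTIVE_ESSBASE_NODE header with a value of ON.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_discovery_request(url: str, username: str, password: str) -> Request:
    """Build the POST that asks the agent servlet for the active node.

    The form field names are hypothetical; adjust them to match what
    the servlet actually expects.
    """
    body = urlencode({"username": username, "password": password}).encode("utf-8")
    request = Request(url, data=body, method="POST")
    # urllib normalises the header name's casing when storing it; HTTP
    # header names are case-insensitive.
    request.add_header("GET_ACTIVE_ESSBASE_NODE", "ON")
    return request


def find_active_node(url: str, username: str, password: str, timeout: float = 10.0) -> str:
    """Send the request and return the raw response body as text."""
    with urlopen(build_discovery_request(url, username, password), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `find_active_node` with the discovery URL and valid credentials should hand back the response containing the server and port of the active agent. I have left the response unparsed as its exact format is not shown here.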

If you look at the jagent log then you will see the request to return the active node:

[SRC_CLASS: oracle.epm.jagent.servlet.EssbaseDiscoverer] [SRC_METHOD: getActiveJAgentNodeFromDB] essbase.active.node.found

There is another method to find the active node through the WL admin console, but I will get on to that shortly as it requires additional configuration.

Right, so let us test failover of the Essbase agent. Currently the agent is running on FUSION12 and I want to fail over to OBINODE2.

The BI managed server is shut down on FUSION12.

Check the jagent.log:

[SRC_CLASS: oracle.epm.jagent.net.nio.mina.server.NetNioCapiServerMain] [SRC_METHOD: startMain] Oracle Essbase Jagent 12.2.1.0.000.150 started on 9799 at Sun Nov 29 16:08:24 GMT 2015

The agent runtime database view:

The discovery URL:

So each method confirms the Essbase agent has failed over.

This is fine, but what if you want both managed servers to be active and only want to move the active Essbase agent across servers?

This can be done within the WL admin console using the Singleton Service migrate functionality. The problem is that this is not configured by default, so I had to add the configuration in the console.

Once configured it is possible to migrate the agent across servers.

Simply select the server to migrate to.

The agent should now be active on bi_server1 – FUSION12.

Once again, this can be confirmed using the various methods.

jagent.log:

[SRC_CLASS: oracle.epm.jagent.net.nio.mina.server.NetNioCapiServerMain] [SRC_METHOD: startMain] Oracle Essbase Jagent 12.2.1.0.000.150 started on 9799 at Sun Nov 29 16:33:55 GMT 2015

Agent runtime database view:

Discovery URL:

I was also looking for an automated way of migrating the singleton service. I found the MBean responsible for it, but it looks more complex than I first thought it would be.

I am sure it is possible by some means of scripting and one day I might actually look into it in more detail, but for now I have not got the time or energy.

So that is my Sunday gone, I hope you found this post useful, why oh why do I bother :)
