Back again to continue looking at what FDMEE has to offer in terms of Web Services. In the last part I looked at what is currently available in the on-premise world of FDMEE, covering the Web Services that use the SOAP protocol and a possible alternative of accessing the Java web servlets.

Today I am going to look at what is available using the FDMEE REST web service which will be focusing on PBCS as unfortunately this option is not currently available for on-premise, maybe this will change in the next PSU or further down the line, we will have to wait and see.

Update December 2016

REST functionality is now available for on-premise FDMEE from PSU 11.1.2.4.210

I have covered the planning REST API with PBCS in previous posts so it may be worth having a read of them if you have not already because this is going to be in similar vein and I don’t want to have to go into the same detail when I have covered it before.

As you may well be aware if you have worked with PBCS that it is bundled with a cut down version of FDMEE which only known as data management.

If you have used the epm automate utility you will have seen that it has two options when integrating with data management, the documentation states:

Run Data Rule

Executes a Data Management data load rule based on the start period and end period, and import or export options that you specify.

Usage: epmautomate rundatarule RULE_NAME START_PERIOD END_PERIOD IMPORT_MODE EXPORT_MODE [FILE_NAME]

Run Batch

Executes a Data Management batch.

Usage: epmautomate runbatch BATCH_NAME

I am not going to cover using the epm automate utility as it is pretty straight forward and I want to concentrate on the REST API.

If you look at the PBCS REST API documentation there is presently nothing in there about executing the run rule and batch functionality, it must be available as that is how the epm automate utility operates.

Well, it is available which is good or that would have been the end of this blog, maybe it has just been missed in the documentation.

Before I look at the data management REST API I want to quickly cover uploading a file so that it can be processeed, it would be no use if you did not have a file to process.

I did cover in detail uploading files to PBCS but there is a slight difference when it comes to data management as the file needs to be uploaded to a directory that it can be imported from.

To upload a file using the REST API there is a post resource available using the URL format:

https://server/interop/rest/{api_version}/applicationsnapshot/{applicationSnapshotName}/contents?q={“isLast”:”boolean”,”extDirPath”:”upload_dir”chunkSize”:”sizeinbytes”,”isFirst”:”boolean”}

{applicationSnapshotName} is the name of the file to be uploaded

The query parameters are in JSON format:

chunkSize = Size of chunk being sent in bytes

isFirst = If this is the first chunk being sent set to true

isLast = If this is the last chunk being sent set to true.

extDirPath = Directory to upload file to, if you are not uploading to data management then this parameter is not required which is why I didn't cover it in my previous post.

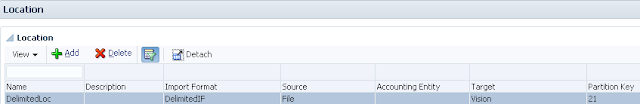

When you create a data load rule in data management and select the file name a pop up window will open and you can drill down the directory structure to find the required file.

It is slightly confusing that it starts with inbox and then has another directory called inbox, anyway I am going to load to the inbox highlighted which mean the extDirPath parameter will need to be set with a value of “inbox”.

Just like in my previous posts I am going to provide an example s with PowerShell scripts to demo how it can be simply achieved, if you are going to attempt this then you can pretty much use any scripting language you feel the most comfortable with.

In the above example the username and password are encoded and passed into the request header.

The file is uploaded from the local machine to the data management inbox using the “Invoke-RestMethod” cmdlet.

The response status codes are:

0 = success

-1 = in progress

Any other code is a failure.

The response was 0 which means the upload was a success and the file can be viewed and selected in the data load rule setup.

Now the file has been uploaded and there is a data load rule the data management the REST API can come into play to execute the rule.

The API can be reached from the following URL format:

https://server/aif/rest/{api_version}/jobs

The current api version is V1

I am going to use boomerang REST client for chrome to demonstrate.

Using a GET request against the REST resource returns the jobs that has been executed in data management.

The response includes the total number of jobs and a breakdown of each job including the status, id, who executed the job and output log.

If I run the same request against the 11.1.2.4.100 on-premise FDMEE the following is returned.

I deliberately executed the request with no authentication header just to show that the REST resource is available, adding the header returns the following response.

It looks like required Shared Services Java methods don’t yet exist in on-premise so that is as far you can get at the moment.

Back to PBCS and to run a rule the same URL format is required but this time it will be using the post request, the payload or body of the request has to include the job information in JSON format.

The parameters to supply are:

Once the request is posted the response will contain information about the rule that has been executed.

The rule status is shown as running, to keep checking the status of the rule then the URL that is returned in the href parameter can be accessed using a get request.

This time the status is returned as success so the rule has completed, the id can be matched to the process id in data management.

Converting this into a script is a pretty simple task.

The URL containing the job id can stored from the response and then another request executed to check the status.

Once again this can be confirmed in the process details area in data management.

Moving on to running a batch which is the same as running a rule except with less parameters in the payload.

The required parameters are “jobType” and “jobName” in JSON format.

The “jobType “value will be “BATCH” and the “jobname” will be the name of the batch, nice and simple.

The response is exactly the same as when running a rule.

In scripting terms it is the same logic as with running a rule, this time in my example I have updated the script so that it keeps checking the status of the batch until a value other than running (-1) is returned.

Well that covers what is currently available with the data management REST API, hopefully this will make its way down to on-premise FDMEE in the near future, it may appear with the imminent release of hybrid functionality but we will just have to wait and see.

Today I am going to look at what is available using the FDMEE REST web service. I will be focusing on PBCS because, unfortunately, this option is not currently available for on-premise. Maybe this will change in the next PSU or further down the line; we will have to wait and see.

Update December 2016

REST functionality is now available for on-premise FDMEE from PSU 11.1.2.4.210

I have covered the planning REST API with PBCS in previous posts, so it may be worth reading them if you have not already. This post is in a similar vein and I don’t want to go into the same detail again.

As you may well be aware if you have worked with PBCS, it is bundled with a cut-down version of FDMEE known as data management.

If you have used the EPM Automate utility, you will have seen that it has two commands for integrating with data management. The documentation states:

Run Data Rule

Executes a Data Management data load rule based on the start period and end period, and import or export options that you specify.

Usage: epmautomate rundatarule RULE_NAME START_PERIOD END_PERIOD IMPORT_MODE EXPORT_MODE [FILE_NAME]

Run Batch

Executes a Data Management batch.

Usage: epmautomate runbatch BATCH_NAME

I am not going to cover using the EPM Automate utility, as it is pretty straightforward and I want to concentrate on the REST API.

If you look at the PBCS REST API documentation, there is presently nothing in there about running data rules or batches. The functionality must exist, though, because that is how the EPM Automate utility operates.

Well, it is available, which is good or that would have been the end of this blog. Maybe it has just been missed from the documentation.

Before I look at the data management REST API, I want to quickly cover uploading a file so that it can be processed. It would be no use if you did not have a file to process.

I have covered uploading files to PBCS in detail before, but there is a slight difference when it comes to data management: the file needs to be uploaded to a directory that it can be imported from.

To upload a file using the REST API, there is a POST resource available using the URL format:

https://server/interop/rest/{api_version}/applicationsnapshots/{applicationSnapshotName}/contents?q={"isLast":"boolean","extDirPath":"upload_dir","chunkSize":"sizeinbytes","isFirst":"boolean"}

{applicationSnapshotName} is the name of the file to be uploaded.

The query parameters are in JSON format:

chunkSize = Size of chunk being sent in bytes

isFirst = If this is the first chunk being sent set to true

isLast = If this is the last chunk being sent set to true.

extDirPath = Directory to upload file to, if you are not uploading to data management then this parameter is not required which is why I didn't cover it in my previous post.

When you create a data load rule in data management and select the file name, a pop-up window opens and you can drill down the directory structure to find the required file.

It is slightly confusing that the structure starts with inbox and then has another directory called inbox. Anyway, I am going to load to the highlighted inbox, which means the extDirPath parameter needs to be set to “inbox”.

Just like in my previous posts, I am going to provide examples with PowerShell scripts to demonstrate how simply this can be achieved. If you are going to attempt this, you can use pretty much any scripting language you feel most comfortable with.

In the above example the username and password are encoded and passed into the request header.

The file is uploaded from the local machine to the data management inbox using the “Invoke-RestMethod” cmdlet.
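If PowerShell is not your thing, here is a rough Python equivalent of the upload step using only the standard library. It is a sketch under assumptions, not the script above. It builds one POST per chunk with the q parameter set as described earlier, sends an encoded user:password in a Basic Authorization header, and posts the raw bytes as application/octet-stream. The host "server", the 11.1.2.3.600 interop API version, the 50 MB chunk size and the helper names are all assumptions for illustration. For PBCS the user name is typically supplied as identitydomain.username, but check what your instance expects.

```python
import base64
import json
import math
import urllib.request
from urllib.parse import quote

# Assumptions: "server" is a placeholder host and 11.1.2.3.600 is the interop
# (LCM) API version I believe PBCS exposed; check both against your instance.
BASE_URL = "https://server/interop/rest/11.1.2.3.600"
CHUNK_SIZE = 50 * 1024 * 1024  # assumed chunk size of 50 MB


def basic_auth_header(user, password):
    """Encode "user:password" as an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def chunk_requests(file_name, data, user, password, ext_dir="inbox", chunk_size=CHUNK_SIZE):
    """Build one POST request per chunk, flagging the first and last chunks."""
    chunk_count = max(1, math.ceil(len(data) / chunk_size))
    prepared = []
    for index in range(chunk_count):
        chunk = data[index * chunk_size:(index + 1) * chunk_size]
        query = {
            "isFirst": index == 0,
            "isLast": index == chunk_count - 1,
            "chunkSize": len(chunk),
            "extDirPath": ext_dir,  # directory data management imports from
        }
        # The q parameter is JSON, percent-encoded so it is safe in the URL.
        q = quote(json.dumps(query, separators=(",", ":")))
        url = f"{BASE_URL}/applicationsnapshots/{quote(file_name)}/contents?q={q}"
        prepared.append(urllib.request.Request(
            url,
            data=chunk,
            method="POST",
            headers={
                "Authorization": basic_auth_header(user, password),
                "Content-Type": "application/octet-stream",
            },
        ))
    return prepared


def upload_file(path, file_name, user, password):
    """Send each chunk in turn and return the parsed JSON responses."""
    with open(path, "rb") as f:
        data = f.read()
    responses = []
    for request in chunk_requests(file_name, data, user, password):
        with urllib.request.urlopen(request) as response:
            responses.append(json.load(response))
    return responses
```

In this sketch, `upload_file` sends every chunk even if an earlier one fails. A production script would probably inspect each response's status before sending the next chunk.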

The response status codes are:

- 0 = success

- -1 = in progress

- any other code = failure

The response was 0, which means the upload was a success and the file can be viewed and selected in the data load rule setup.
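Checking that status code in a script only takes a few lines. Here is a minimal Python sketch. It assumes the response body is JSON with a numeric `status` field and, on failure, a `details` field carrying the message. The `details` field and the `UploadError` name are assumptions, not something confirmed above.

```python
import json


class UploadError(Exception):
    """Raised when a response reports a failure status code."""


def check_status(response_text):
    """Translate the numeric status in a JSON response into a label.

    0 means success, -1 means in progress and any other code is a failure.
    The "details" field used in the error message is an assumption.
    """
    body = json.loads(response_text)
    status = body.get("status")
    if status == 0:
        return "success"
    if status == -1:
        return "in progress"
    raise UploadError(f"status {status}: {body.get('details')}")
```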

Now that the file has been uploaded and there is a data load rule in data management, the REST API can come into play to execute the rule.

The API can be reached from the following URL format:

https://server/aif/rest/{api_version}/jobs

The current API version is V1.

I am going to use the Boomerang REST client for Chrome to demonstrate.

Using a GET request against the REST resource returns the jobs that have been executed in data management.

The response includes the total number of jobs and a breakdown of each job, including the status, id, who executed the job and the output log.
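The same GET request can be scripted. Below is a Python sketch using only the standard library. The host "server" is a placeholder. The JSON field names used to summarise the response (`items`, `jobId`, `jobStatus`, `executedBy`) are assumptions, so inspect a real response before relying on them.

```python
import base64
import json
import urllib.request

# "server" is a placeholder host; V1 is the API version given above.
JOBS_URL = "https://server/aif/rest/V1/jobs"


def jobs_request(user, password, url=JOBS_URL):
    """Build a Basic-authenticated GET request for the jobs resource."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, method="GET", headers={"Authorization": f"Basic {token}"})


def summarise_jobs(body):
    """Reduce a jobs response to (jobId, jobStatus, executedBy) tuples.

    The "items", "jobId", "jobStatus" and "executedBy" names are assumptions;
    inspect a real response before relying on them.
    """
    return [
        (job.get("jobId"), job.get("jobStatus"), job.get("executedBy"))
        for job in body.get("items", [])
    ]


def list_jobs(user, password):
    """Fetch the executed jobs and summarise them."""
    with urllib.request.urlopen(jobs_request(user, password)) as response:
        return summarise_jobs(json.load(response))
```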

If I run the same request against on-premise FDMEE 11.1.2.4.100, the following is returned.

I deliberately executed the request with no authentication header just to show that the REST resource is available. Adding the header returns the following response.

It looks like the required Shared Services Java methods don’t yet exist on-premise, so that is as far as you can get at the moment.

Back to PBCS. To run a rule, the same URL format is required, but this time with a POST request. The payload (body) of the request has to include the job information in JSON format.

The parameters to supply are:

- jobType, which will be DATARULE.

- jobName, the name of the data load rule defined in data management.

- startPeriod, the first period which is to be loaded.

- endPeriod, which for a single-period load is the same as startPeriod and for a multi-period load is the last period for which data is to be loaded.

- importMode, which determines how the data is imported into data management. Possible values are:

    - APPEND to add to the existing POV data in Data Management

    - REPLACE to delete the POV data and replace it with the data from the file

    - NONE to skip data import into the data management staging table

- exportMode, which determines how the data is exported and loaded to the planning application. Possible values are:

    - STORE_DATA merges the data in the data management staging table with the existing planning application data

    - ADD_DATA adds the data in the data management staging table to the existing planning application data

    - SUBTRACT_DATA subtracts the data in the data management staging table from the existing planning application data

    - REPLACE_DATA clears the POV data in the planning application and then loads the data in the data management staging table. The data is cleared for Scenario, Version, Year, Period, and Entity.

    - NONE does not perform the data export and load into the planning application

- fileName (optional). If it is not supplied, the file defined in the data load rule is used.
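The parameter rules above can be captured in a small Python helper that builds the payload and the POST request. It rejects unexpected mode values before anything is sent. This is a sketch, assuming a placeholder host named "server" and Basic authentication. The helper names are hypothetical, and the rule name "DLR_ACTUALS" and periods like "Jan-16" in the usage line are made up for illustration.

```python
import base64
import json
import urllib.request

JOBS_URL = "https://server/aif/rest/V1/jobs"  # placeholder host
IMPORT_MODES = {"APPEND", "REPLACE", "NONE"}
EXPORT_MODES = {"STORE_DATA", "ADD_DATA", "SUBTRACT_DATA", "REPLACE_DATA", "NONE"}


def data_rule_payload(rule, start_period, end_period, import_mode, export_mode, file_name=None):
    """Build the JSON body for running a data load rule."""
    if import_mode not in IMPORT_MODES:
        raise ValueError(f"unexpected importMode: {import_mode}")
    if export_mode not in EXPORT_MODES:
        raise ValueError(f"unexpected exportMode: {export_mode}")
    payload = {
        "jobType": "DATARULE",
        "jobName": rule,
        "startPeriod": start_period,
        "endPeriod": end_period,  # same as start_period for a single period
        "importMode": import_mode,
        "exportMode": export_mode,
    }
    if file_name is not None:
        # Optional: leave it out to use the file defined in the rule.
        payload["fileName"] = file_name
    return payload


def run_job_request(payload, user, password, url=JOBS_URL):
    """Build a Basic-authenticated POST that submits the payload as JSON."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
    )
```

Usage would look something like `urllib.request.urlopen(run_job_request(data_rule_payload("DLR_ACTUALS", "Jan-16", "Jan-16", "REPLACE", "STORE_DATA"), user, password))`.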

Once the request is posted, the response will contain information about the rule that has been executed.

The rule status is shown as running. To keep checking the status of the rule, the URL returned in the href parameter can be accessed using a GET request.

This time the status is returned as success, so the rule has completed, and the id can be matched to the process id in data management.

Converting this into a script is a pretty simple task.

The URL containing the job id can be stored from the response, and then another request can be executed to check the status.
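Here is a Python sketch of that step: pull the href out of the POST response, then GET it to read the job's current state. The response layout assumed here (a `links` list whose entries carry `href`, plus `jobId`, `status` and `jobStatus` fields) is my assumption based on the response described above, and the helper names are hypothetical.

```python
import base64
import json
import urllib.request


def job_href(response):
    """Return the first href found in the response's "links" list (assumed layout)."""
    for link in response.get("links", []):
        if link.get("href"):
            return link["href"]
    raise KeyError("no href found in job response")


def status_request(href, user, password):
    """Build a Basic-authenticated GET request against the stored job URL."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(href, method="GET", headers={"Authorization": f"Basic {token}"})


def check_job(href, user, password):
    """Return (jobId, status, jobStatus) for the job behind href."""
    with urllib.request.urlopen(status_request(href, user, password)) as response:
        body = json.load(response)
    return body.get("jobId"), body.get("status"), body.get("jobStatus")
```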

Once again this can be confirmed in the process details area in data management.

Moving on to running a batch, which is the same as running a rule except with fewer parameters in the payload.

The required parameters are “jobType” and “jobName” in JSON format.

The “jobType” value will be “BATCH” and “jobName” will be the name of the batch, nice and simple.

The response is exactly the same as when running a rule.
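In Python, the batch request only differs from the rule request in its payload. Here is a minimal sketch, assuming a placeholder host named "server" and Basic authentication. The helper names and the batch name "BATCH_ACTUALS" are hypothetical.

```python
import base64
import json
import urllib.request

JOBS_URL = "https://server/aif/rest/V1/jobs"  # placeholder host


def batch_payload(batch_name):
    """Running a batch only needs jobType and jobName."""
    return {"jobType": "BATCH", "jobName": batch_name}


def run_batch_request(batch_name, user, password, url=JOBS_URL):
    """Build the Basic-authenticated POST request that starts the batch."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(batch_payload(batch_name)).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
    )
```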

In scripting terms it is the same logic as running a rule. This time, in my example, I have updated the script so that it keeps checking the status of the batch until a value other than running (-1) is returned.
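That polling loop can be sketched in Python as follows. The status lookup is passed in as a function, which keeps the loop independent of HTTP and easy to test. The 10-second interval, the 360-check limit and the assumption that the job URL returns a JSON body with a `status` field are all illustrative choices, not values from the original script.

```python
import json
import time
import urllib.request

RUNNING = -1  # status value reported while a rule or batch is still running


def wait_for_job(fetch_status, interval=10.0, max_checks=360, sleep=time.sleep):
    """Poll fetch_status() until it returns something other than RUNNING.

    Returns the final status (0 = success, anything else = failure) or
    raises TimeoutError if the job is still running after max_checks polls.
    """
    for _ in range(max_checks):
        status = fetch_status()
        if status != RUNNING:
            return status
        sleep(interval)
    raise TimeoutError(f"job still running after {max_checks} checks")


def status_fetcher(href, auth_header):
    """Return a function that GETs the job URL and reads its "status" field."""
    def fetch():
        request = urllib.request.Request(href, headers={"Authorization": auth_header})
        with urllib.request.urlopen(request) as response:
            return json.load(response).get("status")
    return fetch
```

Injecting `sleep` and `fetch_status` means the loop can be exercised without a live PBCS instance. Against a real service you would call `wait_for_job(status_fetcher(href, auth_header))`.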

Well, that covers what is currently available with the data management REST API. Hopefully this will make its way down to on-premise FDMEE in the near future. It may appear with the imminent release of hybrid functionality, but we will just have to wait and see.