On to the third part in this series looking at the new FDMEE hybrid functionality, which was introduced in 11.1.2.4.200.

In the first part I went through the necessary configuration to get up and running with the hybrid functionality.

In the last part I covered loading data from on-premise FDMEE to a PBCS application and what happens behind the scenes with the REST API.

In this post I am going to reverse the process: export data from a PBCS application and load it into an on-premise EPM application. Once again I will focus on what is actually happening with the REST API.

The assumption is going to be that you have read through the previous posts and understand what hybrid is all about.

The first step is to add a PBCS application as a target. I am not going to cover what happens with REST here, as I provided the details in the last post.

Then enter the PBCS credentials.

Select the Planning application that should have already been registered in PBCS data management.

If any ASO plan types have been registered in PBCS data management then you should see them in the dropdown if you select the type as Essbase.

In my example I am going to extract data from a PBCS BSO plan type and then load it into an on-premise Essbase BSO database, though it could be any supported application. The process to achieve this is no different from working totally on-premise, so I will only briefly cover it.

When creating an import format the Source Type will be EPM and the Source will be the PBCS application, Target type is EPM and Target is a registered on-premise EPM application.

A new location is added and the import format that has just been created is selected.

When creating a new data load rule, the category and the source plan type to extract data from are selected.

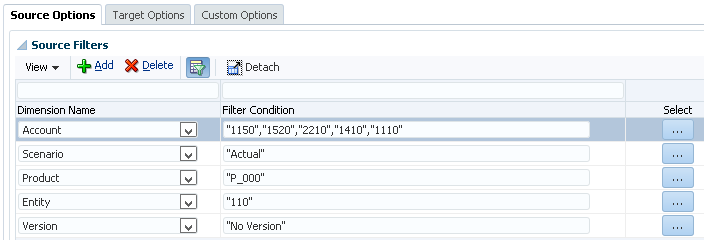

In the source options of the rule I defined the members I want to extract data for.

When using the member selector there is currently no option to refresh members, as there is with on-premise.

This is done in the “Target Application” area.

I covered in detail in the last post how the members are refreshed from PBCS using the REST API.

Once the members have been refreshed, the member selector should be populated for each dimension.

Setting up the data load mappings is exactly the same as loading from on-premise to PBCS, except this time it is in reverse: the source is PBCS members and, in my case, the target is members in an Essbase database.

Let us move on to the exporting of the data from PBCS and moving that into on-premise.

In the on-premise FDMEE workbench it works exactly as you would expect: the data is imported into FDMEE, validated and then exported into the Essbase database.

So what is happening behind the scenes? The data does not magically appear on-premise ready to be loaded into the FDMEE database.

If you look in the process details for PBCS you will just see a step for the data being extracted from the planning application.

The on-premise FDMEE process details break the steps down a little further but do not give away too much unless you start looking into the logs.

The first step, “Extract data from Planning application Vision”, is where all the new functionality occurs; once the data arrives on-premise in the form of a file, the standard FDMEE functionality that we are used to takes over.

To extract the data a POST request against a REST resource in PBCS data management is executed.

The resource URL is the same one that is used in most of the interactions with PBCS data management.

https://<pbcs_instance>/aif/rest//jobs

It is the JSON input payload of the request that is the main driver to extract the data.

The jobName value is the name of the PBCS application.

The jobType value for a data export is always “DIRECTEXPORT”.

The planName is the plan type name.

The exportDataScript value contains an Essbase calculation script using the DATAEXPORT command. The FIX in the calc script is generated from the definition of the FDMEE data load rule.
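As a rough illustration of how these four values fit together, the sketch below assembles the payload in Python. The key names are the ones described above; the helper name `build_export_payload` and the sample values are my own for illustration, and the request that FDMEE really generates may carry additional properties.

```python
import json


def build_export_payload(app_name: str, plan_type: str, calc_script: str) -> str:
    """Assemble the JSON body for a data export job (illustrative only)."""
    payload = {
        "jobName": app_name,              # name of the PBCS application
        "jobType": "DIRECTEXPORT",        # fixed value for a data export
        "planName": plan_type,            # plan type to extract from
        "exportDataScript": calc_script,  # calc script containing DATAEXPORT
    }
    return json.dumps(payload)


# Placeholder script text; the real one is generated from the data load rule.
body = build_export_payload("Vision", "Plan1", 'FIX ("Actual") ... ENDFIX')
print(body)
```

The resulting string would be sent as the body of the POST request to the jobs resource.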

In my example the formatted calc script would look like:

SET DataExportOptions

{

DATAEXPORTCOLFORMAT ON;

DATAEXPORTDIMHEADER ON;

DATAEXPORTDRYRUN OFF;

DataExportRelationalFile OFF;

DataExportNonExistingBlocks OFF;

DataExportLevel ALL;

DATAEXPORTCOLHEADER "Period";

DATAEXPORTOVERWRITEFILE ON;

DataExportDynamicCalc OFF;

};

FIX ("1150","1520","2210","1410","1110","110","BaseData","Apr","P_000","Actual","No Version","FY16")

DATAEXPORT "File" "," "PLACE_HOLDER_DATA_FILE_NAME.txt" "#";

ENDFIX
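To make the link between the data load rule and the script a little more concrete, here is a minimal sketch of how a script of this shape could be put together from a list of members. This is not how FDMEE does it internally (that is not visible from the outside); the function name `build_export_script` is hypothetical and the option values are simply copied from my example.

```python
def build_export_script(members, col_header="Period"):
    """Return a DATAEXPORT calc script fixed on the given members."""
    options = [
        "DATAEXPORTCOLFORMAT ON;",
        "DATAEXPORTDIMHEADER ON;",
        "DATAEXPORTDRYRUN OFF;",
        "DataExportRelationalFile OFF;",
        "DataExportNonExistingBlocks OFF;",
        "DataExportLevel ALL;",
        f'DATAEXPORTCOLHEADER "{col_header}";',
        "DATAEXPORTOVERWRITEFILE ON;",
        "DataExportDynamicCalc OFF;",
    ]
    # Each member is double-quoted and the list is comma separated.
    fix_list = ",".join(f'"{name}"' for name in members)
    lines = [
        "SET DataExportOptions",
        "{",
        *options,
        "};",
        f"FIX ({fix_list})",
        # The file name is a placeholder that is resolved on the PBCS side.
        'DATAEXPORT "File" "," "PLACE_HOLDER_DATA_FILE_NAME.txt" "#";',
        "ENDFIX",
    ]
    return "\n".join(lines)


script = build_export_script(
    ["1150", "1520", "2210", "1410", "1110", "110",
     "BaseData", "Apr", "P_000", "Actual", "No Version", "FY16"]
)
print(script)
```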

The JSON response includes details on the job that is being executed including the status and ID.
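If you were scripting this yourself, picking the status and ID out of the response could look something like the sketch below. Treat the property names `jobId` and `jobStatus` as assumptions to check against the response you actually get back, and note that the sample response text is made up for the example, not captured from PBCS.

```python
import json


def summarise_job(response_text: str):
    """Return (job id, job status) from a job response body."""
    job = json.loads(response_text)
    # Property names are assumed; .get() returns None if they are absent.
    return job.get("jobId"), job.get("jobStatus")


# Made-up sample response, for illustration only.
sample_response = '{"jobId": 92, "jobStatus": "RUNNING"}'
job_id, job_status = summarise_job(sample_response)
print(job_id, job_status)
```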

The name of the text file is generated on the PBCS side and is a combination of the application name, plan type and process ID.

So in my example the filename is: Vision_Plan1_92.txt

If extracting from an ASO Essbase database then the export script would contain MDX, and here is an example using the REST API to extract ASO data.

Anyway, back to my original process. Once the export has completed, the file is automatically renamed and moved into a directory ready to be downloaded; in my example the file is renamed to Vision_92.dat.
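The two naming patterns can be expressed as a couple of one-liners. The first follows the rule stated above; the second (application name plus process ID) is only inferred from my single example, so take it as an assumption rather than a documented rule. Both function names are hypothetical.

```python
def export_file_name(app_name: str, plan_type: str, process_id: int) -> str:
    """Name of the text file written by the export on the PBCS side."""
    return f"{app_name}_{plan_type}_{process_id}.txt"


def download_file_name(app_name: str, process_id: int) -> str:
    """Name after the rename (pattern inferred from one example)."""
    return f"{app_name}_{process_id}.dat"


print(export_file_name("Vision", "Plan1", 92))
print(download_file_name("Vision", 92))
```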

The file can be viewed from the inbox/output explorer in the planning simplified interface.

The next step is that the .dat file is downloaded from PBCS to the on-premise FDMEE data directory. The download is achieved with the application management REST API.

I covered downloading files in a previous post on the PBCS REST API and here is an example of how to do this using a PowerShell script.
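For anybody who prefers Python to PowerShell, a download request could be prepared along the lines of the sketch below. I am assuming the `applicationsnapshots/<name>/contents` resource of the application management (interop) REST API; the instance URL, API version and credentials are placeholders, the function name is hypothetical, and the request is only built here, not sent.

```python
import base64
import urllib.parse
import urllib.request


def build_download_request(base_url, api_version, file_name, user, password):
    """Prepare (but do not send) a GET request for a file held in PBCS."""
    # Assumed resource path; check it against the REST API documentation.
    url = (
        f"{base_url}/interop/rest/{api_version}/applicationsnapshots/"
        f"{urllib.parse.quote(file_name, safe='')}/contents"
    )
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Basic {token}")
    return request


# Placeholder values only; none of these are real.
download_request = build_download_request(
    "https://pbcs.example.invalid", "API_VERSION", "Vision_92.dat", "user", "password"
)
print(download_request.get_method(), download_request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` and writing the response bytes to disk would complete the download.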

The file is downloaded from PBCS and renamed to include pbcs and the originating on-premise process ID. The target location depends on the application root folder configuration in FDMEE.

If the option to purge the data file is set to Yes then a REST resource is called again, this time using a DELETE request.

The above request deletes the file “Vision_92.dat” from PBCS.
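A delete of this kind could be prepared in Python as sketched below. As before, the `applicationsnapshots/<name>` resource path is my assumption about the application management REST API, the instance URL and API version are placeholders, and authentication is left out to keep the example short. The request is built but not sent.

```python
import urllib.parse
import urllib.request


def build_delete_request(base_url, api_version, file_name):
    """Prepare (but do not send) a DELETE request for a file held in PBCS."""
    # Assumed resource path; an Authorization header would also be required.
    url = (
        f"{base_url}/interop/rest/{api_version}/applicationsnapshots/"
        f"{urllib.parse.quote(file_name, safe='')}"
    )
    return urllib.request.Request(url, method="DELETE")


# Placeholder values only; none of these are real.
delete_request = build_delete_request(
    "https://pbcs.example.invalid", "API_VERSION", "Vision_92.dat"
)
print(delete_request.get_method(), delete_request.full_url)
```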

Now that the exported file is accessible from on-premise FDMEE, the remaining steps in the process are no different from what usually happens when loading from a source file: the file is loaded into the FDMEE database, validated and then loaded to the target Essbase database.

Well, that pretty much completes what I intend to cover on FDMEE hybrid functionality for now. If additional functionality is added in the future then no doubt there will be more posts on this subject.
