Well, it has been almost a year since the 11.1.2.4.210 patch set was released, but the next patch, .220, has finally landed.

Besides bug fixes, there are some new features in the .220 release, which I will quickly go through in this post.

I must admit I was expecting more of the EPM Cloud functionality to be pushed down to on-premise in this release, but unfortunately that has not been the case.

There are four new features in the patch readme, and one of them already existed in .210. Anyway, let us have a quick look; the first new feature is:

Import and Export of Mapping Scripts in Text Mapping Files

“FDMEE now supports the export and import of mapping scripts in a text file. This support includes both Jython and SQL scripts. The scripts are enclosed in a <!SCRIPT> tag.”

It is amazing that it has taken this long to include custom scripting in mapping text files. Take the following mapping, which has custom Jython scripting applied.

The mapping can then be exported using either the “Current Dimension” or “All Dimensions” option.

The file is then saved to a specified location under the FDMEE root folder.

If you open the exported mapping text file, you will see that the only difference from previous versions is that the custom script section is included.

The custom script can be spread across multiple lines and just needs to be enclosed in the <!SCRIPT> tag.

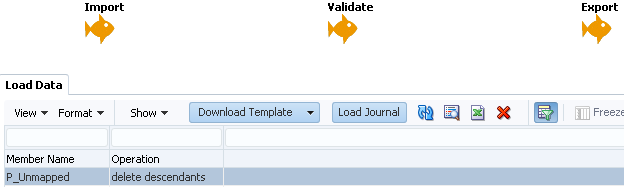

Now let's import a mapping text file that contains a simple custom SQL script.

Just like in previous versions, the file needs to be uploaded or copied to a location under the FDMEE root folder and then imported.

The mapping file is selected.

The import mode and validation options are exactly the same as before.

Now the imported custom script is available with the mapping definition.

It is also possible to use the REST API to import and export the mappings, which you can read about here.
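For anyone wanting to script the mapping import, here is a minimal sketch that builds the request without sending it. It assumes the on-premise jobs resource at /aif/rest/V1/jobs, basic authentication, and the MAPPINGIMPORT payload fields (jobType, jobName, fileName, importMode, validationMode, locationName) used by the Data Management REST API in EPM Cloud; please check these against the REST API documentation for your release. The host, port, credentials, file name and location are all placeholders.

```python
import base64
import json
import urllib.request

# Placeholder server details (assumption): replace with your own FDMEE host and port.
BASE_URL = "http://fdmee-host.example.com:6550/aif/rest/V1"


def mapping_import_request(user, password, dimension, file_name, location,
                           import_mode="MERGE", validation_mode="false"):
    """Build (but do not send) a POST to the jobs resource for a mapping import."""
    # Field names assumed from the EPM Cloud Data Management REST API.
    payload = {
        "jobType": "MAPPINGIMPORT",
        "jobName": dimension,               # dimension to import mappings for
        "fileName": file_name,              # file under the FDMEE root folder
        "importMode": import_mode,          # e.g. MERGE or REPLACE
        "validationMode": validation_mode,
        "locationName": location,
    }
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{BASE_URL}/jobs",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
        method="POST",
    )


# Hypothetical values for illustration only.
req = mapping_import_request("admin", "password", "Account",
                             "inbox/account_map.txt", "LOC_FILE")
print(req.get_method(), req.full_url)
# To actually submit the job: urllib.request.urlopen(req)
```

Building the request separately from sending it makes it easy to inspect the payload before pointing it at a real server.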

On to the next new feature:

Registering Duplicate Target Application Names

“FDMEE now enables you to register target applications with the same name. This may be the case where a customer has multiple service environments and the application name is the same in each environment, or the application names are identical in development and production environments.

This feature enables you to add a prefix to the application name when registering the application so that it can be registered successfully in FDMEE and be identified correctly in the list of target applications.

Target applications with prefixes are not backward compatible, and cannot be migrated to an 11.1.2.4.210 or earlier release. Only a target application without a prefix name can be migrated to an earlier release.”

So basically, when you add a new target application, you have the option to include a prefix.

I have already written about this functionality in EPM Cloud, and it operates in the same way; you can read about it in more detail here.

The third new feature is:

Support Member Names with a Comma When Exporting to Planning

“When export to Planning, you can now export a dimension member name that contains a comma (,). A new Member name may contain comma setting has been added to Target options, which enables the feature.”

This is another feature that has been pushed down from EPM Cloud, where it appeared in the 17.07 release.

I have always wondered why this needs to be an option at all; member names with commas should be handled by default.

The property can be set either at target application level or in the target options for a data load rule.

If the property value is not set, it defaults to no.

I looked at this functionality in the past and found that it does not apply to all load methods.

Take a rule with a load method of numeric data only and “Member name may contain comma” set to no.

In the following example there is an entity member containing a comma, and the export to the target Planning application completes successfully.

The file produced before the data is loaded to the target application shows that the member containing the comma is enclosed in quotes, so it loads without any problem.

There are no problems loading members containing commas with either of the numeric data only load methods.
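The quoting in the numeric data only file follows the usual CSV convention. Python's csv module is used below purely to illustrate that convention, not because FDMEE uses it: with the default QUOTE_MINIMAL setting only the field containing the delimiter is quoted, and a CSV-aware reader recovers the member intact. The row values are hypothetical.

```python
import csv
import io

# Hypothetical row: period, entity member containing a comma, amount.
row = ["Jan", "Sales, North", "100"]

buffer = io.StringIO()
# QUOTE_MINIMAL (the default) quotes only fields that contain the delimiter,
# the quote character or a line-terminator character.
csv.writer(buffer, lineterminator="\n").writerow(row)
line = buffer.getvalue()
print(line, end="")  # Jan,"Sales, North",100

# Reading the line back with a CSV-aware reader recovers all three fields.
parsed = next(csv.reader(io.StringIO(line)))
print(parsed == row)  # True
```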

If I switch the rule to the “All Data Types” load method, this time the export fails.

The process log contains the following error:

“The member Dummy does not exist for the specified plan type or you do not have access to it.”

Looking at the export file generated by FDMEE gives a clearer indication of why the load failed.

When the load method is set to “All Data Types”, the Outline Load Utility (OLU) is used to load the data; with a numeric data only load method, an Essbase data load rule is created to load the data instead.

With the OLU method, the point-of-view contains a comma-separated member list, and because the entity member contains a comma, the member name is split at the comma and the load fails. If it had been a driver member such as an account that contained the comma, the load would not have failed.
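To see why the comma breaks the POV, here is a toy illustration using hypothetical member names (the real member from the example is not reproduced here). It is not how the OLU is implemented internally, only a demonstration of what happens when a comma-separated member list is split on commas.

```python
# Hypothetical POV members; the entity member contains a comma.
pov_members = ["FY17", "Jan", "Actual", "Working", "Sales, North"]

# Build a comma-separated POV list.
pov_field = ",".join(pov_members)

# Splitting the list on commas yields one member too many, and the entity
# is broken into two fragments that are not valid members.
parsed = pov_field.split(",")
print(len(pov_members), len(parsed))  # 5 6
print(parsed[-2:])                    # ['Sales', ' North']
```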

The log also contains a reference to the file delimiter.

DEBUG [AIF]: SELECT parameter_value FROM aif_bal_rule_load_params WHERE loadid = 1464 and parameter_name = 'EXPORT_FILE_DELIMITER'

DEBUG [AIF]: fileDelimiter: comma
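If you want to check which delimiter a particular load used, the query from the log can be run against the FDMEE repository. The sketch below mimics it with an in-memory SQLite table as a stand-in; the real repository will be Oracle or SQL Server, and aif_bal_rule_load_params has more columns than the three modelled here. The sample rows simply reuse the load IDs and values from the log extracts in this post.

```python
import sqlite3


def export_file_delimiter(conn, load_id):
    """Return the EXPORT_FILE_DELIMITER parameter for a load, or None if absent."""
    row = conn.execute(
        "SELECT parameter_value FROM aif_bal_rule_load_params "
        "WHERE loadid = ? AND parameter_name = 'EXPORT_FILE_DELIMITER'",
        (load_id,),
    ).fetchone()
    return row[0] if row else None


# In-memory stand-in for the repository table (only the queried columns).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE aif_bal_rule_load_params "
    "(loadid INTEGER, parameter_name TEXT, parameter_value TEXT)"
)
conn.executemany(
    "INSERT INTO aif_bal_rule_load_params VALUES (?, ?, ?)",
    [(1464, "EXPORT_FILE_DELIMITER", "comma"),
     (464, "EXPORT_FILE_DELIMITER", "tab")],
)

print(export_file_delimiter(conn, 1464))  # comma
print(export_file_delimiter(conn, 464))   # tab
```

Using a bound parameter for the load ID rather than string formatting keeps the query safe to reuse.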

The export delimiter will be set depending on the yes/no value in the “Member name may contain comma” property.

You can also see that the /DL parameter is set as part of the OLU load.

DEBUG [AIF]: Data Load: exportMode=STORE_DATA, loadMethod=OLU

Property file arguments: /DL:comma /DF:MM-DD-YYYY /TR

The POV is comma-separated, so if you add a member containing a comma to the POV, the load will fail.

Let me repeat the process, but this time with the property set to yes.

This time the export is successful.

The export file that is produced is tab delimited.

The value retrieved from the FDMEE repository is tab.

DEBUG [AIF]: SELECT parameter_value FROM aif_bal_rule_load_params WHERE loadid = 464 and parameter_name = 'EXPORT_FILE_DELIMITER'

DEBUG [AIF]: fileDelimiter: tab

The OLU field delimiter is set to tab using the /DL parameter.

DEBUG [AIF]: Data Load: exportMode=STORE_DATA, loadMethod=OLU

Property file arguments: /DL:tab
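The behaviour seen in the logs can be summarised in a few lines of Python. This is only a model of what the logs show, not FDMEE's own code: an unset or no value gives comma, yes gives tab, and the delimiter name is passed to the OLU as /DL (the other arguments such as /DF and /TR are left out). The record is hypothetical; splitting it with the chosen delimiter shows why only the tab setting keeps a comma-containing member in one piece.

```python
from typing import Optional

# Delimiter names as they appear in the log ("fileDelimiter: comma" / "tab").
DELIMITER_CHARS = {"comma": ",", "tab": "\t"}


def export_delimiter(member_may_contain_comma: Optional[str]) -> str:
    """Return the delimiter name for the property value; unset behaves like no."""
    if member_may_contain_comma and member_may_contain_comma.strip().lower() == "yes":
        return "tab"
    return "comma"


def olu_delimiter_argument(delimiter_name: str) -> str:
    """Format the delimiter the way it appears in the property file arguments."""
    return f"/DL:{delimiter_name}"


# Hypothetical record: an entity member containing a comma, and an amount.
record = ["Sales, North", "100"]

for setting in (None, "No", "Yes"):
    name = export_delimiter(setting)
    char = DELIMITER_CHARS[name]
    survives = char.join(record).split(char) == record
    print(setting, olu_delimiter_argument(name), survives)
# None /DL:comma False
# No /DL:comma False
# Yes /DL:tab True
```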

So, even though “Member name may contain comma” can be set for any load method, it only applies to the “All Data Types” method and only affects members in the point-of-view.

Remember, if the property is not set, it defaults to no.

The final new feature is not actually new, as it already exists with the same functionality in 11.1.2.4.210.

Support for REST APIs

“REST API can be used now in FDMEE to execute various jobs that run data load rules, batches, scripts, reports, and the import and export of mapping rules.”

I think the only difference is that the REST APIs are now officially supported, even though they were already referenced in the 11.1.2.4.210 FDMEE documentation.

I have previously written a couple of detailed posts about the REST API in FDMEE, which you can read here and here.

There are also some new features missing from the patch readme, one of them being the load method “All data types with auto-increment of line item”.

Not to worry, I have two posts about this functionality, which you can read here and here.

Finally, with the “All Data Types” load method there is the option to apply mappings to a data dimension.

Guess what, I have also written about this functionality, which you can read about here; look for “Is mapping data values possible with the all data type?”

I think that covers off what's new in FDMEE 11.1.2.4.220.