The 19.02 EPM Cloud release brings new REST API resources to set the administration mode of an application and assist with possible optimisation of an application.

The following is now possible through the REST API:

Up to now, the functionality has only been available either by manually applying or creating a job which can be rescheduled or run straight away. With the REST API you don’t have to be confined to these restrictions.

In the post I am going to through each of the new REST resources, providing an example with a REST client and a simple script.

Let us start off with the one I feel will be the most welcomed - the ability to change the administration mode of an application. I have blogged about this a couple of times in the past, the first was when the only way to automate the process was to either run a refresh database and change the administration mode or change the mode through migration files. The second post looked at the schedule job feature which was introduced in 18.05

It is possible to change an application between administrators only and all users through the UI and this is under application settings.

It is also possible to create a schedule job with the “Administration Mode” option.

This can be run immediately or scheduled and allows the application to be enabled for administrator or all users.

Moving on to the REST API, all the new functionality is available through the jobs resource which has the URL format of:

https://<epm_cloud_instance>/HyperionPlanning/rest/v3/applications/<application_name>/jobs

A POST method is required, and the body of the post must include the job details in JSON format.

The JSON should include:

jobType which will always be “Administration Mode”

jobName just provides a description of the job and can be named appropriately.

loginLevel is a parameter that defines which mode to place the application in, possible values are either “Administrators” or “All Users”.

An example to put the application in application mode using a REST client is as follows:

The response from the REST request includes the status, job ID, details and a URL to access the job information.

The application mode change should be instant so there will no need to keep checking the status until it is complete. The job will be available in the simplified interface and contain the value that was defined in “jobName” parameter.

Under application settings the application is now only enabled for administrators.

If non-administrators try and log into the application, they will be greeted with:

A simple change to the “loginLevel” parameter will update the application mode back to all users, the REST request would be:

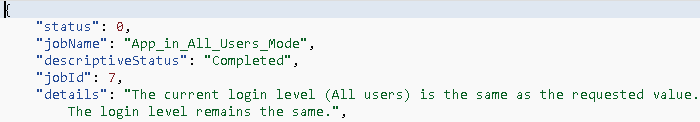

The response is similar except for the “details” parameter description.

Once again, the job activity will be available in the UI.

The application will be enabled for all users in the application settings.

The body of the REST request only allows two values for “loginLevel”, if a different value is specified then the following error will be returned in the response:

The reality is, the process of updating the administration mode of an application would need to be automated, this can be achieved using most scripting languages.

It can come down to personal preference or company policies to which language you select. The following is an example using PowerShell which does not take much effort to put together.

As the new REST functionality is all based around the jobs resource the script can be easily reused.

To update the application mode to all users, the only changes would be to the “jobName” and “loginLevel” variables.

After making the changes, running the script will enable the application for all users.

On to the next new welcomed feature and that is the ability to restructure a cube using the REST API.

It is possible to restructure a cube manually through database properties in Calculation Manager.

This can be automated by selecting the “Restructure Cube” option when creating a new job schedule.

The cube which requires restructuring can be selected in the schedule job options.

To achieve this through the REST API it is the same job resource URL and POST method.

This time the “jobType” will be “Restructure Cube”, the “jobName” can be named appropriately and “cubeName” is required to define which cube to restructure.

As a restructure is not instant, the response will no doubt inform that the process is running.

The status values are -1 = in progress; 0 = success; 1 = error; 2 = cancel pending; 3 = cancelled; 4 = invalid parameter

The response includes a URL to the job status, which can then be accessed to check whether the process has been completed.

The simplified interface contains the job activity.

To automate the process the same script can be reused, it only requires a few modifications for the job information which is included in the body of the REST request.

In previous blogs I have gone into more detail around repeatedly checking the status of the job, but in simple terms the job status URL which is returned in the REST response can be stored, with a GET request the job status can then be checked.

To confirm a restructure had taken place, before running the script I created some empty blocks in the cube.

After running the script, the empty blocks had been removed which confirms a restructure had taken place.

Moving on to the three remaining optimisation features, all of which are ASO related.

The first of these is the ability to compact the outline of an ASO cube. It is possible to compact the outline through database properties in Calculation Manager.

There is also a “Compact Outline” option in scheduled jobs in the simplified interface.

This provides a brief description of the functionality and allows you to define which cube to compact the outline.

If you don’t want to use the scheduling functionality in the UI, it is now possible to use the REST API.

As with all the new functionality, the same jobs URL is used and a similar body in the REST request.

The “jobType” will be “Compact Cube”, not sure why it wasn’t named “Compact Outline”. The “cubeName” parameter defines which cube to compact the outline.

The response includes the same parameters as the previous examples.

As compacting the outline is not instant, the job status will need to be repeatedly checked until it completes.

As you would expect the activity is available in the UI.

Again, the same script can be reused with a few minor alterations for the body of the REST request.

Next onto to merging data slices in an ASO cube. Data slices are automated created with actions like Smart View submits, data maps, smart pushes and data loads. Over time, the number of slices will increase, which can adversely affect performance. The slices can be either be merged into a single incremental slice or into the main cube, keeping in mind it does take longer to merge into the main cube and the time it takes relates to the size of the cube. When merging, zero values can be kept or removed.

Merging slices can be manually carried out through database properties in Calculation Manager.

To automate the process, there is a “Merge Data Slices” option when creating a new scheduled job in the simplified interface.

The job allows you to define which ASO database you wish to merge slices and whether to merge into the main database or a single incremental slice, zero values can be kept or removed.

If you don’t want to be constrained to scheduling a job, then this is where the REST API can help.

The REST API resource is the same jobs URL.

The “jobType” should be “Merge Data Slices”. The “cubeName” defines which cube to merge the slices.

There is a parameter called “mergeSliceType” which defines whether to merge into the main cube or incremental slice. The values are either “allIncrementalSlicesInMain” or “allIncrementalSlicesInOneIncremental”.

The parameter to define whether to keep zeros is “keepZeroCells” which can be “true” or “false”.

I went for the option of merging all slices in the main cube and to remove zero values. Before running the REST request, I checked the number of incremental slices in Calculation Manager.

After executing executing the REST call, all the incremental slices were merged into the main cube.

On to the final REST resource and that is based around ASO aggregations to optimise performance.

The documentation states:

“Optimize Aggregation can significantly improve the performance of aggregate storage cubes. You must first enable query tracking to capture tracking statistics on the aggregate storage cube. Then, after you enable query tracking, you must allow sufficient time to collect user data-retrieval patterns before you execute the aggregation process based on query data. The execute aggregation process deletes existing aggregated views and generates optimized views based on the captured data.

This job has two actions: Enable query tracking and Execute aggregation process.”

This can be manually achieved through Calculation Manager and in database properties there are the options to start query tracking and execute an aggregation.

No surprise that there is an option available in scheduled jobs in the simplified interface.

The job allows you to select the ASO cube and whether to enable query tracking or execute an aggregation process.

If the option to execute an aggregation process is selected, there are additional parameters to base the aggregation on.

The option to base the aggregation on query data should only be selected if query tracking is enabled.

The same functionality is now available through the REST API.

This is possible through the jobs resource URL with a POST method.

The “jobType” is “Optimize Aggregation”.

The parameters include the cube name, "type" with possible values of “enableQueryTracking” or “executeAggregationProcess”.

If type has been defined as “executeAggregationProcess” the following parameters can be included:

“useQueryData” which can be set as “true” or “false”, it can only be true if query tracking has been enabled.

“includeAlternateRollups” which can be defined as “enable” or “disable”

“growthSizeRatio” which is optional and specifies the maximum size the aggregated database can grow, which is based on the ratio and the input level data size.

The above REST request enables query tracking and the response includes the job information.

The job status URL can then be accessed to keep checking whether the process has completed.

This information will also be available in jobs in the simplified interface.

After query tracking has been enabled and sufficient retrievals on the data has taken placed, the cube can be aggregated based on the query tracking using the following REST request.

Once completed, the response from the job status URL will be something along the lines of:

In the following example, query tracking has not been enabled, an aggregation is to be executed and the maximum size will be 10% greater than the input level data.

The job status URL based on the job ID can then be requested until the job completes.

Automating this process would only require a slight modification to the example scripts I provided earlier in this blog.

I am going to leave it there for this post as I think that covers all the new REST API functionality.

The following is now possible through the REST API:

- Set administration mode for an application.

- Perform a full restructure of a block storage cube to eliminate or reduce fragmentation.

- Compact the outline file of an aggregate storage cube.

- Merge incremental data slices of an aggregate storage cube to improve performance.

- Optimise aggregation to improve the performance of aggregate storage cubes.

Up to now, the functionality has only been available either by manually applying or creating a job which can be rescheduled or run straight away. With the REST API you don’t have to be confined to these restrictions.

In the post I am going to through each of the new REST resources, providing an example with a REST client and a simple script.

Let us start off with the one I feel will be the most welcomed - the ability to change the administration mode of an application. I have blogged about this a couple of times in the past, the first was when the only way to automate the process was to either run a refresh database and change the administration mode or change the mode through migration files. The second post looked at the schedule job feature which was introduced in 18.05

It is possible to change an application between administrators only and all users through the UI and this is under application settings.

It is also possible to create a schedule job with the “Administration Mode” option.

This can be run immediately or scheduled and allows the application to be enabled for administrator or all users.

Moving on to the REST API, all the new functionality is available through the jobs resource which has the URL format of:

https://<epm_cloud_instance>/HyperionPlanning/rest/v3/applications/<application_name>/jobs

A POST method is required, and the body of the post must include the job details in JSON format.

The JSON should include:

jobType which will always be “Administration Mode”

jobName just provides a description of the job and can be named appropriately.

loginLevel is a parameter that defines which mode to place the application in, possible values are either “Administrators” or “All Users”.

An example to put the application in application mode using a REST client is as follows:

The response from the REST request includes the status, job ID, details and a URL to access the job information.

The application mode change should be instant so there will no need to keep checking the status until it is complete. The job will be available in the simplified interface and contain the value that was defined in “jobName” parameter.

Under application settings the application is now only enabled for administrators.

If non-administrators try and log into the application, they will be greeted with:

A simple change to the “loginLevel” parameter will update the application mode back to all users, the REST request would be:

The response is similar except for the “details” parameter description.

Once again, the job activity will be available in the UI.

The application will be enabled for all users in the application settings.

The body of the REST request only allows two values for “loginLevel”, if a different value is specified then the following error will be returned in the response:

The reality is, the process of updating the administration mode of an application would need to be automated, this can be achieved using most scripting languages.

It can come down to personal preference or company policies to which language you select. The following is an example using PowerShell which does not take much effort to put together.

As the new REST functionality is all based around the jobs resource the script can be easily reused.

To update the application mode to all users, the only changes would be to the “jobName” and “loginLevel” variables.

After making the changes, running the script will enable the application for all users.

On to the next new welcomed feature and that is the ability to restructure a cube using the REST API.

It is possible to restructure a cube manually through database properties in Calculation Manager.

This can be automated by selecting the “Restructure Cube” option when creating a new job schedule.

The cube which requires restructuring can be selected in the schedule job options.

To achieve this through the REST API it is the same job resource URL and POST method.

This time the “jobType” will be “Restructure Cube”, the “jobName” can be named appropriately and “cubeName” is required to define which cube to restructure.

As a restructure is not instant, the response will no doubt inform that the process is running.

The status values are -1 = in progress; 0 = success; 1 = error; 2 = cancel pending; 3 = cancelled; 4 = invalid parameter

The response includes a URL to the job status, which can then be accessed to check whether the process has been completed.

The simplified interface contains the job activity.

To automate the process the same script can be reused, it only requires a few modifications for the job information which is included in the body of the REST request.

In previous blogs I have gone into more detail around repeatedly checking the status of the job, but in simple terms the job status URL which is returned in the REST response can be stored, with a GET request the job status can then be checked.

To confirm a restructure had taken place, before running the script I created some empty blocks in the cube.

After running the script, the empty blocks had been removed which confirms a restructure had taken place.

Moving on to the three remaining optimisation features, all of which are ASO related.

The first of these is the ability to compact the outline of an ASO cube. It is possible to compact the outline through database properties in Calculation Manager.

There is also a “Compact Outline” option in scheduled jobs in the simplified interface.

This provides a brief description of the functionality and allows you to define which cube to compact the outline.

If you don’t want to use the scheduling functionality in the UI, it is now possible to use the REST API.

As with all the new functionality, the same jobs URL is used and a similar body in the REST request.

The “jobType” will be “Compact Cube”, not sure why it wasn’t named “Compact Outline”. The “cubeName” parameter defines which cube to compact the outline.

The response includes the same parameters as the previous examples.

As compacting the outline is not instant, the job status will need to be repeatedly checked until it completes.

As you would expect the activity is available in the UI.

Again, the same script can be reused with a few minor alterations for the body of the REST request.

Next onto to merging data slices in an ASO cube. Data slices are automated created with actions like Smart View submits, data maps, smart pushes and data loads. Over time, the number of slices will increase, which can adversely affect performance. The slices can be either be merged into a single incremental slice or into the main cube, keeping in mind it does take longer to merge into the main cube and the time it takes relates to the size of the cube. When merging, zero values can be kept or removed.

Merging slices can be manually carried out through database properties in Calculation Manager.

To automate the process, there is a “Merge Data Slices” option when creating a new scheduled job in the simplified interface.

The job allows you to define which ASO database you wish to merge slices and whether to merge into the main database or a single incremental slice, zero values can be kept or removed.

If you don’t want to be constrained to scheduling a job, then this is where the REST API can help.

The REST API resource is the same jobs URL.

The “jobType” should be “Merge Data Slices”. The “cubeName” defines which cube to merge the slices.

There is a parameter called “mergeSliceType” which defines whether to merge into the main cube or incremental slice. The values are either “allIncrementalSlicesInMain” or “allIncrementalSlicesInOneIncremental”.

The parameter to define whether to keep zeros is “keepZeroCells” which can be “true” or “false”.

I went for the option of merging all slices in the main cube and to remove zero values. Before running the REST request, I checked the number of incremental slices in Calculation Manager.

After executing executing the REST call, all the incremental slices were merged into the main cube.

On to the final REST resource and that is based around ASO aggregations to optimise performance.

The documentation states:

“Optimize Aggregation can significantly improve the performance of aggregate storage cubes. You must first enable query tracking to capture tracking statistics on the aggregate storage cube. Then, after you enable query tracking, you must allow sufficient time to collect user data-retrieval patterns before you execute the aggregation process based on query data. The execute aggregation process deletes existing aggregated views and generates optimized views based on the captured data.

This job has two actions: Enable query tracking and Execute aggregation process.”

This can be manually achieved through Calculation Manager and in database properties there are the options to start query tracking and execute an aggregation.

No surprise that there is an option available in scheduled jobs in the simplified interface.

The job allows you to select the ASO cube and whether to enable query tracking or execute an aggregation process.

If the option to execute an aggregation process is selected, there are additional parameters to base the aggregation on.

The option to base the aggregation on query data should only be selected if query tracking is enabled.

The same functionality is now available through the REST API.

This is possible through the jobs resource URL with a POST method.

The “jobType” is “Optimize Aggregation”.

The parameters include the cube name, "type" with possible values of “enableQueryTracking” or “executeAggregationProcess”.

If type has been defined as “executeAggregationProcess” the following parameters can be included:

“useQueryData” which can be set as “true” or “false”, it can only be true if query tracking has been enabled.

“includeAlternateRollups” which can be defined as “enable” or “disable”

“growthSizeRatio” which is optional and specifies the maximum size the aggregated database can grow, which is based on the ratio and the input level data size.

The above REST request enables query tracking and the response includes the job information.

The job status URL can then be accessed to keep checking whether the process has completed.

This information will also be available in jobs in the simplified interface.

After query tracking has been enabled and sufficient retrievals on the data has taken placed, the cube can be aggregated based on the query tracking using the following REST request.

Once completed, the response from the job status URL will be something along the lines of:

In the following example, query tracking has not been enabled, an aggregation is to be executed and the maximum size will be 10% greater than the input level data.

The job status URL based on the job ID can then be requested until the job completes.

Automating this process would only require a slight modification to the example scripts I provided earlier in this blog.

I am going to leave it there for this post as I think that covers all the new REST API functionality.