I was recently asked whether Data Management could display the alias for account members where the source is Oracle Financials. The reason behind the request was the users found it easier to understand and map data based on the source Financials account alias.

I am going to go through a possible solution and lead on to extracting data from a source application and producing an export file with alias members.

This post is going to be based on EPM Cloud but most of it can be replicated with on-premise FDMEE.

At first, I thought maybe the alias information was being pulled through from the source. If all columns are displayed in the workbench there is quite a bit of additional information available which is held in attribute columns.

This does not include any member aliases though.

In the source filter of the load rule there is an option to define the members to extract data for.

If the filter condition is selected this will open the member selector. Selecting List View will display the member and description.

So the type of member information I am after is being stored. Populating the member information is controlled by selecting “Refresh Members” from the target application.

It is a shame that the source values can not be selected in the Data Load Mappings even though they are being stored, it is only possible to select target values.

Anyway, the member information is being stored in a database table named “AIF_TARGET_APPL_MEMBERS”. There is no access to this table in EPM Cloud, but it is possible to track down the details in on-premise.

There is currently no scripting available in Data Management, but there is an option to use SQL mappings, which means it should be possible to query the member name and return the alias.

To achieve this, you could add a lookup dimension to the target application. This dimension will hold the account alias members. As it is a lookup dimension it will not be loaded to the target.

The lookup dimension does not need to be mapped in the import format.

A SQL mapping can be added to the lookup dimension. The SQL will return the alias member based on the source account member.

I am sure the SQL could be improved, this is a simple example of how to go about it, and I am not saying it is the only or right way.

Once the mapping has been created the validation can be run again.

Now the data in the workbench contains the Financials account and alias. To keep the source information up to date then it is important to run refresh members.

This leads me on to a question that has been raised on numerous occasions; which is whether it is possible to extract data to a file, but populate with member alias instead of member name?

Based on the logic that I have just gone through then it should be possible. Currently in the cloud or on-premise there is no option to extract data by member alias, yes you can argue that mappings could be added to map the members in the extracted data to alias. This would require a process to keep them in sync and there is nothing wrong with going down that route. I just want to provide an alternative.

So, let us go through an example of extracting data from a source EPM Cloud Planning application and generate an output file including member alias.

I am going to base it on the “Data Export to File” which I went through in a previous blog post, if you are not aware of all the functionality it offers then have a read of the post. It should also be possible to use a custom target application.

I have created my target application which includes a dimension for account alias.

As with my previous example, I have added a SQL mapping to map the member name to alias.

In the data load rule, I have defined a download file name. You don’t have to do this if you are happy with downloading the .dat file version.

Once the rule has been run, the data in the workbench includes the account alias.

The output file can be downloaded from Process Details, the filename will be in the format of

<TARGET_APPLICATION>_ <PROCESS_ID>.dat

As a filename was specified in the data load rule options, it can also be downloaded from the inbox/output explorer.

Alternatively, it can be downloaded using EPM Automate or REST.

The target file includes a column for account member name and alias.

If I only wanted to include the member alias the target application would only need to contain the required dimensions.

A similar SQL mapping can be added for each dimension.

The workbench includes both the source member and the alias as the target.

The output file will only contain the member aliases. I also defined pivot by period in the data load rule options.

Nice. How about automating refreshing the member information so it is in sync with the source application.

This is possible using REST. A post request can be made containing the application name in the body.

Unfortunately, this doesn’t work for Oracle Financials as ‘refresh members’ is processed in a different way, it should work for most EPM type applications.

This can be scripted and automated using a language of your choice.

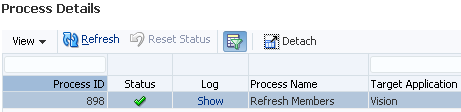

After executing the script, you will be able to validate the refresh members process has been run in Process Details.

If you have Enterprise planning, you can use Groovy and the REST API to run the refresh members process. I wrote a post detailing how to use the REST API with Groovy which you can read all about here.

In summary a Groovy business rule can be created which makes a REST call.

Once deployed the rule will be available in planning.

After running, Job Details contains some of the response from the REST call.

The process ID can be matched to confirm the refresh members process has been run.

To keep the member information in sync then the rule can be scheduled to run on a regular basis.

This means that when the data extract is run it will be populated with up to date member aliases.

I am going to leave it there, hopefully you have found this useful. Until next time…

I am going to go through a possible solution and lead on to extracting data from a source application and producing an export file with alias members.

This post is going to be based on EPM Cloud but most of it can be replicated with on-premise FDMEE.

At first, I thought maybe the alias information was being pulled through from the source. If all columns are displayed in the workbench there is quite a bit of additional information available which is held in attribute columns.

This does not include any member aliases though.

In the source filter of the load rule there is an option to define the members to extract data for.

If the filter condition is selected this will open the member selector. Selecting List View will display the member and description.

So the type of member information I am after is being stored. Populating the member information is controlled by selecting “Refresh Members” from the target application.

It is a shame that the source values can not be selected in the Data Load Mappings even though they are being stored, it is only possible to select target values.

Anyway, the member information is being stored in a database table named “AIF_TARGET_APPL_MEMBERS”. There is no access to this table in EPM Cloud, but it is possible to track down the details in on-premise.

There is currently no scripting available in Data Management, but there is an option to use SQL mappings, which means it should be possible to query the member name and return the alias.

To achieve this, you could add a lookup dimension to the target application. This dimension will hold the account alias members. As it is a lookup dimension it will not be loaded to the target.

The lookup dimension does not need to be mapped in the import format.

A SQL mapping can be added to the lookup dimension. The SQL will return the alias member based on the source account member.

I am sure the SQL could be improved, this is a simple example of how to go about it, and I am not saying it is the only or right way.

Once the mapping has been created the validation can be run again.

Now the data in the workbench contains the Financials account and alias. To keep the source information up to date then it is important to run refresh members.

This leads me on to a question that has been raised on numerous occasions; which is whether it is possible to extract data to a file, but populate with member alias instead of member name?

Based on the logic that I have just gone through then it should be possible. Currently in the cloud or on-premise there is no option to extract data by member alias, yes you can argue that mappings could be added to map the members in the extracted data to alias. This would require a process to keep them in sync and there is nothing wrong with going down that route. I just want to provide an alternative.

So, let us go through an example of extracting data from a source EPM Cloud Planning application and generate an output file including member alias.

I am going to base it on the “Data Export to File” which I went through in a previous blog post, if you are not aware of all the functionality it offers then have a read of the post. It should also be possible to use a custom target application.

I have created my target application which includes a dimension for account alias.

As with my previous example, I have added a SQL mapping to map the member name to alias.

In the data load rule, I have defined a download file name. You don’t have to do this if you are happy with downloading the .dat file version.

Once the rule has been run, the data in the workbench includes the account alias.

The output file can be downloaded from Process Details, the filename will be in the format of

<TARGET_APPLICATION>_ <PROCESS_ID>.dat

As a filename was specified in the data load rule options, it can also be downloaded from the inbox/output explorer.

Alternatively, it can be downloaded using EPM Automate or REST.

The target file includes a column for account member name and alias.

If I only wanted to include the member alias the target application would only need to contain the required dimensions.

A similar SQL mapping can be added for each dimension.

The workbench includes both the source member and the alias as the target.

The output file will only contain the member aliases. I also defined pivot by period in the data load rule options.

Nice. How about automating refreshing the member information so it is in sync with the source application.

This is possible using REST. A post request can be made containing the application name in the body.

Unfortunately, this doesn’t work for Oracle Financials as ‘refresh members’ is processed in a different way, it should work for most EPM type applications.

This can be scripted and automated using a language of your choice.

After executing the script, you will be able to validate the refresh members process has been run in Process Details.

If you have Enterprise planning, you can use Groovy and the REST API to run the refresh members process. I wrote a post detailing how to use the REST API with Groovy which you can read all about here.

In summary a Groovy business rule can be created which makes a REST call.

Once deployed the rule will be available in planning.

After running, Job Details contains some of the response from the REST call.

The process ID can be matched to confirm the refresh members process has been run.

To keep the member information in sync then the rule can be scheduled to run on a regular basis.

This means that when the data extract is run it will be populated with up to date member aliases.

I am going to leave it there, hopefully you have found this useful. Until next time…