It has been a quiet year up to now for new functionality in EPM Cloud, but that has all changed with the 19.05 release. In this post I am going to concentrate on the new features that have made their way to the REST API and EPM Automate.

There are new REST API jobs to import and export security, export audit records and the job console, and finally the ability to sort dimension members. Not all of these are available in EPM Automate yet but no doubt they will be included in future releases.

In the post I am going to go through each of the new REST resources, providing an example using a REST client and a simple script which can easily be updated for the different job types. I have covered the REST API many times in previous posts, so I am going to assume that you understand the basics.

Let us begin with the resources that I feel will be most welcomed – the ability to import and export access permissions. If you would like detailed information on access permissions, please refer to the documentation.

All the new REST API functionality is available through the jobs resource, which uses a post method and has the URL format of:

https://<epm_cloud_instance>/HyperionPlanning/rest/v3/applications/<application_name>/jobs

The body or payload of the REST request is where the job information is defined using parameters.

So, let us take an example of exporting access permissions, this can simply be achieved with the following:

The “jobType” parameter for exporting access permissions should be “Export Security”

The “jobName” parameter can be anything relevant, it is just the name that is going to be shown in the job console.

The “filename” parameter is optional, if it is not supplied, a CSV file will be created with a name containing the administrator who ran the request, description and current date/time.

Once you have the basic authentication details you are ready to execute the request.

After the request has been executed, a response will be returned containing all the job information.

A status of -1 indicates the job is in progress, to keep checking the job status, a get request can be made which includes the job ID.

This time the job has completed, and the export was successful. You can see that the filename I provided has been automatically generated as a CSV file.

The file is available within the EPM Cloud instance and can be seen through the UI in the inbox/outbox explorer.

If I had executed the REST request without the filename parameter:

The file would have been generated with the following naming convention:

The file can be downloaded from the UI or by using EPM Automate or using the REST API, an example being:

As the file is a CSV type, it can be opened and viewed in a much more readable format through Excel, or a similar application.

Most of the headings should be self-explanatory, the name column is the name of the user or group, the “Is User” column defines whether it is a user or a group.

The object type is an artefact within the application and can be any of the following:

The access mode is the inheriting access permission, this can be: MEMBER, CHILDREN, @ICHILDREN, @DESCENDANTS or @IDESCENDANTS. The documentation provides further details on the these.

I will provide details on the “Remove” column later in this post.

In the previous example the access permissions were exported for all users and groups. It is possible to restrict the export to specified users or groups.

To export access by group the “exportGroups” parameter can be used and assigned with a list of comma separated groups.

The above example will export access permissions for only the “Analyst” and “Reviewer” groups.

To export access permissions by user, the “exportUsers” parameter can be used and defined by a list of comma separated users.

The above example exports security for a single user.

To automate the process of exporting the access permissions, you can easily convert this into script. Most programming languages support REST in some form so pick the one you feel most comfortable with.

In the following PowerShell script, a REST request is made to export access permissions for a group named “Planner”.

Once the REST request has been made the status can be repeatably checked until it changes from progressing.

Finally, a separate REST resource can be executed to download the file from the EPM Cloud instance.

The file that has been downloaded contains the access permissions for the “Planner” group.

The script can be modified to work with any of the REST API job resources contained in this post.

Moving on to importing access permissions and I will start off with a CSV file that defines permissions for a user and group.

The “Planner” group will be defined with read access to the form folder “Long Range Plan”.

The user will be granted read/write permissions to the “Total Entity” member and all its descendants.

To demonstrate the “Total Entity” member has no user access defined.

The “Long Range Plan” form folder has no group access defined.

The CSV file must first be uploaded to the Cloud instance and this can be achieved using a REST resource, an example being:

Once the request has been successfully executed the file should be available in the Cloud instance.

The access permissions can then be imported using the REST resource with the same jobs URL and post method.

The “jobType” is “Import Security. The “jobName” can be anything relevant.

Just like with the export security resource the “filename” is optional.

Once the request has been sent, a response containing the job information is returned.

This can then be checked by including the job ID in the URL.

The import failed as the filename was incorrect. The filename is case sensitive, and I incorrectly set the first character as upper case.

After rectifying the filename, the import was successful.

The response includes the details of how many records were imported and if any were ignored.

To confirm the import, the form folder was correctly assigned with the group.

The member permissions were assigned to the user.

It is possible to remove access permissions, and this can be achieved by setting the value in the remove column to “Y”.

In the following example I am going to remove access to the “Planner” group for the member “BS”.

Currently access has been assigned to the group.

The CSV file was uploaded, and the access permissions imported using the REST resource.

The response confirms 1 record was successfully imported.

Checking in the UI confirms the access permissions have been removed for the group.

On to the next REST resource and that is the ability to export audit records. Please note that you will have to enable auditing in the UI if you have not already done so.

Once again, the URL and method are the same.

The “jobType” parameter should be “Export Audit”. “JobName” can be anything relevant you decide on.

The “filename” parameter is optional. If it is not included in the body of the request it will be generated in the same format as previously explained, the only difference is the filename will include “AuditRecords”.

The “ndays” parameter defines the number of days which audit records should be exported. The values can be 1,2,7,30,60,180 or All. If not included the default is 7 days.

Once the request has been sent the audit records will be exported and a zip file produced which can be downloaded.

The zip file contains a csv file with the same name.

The CSV file is in the same format as that viewed in the UI.

It is possible to export audit data for specified users.

The “userNames” parameter can contain a comma separated list of users.

The generated CSV file will contain only audit records for the specified user(s).

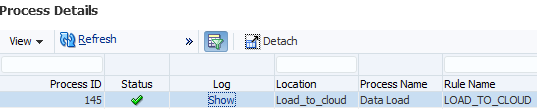

Next REST resource allows the export of the job console information.

The “jobType” should be “JOBCONSOLE_EXPORT”. Define “jobType” to anything you want.

Again the “filename” parameter is optional. If it is not included in the body of the request it will be generated in the same format as previously explained, the only difference is the filename will include “PlanningJobConsoleRecords”.

Just like with the other resources the job can be repeatedly checked until the status changes.

A zip file will be created which can be downloaded using either the UI, EPM Automate or REST API.

Unless specified with parameters in the body of the REST request the only job console information exported will be for rules and with a completed status.

By default, 7 days of job console information will be exported. This can be changed using the “ndays” parameter. The possible values can be 1,2,7,30,60,180 or All.

You can define the job types included in the export with the “jobTypes” parameter. This can be a comma separated list, please refer to the documentation for the full list.

In the above example, job console information will be exported for all records and job types.

To export the job console information for importing and exporting security the following parameter can be defined in the body of the request.

This will produce a CSV file with job console information for only import and export security jobs.

It is possible to restrict the export to the job name.

In this example the job console information is only exported for jobs that have been named “MTL Import Security” and “MTL Export Security”.

By default, only jobs with a completed status are exported. This can be changed by using the “jobStatusCodes” parameter and a list of comma separated codes.

The possible values are:

In this example, job console information has been exported only for jobs that have errored.

In the zip file that is produced there is a folder called “errorlogs”.

This contains additional error files that match by job ID.

So for the job ID 18 the error file includes the following information.

If the error files are not required, the “exportErrorDetails” parameter can be used with a value of “false”.

This means the folder in the zip file will not include the error files.

On to the final new REST API resource and this allows dimension members to be sorted.

The same jobs URL and method are used.

The “jobType” should be “Sort Members” and the “jobName” can be set to anything relevant.

The “member” parameter is the parent member whose children or descendants are being sorted.

By default, the sort will be applied to the children of the specified member and in ascending order.

The above example will sort all the children of member “SortMe” in ascending order.

Just like with all the other resources the job status can be checked.

The children of “SortMe” have been sorted in ascending order.

Now let’s add some children to the “Z_Product” member.

The body of the REST request has been updated to include the “order” and “type” parameters.

The descendants of member “SortMe” have been sorted in descending order.

This sort functionality has also been added to EPM Automate with the “sortmember” command.

As you would expect it is very simple to use and the command has the following syntax.

The following will sort the descendants of the member “SortMe” in ascending order.

I didn’t need to include the “order” parameter as the default is to sort in ascending order.

Another new command to be introduced into EPM Automate allows the application to be put into administration mode or returned to all users.

This functionality has been available in the REST API for a while, I covered this in a previous blog which you can read about here.

The new EPM Automate command is named “ApplicationAdminMode” and only requires either “true” or “false” to be included.

Before running the command, the application is available for all users.

Running the command with the “true” parameter puts the application into administrator mode.

This is confirmed by checking the settings section in the UI.

The application can be returned to all users by issuing the command with the “false” parameter.

The application is now all enabled for all users.

That completes my look at all the new REST API functionality and some of the more useful new EPM Automate commands.

I hope you have found it useful!

There are new REST API jobs to import and export security, export audit records and the job console, and finally the ability to sort dimension members. Not all of these are available in EPM Automate yet but no doubt they will be included in future releases.

In the post I am going to go through each of the new REST resources, providing an example using a REST client and a simple script which can easily be updated for the different job types. I have covered the REST API many times in previous posts, so I am going to assume that you understand the basics.

Let us begin with the resources that I feel will be most welcomed – the ability to import and export access permissions. If you would like detailed information on access permissions, please refer to the documentation.

All the new REST API functionality is available through the jobs resource, which uses a post method and has the URL format of:

https://<epm_cloud_instance>/HyperionPlanning/rest/v3/applications/<application_name>/jobs

The body or payload of the REST request is where the job information is defined using parameters.

So, let us take an example of exporting access permissions, this can simply be achieved with the following:

The “jobType” parameter for exporting access permissions should be “Export Security”

The “jobName” parameter can be anything relevant, it is just the name that is going to be shown in the job console.

The “filename” parameter is optional, if it is not supplied, a CSV file will be created with a name containing the administrator who ran the request, description and current date/time.

Once you have the basic authentication details you are ready to execute the request.

After the request has been executed, a response will be returned containing all the job information.

A status of -1 indicates the job is in progress, to keep checking the job status, a get request can be made which includes the job ID.

This time the job has completed, and the export was successful. You can see that the filename I provided has been automatically generated as a CSV file.

The file is available within the EPM Cloud instance and can be seen through the UI in the inbox/outbox explorer.

If I had executed the REST request without the filename parameter:

The file would have been generated with the following naming convention:

The file can be downloaded from the UI or by using EPM Automate or using the REST API, an example being:

As the file is a CSV type, it can be opened and viewed in a much more readable format through Excel, or a similar application.

Most of the headings should be self-explanatory, the name column is the name of the user or group, the “Is User” column defines whether it is a user or a group.

The object type is an artefact within the application and can be any of the following:

- SL_FORM – Form

- SL_COMPOSITE - Composite Form

- SL_TASKLIST – Tasklist

- SL_CALCRULE – Rule

- SL_FORMFOLDER - Form Folder

- SL_CALCFOLDER - Rule Folder

- SL_DIMENSION – Dimension

- SL_REPORT – Financial Report

- SL_REPORTSSHOT – Financial Report Snapshot

The access mode is the inheriting access permission, this can be: MEMBER, CHILDREN, @ICHILDREN, @DESCENDANTS or @IDESCENDANTS. The documentation provides further details on the these.

I will provide details on the “Remove” column later in this post.

In the previous example the access permissions were exported for all users and groups. It is possible to restrict the export to specified users or groups.

To export access by group the “exportGroups” parameter can be used and assigned with a list of comma separated groups.

The above example will export access permissions for only the “Analyst” and “Reviewer” groups.

To export access permissions by user, the “exportUsers” parameter can be used and defined by a list of comma separated users.

The above example exports security for a single user.

To automate the process of exporting the access permissions, you can easily convert this into script. Most programming languages support REST in some form so pick the one you feel most comfortable with.

In the following PowerShell script, a REST request is made to export access permissions for a group named “Planner”.

Once the REST request has been made the status can be repeatably checked until it changes from progressing.

Finally, a separate REST resource can be executed to download the file from the EPM Cloud instance.

The file that has been downloaded contains the access permissions for the “Planner” group.

The script can be modified to work with any of the REST API job resources contained in this post.

Moving on to importing access permissions and I will start off with a CSV file that defines permissions for a user and group.

The “Planner” group will be defined with read access to the form folder “Long Range Plan”.

The user will be granted read/write permissions to the “Total Entity” member and all its descendants.

To demonstrate the “Total Entity” member has no user access defined.

The “Long Range Plan” form folder has no group access defined.

The CSV file must first be uploaded to the Cloud instance and this can be achieved using a REST resource, an example being:

Once the request has been successfully executed the file should be available in the Cloud instance.

The access permissions can then be imported using the REST resource with the same jobs URL and post method.

The “jobType” is “Import Security. The “jobName” can be anything relevant.

Just like with the export security resource the “filename” is optional.

Once the request has been sent, a response containing the job information is returned.

This can then be checked by including the job ID in the URL.

The import failed as the filename was incorrect. The filename is case sensitive, and I incorrectly set the first character as upper case.

After rectifying the filename, the import was successful.

The response includes the details of how many records were imported and if any were ignored.

To confirm the import, the form folder was correctly assigned with the group.

The member permissions were assigned to the user.

It is possible to remove access permissions, and this can be achieved by setting the value in the remove column to “Y”.

In the following example I am going to remove access to the “Planner” group for the member “BS”.

Currently access has been assigned to the group.

The CSV file was uploaded, and the access permissions imported using the REST resource.

The response confirms 1 record was successfully imported.

Checking in the UI confirms the access permissions have been removed for the group.

On to the next REST resource and that is the ability to export audit records. Please note that you will have to enable auditing in the UI if you have not already done so.

Once again, the URL and method are the same.

The “jobType” parameter should be “Export Audit”. “JobName” can be anything relevant you decide on.

The “filename” parameter is optional. If it is not included in the body of the request it will be generated in the same format as previously explained, the only difference is the filename will include “AuditRecords”.

The “ndays” parameter defines the number of days which audit records should be exported. The values can be 1,2,7,30,60,180 or All. If not included the default is 7 days.

Once the request has been sent the audit records will be exported and a zip file produced which can be downloaded.

The zip file contains a csv file with the same name.

The CSV file is in the same format as that viewed in the UI.

It is possible to export audit data for specified users.

The “userNames” parameter can contain a comma separated list of users.

The generated CSV file will contain only audit records for the specified user(s).

Next REST resource allows the export of the job console information.

The “jobType” should be “JOBCONSOLE_EXPORT”. Define “jobType” to anything you want.

Again the “filename” parameter is optional. If it is not included in the body of the request it will be generated in the same format as previously explained, the only difference is the filename will include “PlanningJobConsoleRecords”.

Just like with the other resources the job can be repeatedly checked until the status changes.

A zip file will be created which can be downloaded using either the UI, EPM Automate or REST API.

Unless specified with parameters in the body of the REST request the only job console information exported will be for rules and with a completed status.

By default, 7 days of job console information will be exported. This can be changed using the “ndays” parameter. The possible values can be 1,2,7,30,60,180 or All.

You can define the job types included in the export with the “jobTypes” parameter. This can be a comma separated list, please refer to the documentation for the full list.

In the above example, job console information will be exported for all records and job types.

To export the job console information for importing and exporting security the following parameter can be defined in the body of the request.

This will produce a CSV file with job console information for only import and export security jobs.

It is possible to restrict the export to the job name.

In this example the job console information is only exported for jobs that have been named “MTL Import Security” and “MTL Export Security”.

By default, only jobs with a completed status are exported. This can be changed by using the “jobStatusCodes” parameter and a list of comma separated codes.

The possible values are:

- 1 Processing: Current jobs under execution

- 2 Completed: Successfully completed jobs

- 3 Error: Jobs failed with errors

- 4 Unknown: Jobs completed with unknown status

- 5 Completed with Threshold Violation: Jobs completed with threshold violation status

- 6 Pending Cancel: Jobs with Pending cancel status

- 7 Cancelled: Cancelled jobs

- 8 Completed with Errors: Jobs completed with errors

- 9 Completed with Warnings: Jobs completed with warnings

- All Export the jobs with any status

In this example, job console information has been exported only for jobs that have errored.

In the zip file that is produced there is a folder called “errorlogs”.

This contains additional error files that match by job ID.

So for the job ID 18 the error file includes the following information.

If the error files are not required, the “exportErrorDetails” parameter can be used with a value of “false”.

This means the folder in the zip file will not include the error files.

On to the final new REST API resource and this allows dimension members to be sorted.

The same jobs URL and method are used.

The “jobType” should be “Sort Members” and the “jobName” can be set to anything relevant.

The “member” parameter is the parent member whose children or descendants are being sorted.

By default, the sort will be applied to the children of the specified member and in ascending order.

The above example will sort all the children of member “SortMe” in ascending order.

Just like with all the other resources the job status can be checked.

The children of “SortMe” have been sorted in ascending order.

Now let’s add some children to the “Z_Product” member.

The body of the REST request has been updated to include the “order” and “type” parameters.

The descendants of member “SortMe” have been sorted in descending order.

This sort functionality has also been added to EPM Automate with the “sortmember” command.

As you would expect it is very simple to use and the command has the following syntax.

The following will sort the descendants of the member “SortMe” in ascending order.

I didn’t need to include the “order” parameter as the default is to sort in ascending order.

Another new command to be introduced into EPM Automate allows the application to be put into administration mode or returned to all users.

This functionality has been available in the REST API for a while, I covered this in a previous blog which you can read about here.

The new EPM Automate command is named “ApplicationAdminMode” and only requires either “true” or “false” to be included.

Before running the command, the application is available for all users.

Running the command with the “true” parameter puts the application into administrator mode.

This is confirmed by checking the settings section in the UI.

The application can be returned to all users by issuing the command with the “false” parameter.

The application is now all enabled for all users.

I hope you have found it useful!