So what does this mean, well say you have a data store of some description and the data gets automatically incremented, instead of having to load all the data into the database at a set interval you can use ODI to capture the additional data and with the use of a package monitor when the change occurs resulting in a load executing.

In ODI terms this process is known as Changed Data Capture (CDC), CDC is performed by journalizing models. Journalizing a model consists of setting up the components to capture the changes (inserts, updates and deletes) made to the records of the models datastores.

ODI has two journalizing modes

• Simple Journalizing tracks changes in individual datastores in a model.

• Consistent Set Journalizing tracks changes to a group of the model's datastores, taking into account the referential integrity between these datastores.

The journalizing components are :

• Journals: Where changes are recorded. Journals only contain references to the changed

records along with the type of changes (insert/update, delete).

• Capture processes: Journalizing captures the changes in the source datastores either by

creating triggers on the data tables.

• Subscribers: CDC uses a publish/subscribe model. Subscribers are entities (applications,

integration processes, etc) that use the changes tracked on a datastore or on a consistent set.

They subscribe to a model's CDC to have the changes tracked for them. Changes are

captured only if there is at least one subscriber to the changes. When all subscribers have

consumed the captured changes, these changes are discarded from the journals.

• Journalizing views: Provide access to the changes and the changed data captured. They are

used by the user to view the changes captured, and by integration processes to retrieve the

changed data.

Ok, as usual I don’t like to get too bogged down in lengthy spiel so I think we will get right into how CDC can be set up and what is it actually doing, now I understand this topic may have been covered by others in different forms but I wanted to write about in a way that most could understand and relate it to the essbase/planning world.

To keep everything simple I will be using the sample.basic essbase database as the target, the source will be a SQL server db table, I am also going to assume that you have enough understanding about the concepts of ODI, if you don’t then I suggest reading through previously blogs.

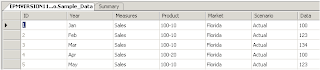

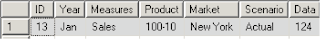

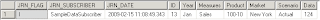

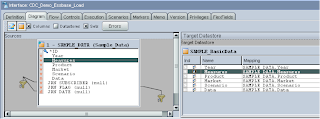

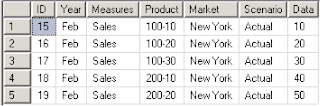

The above table will be used as the source and any changes to this table will need to be captured, before you use CDC on a table make sure it has a primary key otherwise you won’t be able to use it.

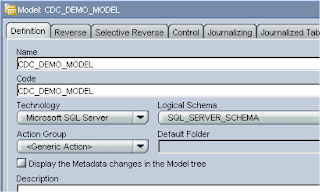

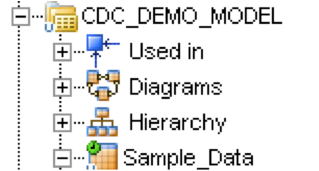

First the Model is created that is going to hold the journalized tables.

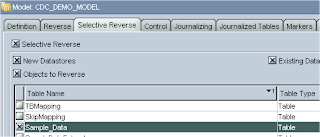

The table that is going to be monitored for changes is selected and reversed.

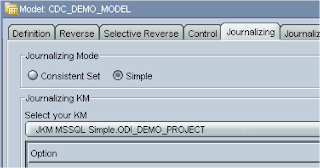

The next step will be to choose the Journalizing Knowledge Module, if you are using SQL server you will need to have imported in the following KMs

- JKM MSSQL Consistent

- JKM MSSQL Simple

If you are using Oracle there are a number of KMs available depending version.

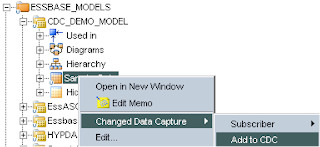

The next step is to flag the datastore that is going to be journalized, right click the datastore and select “Changed Data Capture” > Add to CDC

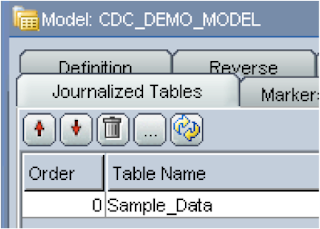

If you edit your model again and choose the “Journalized Tables” tab the datastore should be visible.

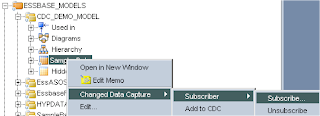

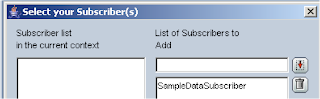

A subscriber (described earlier) should be added next, to do so right click the datastore > “Changed Data Capture” > Subscriber > Subscribe

The name of the subscriber can be anything that seems relevant.

Once you click ok then a session is executed and as this is the first time a subscriber has been created then a db table (SNP_SUBSCRIBERS) is created and populated with the subscriber information.

Ok, now the journal can be started that will capture the changes. Right click the datastore > “Changed Data Capture” > “Start Journal”, select the subscriber and another session will be started; this will create the required journal tables, views and triggers.

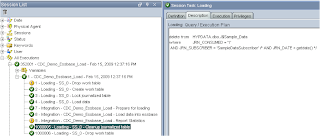

I will try and explain the details of what happens in the session:-

Two triggers (DT$<CDC table> & UT$<CDC table>) are created on the table that is going to be monitored, so in my example UT$Sample_Data & DT$Sample, one trigger will monitor for any updates or inserts and the other monitors deletions. Once a change occurs a table named J$<CDC Table> (J$Sample_Data) table will be updated.

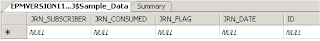

A table named J$<CDC table> is created, as the table I am using in this example is called “Sample_Data” a table named J$Sample_Data is created; this is the table which is updated once the above trigger is fired.

Two views are created “JV$

Once journalizing is active a green icon will be displayed on the datastore.

Inserting a record into the sample_data table

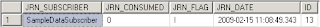

Fires off the trigger and inserts a record into the J$Sample_Data table

The field JRN_SUBSCRIBER is populated with the name of the subscriber which is monitoring the changes, the JRN_FLAG field is populated with

Running the view JV$Sample_Data will return the inserted record and journal information.

Right, back to ODI, you can view the changed data by right clicking the datastore > “Changed Data Capture” > Journal Data

This is performing the same functionality as running the view but in the ODI environment.

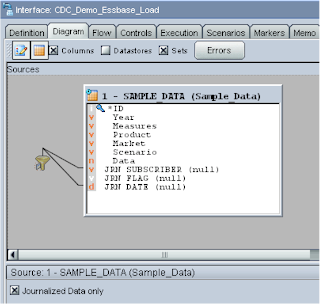

Now the journalizing is active an interface can be created which will take the new records and load them into our essbase database.

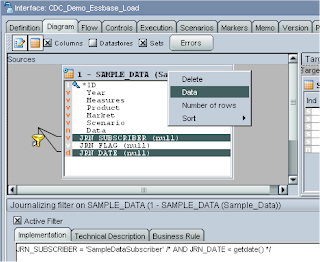

When you drag a journalized datastore onto the source a check box appears, when you check the box the journalizing columns JRN_FLAG, JRN_DATE and JRN_SUBSCRIBER become available.

A filter is automatically created on JRN_SUBSCRIBER & JRN_DATE columns; make sure you highlight the filter and update the expression as by default the subscriber to filter on is named as ‘SUNOPSIS’, you will need to update this to the name of the subscriber you are using, you can test you have it correct by applying the changes then right clicking the source datastore and selecting “Data”. The filter on JRN_DATE is commented out by default and can be updated if you require to use it.

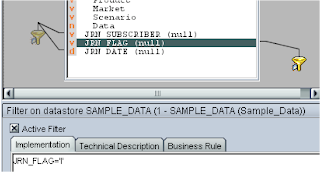

I added an extra filter on the JRN_FLAG column only to return records that equalled ‘I’, so inserts/updates and not deletes.

The rest of the interface is set up exactly like you would if you were loading data into any essbase database (this has all been covered in previous blogs)

Executing the interface will load any changed data into essbase, due to the nature of simple journalizing once the interface has completed successfully it deletes all the journal information (the JRN_FLAG is not taken into account), so if you require more than one interface in your integration to use the journal information then consistent set journalizing will be required, this will be covered in the next blog.

This interface could be scheduled though if you want to automate the interface to run when a change in data happens then there a number of journalizing tools available.

• OdiWaitForData waits for a number of rows in a table or a set of tables.

• OdiWaitForLogData waits for a certain number of modifications to occur on a journalized

table or a list of journalized tables. This tool calls OdiRefreshJournalCount to perform the

count of new changes captured.

• OdiWaitForTable waits for a table to be created and populated with a pre-determined

number of rows.

• OdiRetrieveJournalData retrieves the journalized events for a given table list or CDC set

for a specified journalizing subscriber. Calling this tool is required if using Database-Specific

Processes to load journalizing tables. This tool needs to be used with specific knowledge

modules. See the knowledge module description for more information.

• OdiRefreshJournalCount refreshes the number of rows to consume for a given table list or

CDC set for a specified journalizing subscriber.

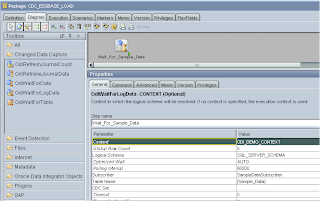

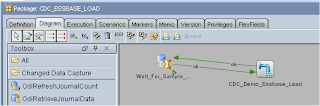

For this example I am going to use the OdiWaitForLogData tool, first a package is created and the tool added on to the diagram.

There are a number of parameters that can be set for the tool. I set it up so it would check the journalized table Sample_Data using the subscriber SampleDataDataSubscriber, it will check every one minute with an infinite timeout and move on to the next step when five rows of data have changed in the journalized table.

All that was left was to add the interface to load the data and once the data has been loaded start monitoring again.

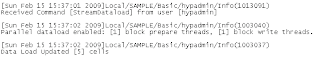

Now after executing the package, the session waits until five records have been added then the data load is initiated.

In the next blog I will look at using CDC with loading Metadata into a planning application, I will look at using separate interfaces depending on whether the metadata changed is a new member or it is a deletion, this will require the use of consistent set journalizing because as we have seen today once one interface has been executed in simple mode all the journal information is deleted.

Hi John;

ReplyDeleteDoes the "Oracle GL-to-EPMA Adaptor" for ODI come with rich, out-of-the-box functionality to query and join the right GL tables for extracting what HFM needs (e.g. COA segment values, hierarchies, code combinations, etc.) or does ODI leaves it up to us to identify those tables and define the queries and joins, as well as any custom code for subsequent processing that might be needed, then we'll have more questions.

Thanks

-Ach

Hi,

ReplyDeleteI have not really been involved with using any of the HFM knowledge modules so unfortunately I can't expand on your questions.

Cheers

John

Hi John, I'm not sure but I think there is a mistake on your post: Once you insert a new record, the view should be JV$DSAMPLE_DATA. The view JV$SAMPLE_DATA is created using a where clause WHERE L.JRN_CONSUMED = '1' and this column is set to '0' so the view keeps empty.

ReplyDeleteThanks for your work.