I thought I would take this opportunity to put together a full list of links to all the ODI-related blogs I have written. As I try to cover all areas of EPM, I feel the ODI ones are becoming fragmented, so it is worth putting together a summary page that can be used as a starting point, and one that I will update in the future as and when I cover more ODI subject areas.

This is probably one of the few posts where I don’t have to spend hours taking screenshots and uploading them.

Many of the earlier posts were based on ODI 10g but, to be honest, the concepts still hold true in 11g and the posts can still be used as a reference point. I know I still use them, because I have not had the chance to use ODI as much as I would like lately, and with my failing memory I need to remind myself how I went about things when I do use it.

No point in babbling on, but if you feel I have not covered an area, or you have some ideas for articles, then just get in touch. Quite a few of the posts were written because somebody contacted me one way or another.

So here is the table of contents for the ODI series.

ODI Series Part 1

- Introduction and installation of ODI, based on 10g. If you are looking to install 11g then there are a number of different blogs that have covered the installation.

ODI Series Part 2 - The Agent

- Covers the configuration of an agent on 10g. The concept is similar when configuring a standalone agent in 11g; the main difference is that, if running on Windows, the method to run the agent as a service has changed, as it can be controlled using OPMN, which I covered here.

ODI Series Part 3 - The configuration continues

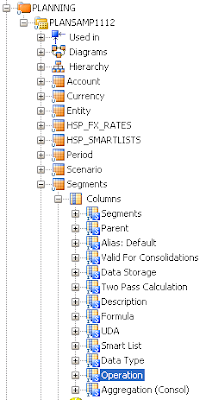

- A run through of the topology manager and the configuration for using flat files, rdbms, essbase and planning.

ODI Series 4 - Enter the Designer

- An introduction to the designer and process to building an interface to load metadata from a flat file to a planning application.

ODI Series Part 5 - SQL to Planning

- Covers the process for loading planning metadata from an rdbms source.

ODI Series Part 6 - Data load to planning

- How to load data through the planning layer.

ODI - Getting text data into planning

- An alternative solution, and not the official method, to load text data into planning. I am sure that at the time of writing the blog this was the only method, but I know that in subsequent releases of ODI and planning, loading text data can easily be achieved by using the adaptor.

- Part of this blog covers the direct method of loading text member data to planning, I believe this is only possible from planning 11.1.1.3+

ODI & Planning - Brief look into file manipulation

- As requested an introduction to manipulating metadata from a flat file source and loading into planning.

ODI Series - Planning and multiple UDAs

- How to load multiple UDAs into a planning application.

ODI Series - Handling errors with Planning/Essbase loads

- A run through of a few possible methods of proactively taking actions if there are errors in Planning/Essbase loads.

ODI Series - Planning 11.1.1.3 enhancements

- An overview of the list of enhancements when using Planning 11.1.1.3 or later with ODI.

ODI Series - Planning 11.1.1.3 enhancements continued

- There wasn’t enough time to go through all the enhancements in the first instalment so in this part I go through the remaining ones.

ODI Series - Renaming planning members

- There is currently no direct method of renaming planning members using the adaptor so in this post I go through a possible solution to achieve the renaming.

ODI Series - Deleting planning members

- Step by step process overview for deleting planning members and some of the issues that may be faced.

ODI Series - When is a planning refresh not a refresh

- Even though an interface may have completed successfully in the Operator, there may still be an issue with the planning refresh; I highlight the issue and give a possible solution.

Planning 11.1.2 - additional ODI functionality mixed in with a helping of ODI 11G

- A look at the additional features available when using Planning 11.1.2+ and ODI.

ODI - Question on loading planning Smart List data directly into essbase

- Covers an alternative way to load smart list data by loading directly to essbase instead of going through the planning layer.

ODI Series - Loading Smart List data into planning

- The official way of loading smart list data by loading through the planning layer.

ODI Series - Loading Essbase Metadata

- Loading metadata from a flat file source into an essbase database.

ODI Series - Loading data into essbase

- The title pretty much describes the post which is all about loading data into an essbase database.

ODI Series - Extracting data from essbase

- Covering the three different methods to extract data from essbase databases.

ODI Series - Essbase Outline Extractor

- Covers the process of extracting metadata from an essbase database.

ODI / Essbase challenge - Extracting alternate alias member information

- At the time of writing this post there was no method to extract alternate alias member information using the essbase adaptor, so I cover a method of customising the adaptor code to make it achievable. The code that I developed was later used by Oracle, and a patch release of ODI made it possible to extract the information; I covered the patch here.

ODI Series - Loading SQL Metadata into essbase and its problems

- A run through of an issue that was highlighted on the Oracle forums when loading essbase metadata from an rdbms source and changing the IKM rules separator options to values other than the default comma.

ODI Series - Extracting essbase formula issue

- Highlights and provides a solution to an issue that may be encountered when extracting essbase member formulas that span multiple lines.

ODI Series - Essbase to Planning

- As there is currently no adaptor which allows the extraction of metadata from planning, I go through one method to get around this: extract the information from an essbase database, then transform it and load it into a planning application.

ODI Series - Deleting essbase members part 1

- First of a three part series on deleting essbase members, this part goes through the standard method of using the remove unspecified option in a load rule.

ODI Series - Deleting essbase members part 2

- In this second part I go through a method to delete members where the source file only contains the members that need to be removed.

ODI Series - Deleting essbase members part 3

- In the final part I use a combination of Jython, essbase Java API and a customised KM to delete members based on members supplied from a source file.

ODI Series - Renaming essbase members

- Based on the final part of the deleting essbase members, modifications to the Java code allow for renaming members based on a source containing the original and new member names.

ODI Series - Essbase/Planning - Automating changes in data

- Using Change Data Capture (CDC) and the simple journalizing method to automate the process of loading data into an essbase database.
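
The general idea behind capturing changes and only processing what has changed can be sketched outside ODI. The example below is plain Python with SQLite and is not ODI's journalizing knowledge module; the table names (`source_data`, `journal`), the trigger names and the `consume_changes` helper are all assumptions made up for illustration. Triggers record the key of each changed row in a journal table, and a consumer picks up only those rows and then clears the journal.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE source_data (id INTEGER PRIMARY KEY, amount REAL);
    -- Hypothetical journal table: one row per captured change.
    CREATE TABLE journal (id INTEGER, change_type TEXT);

    CREATE TRIGGER capture_insert AFTER INSERT ON source_data
    BEGIN
        INSERT INTO journal VALUES (NEW.id, 'I');
    END;

    CREATE TRIGGER capture_update AFTER UPDATE ON source_data
    BEGIN
        INSERT INTO journal VALUES (NEW.id, 'U');
    END;
    """
)


def consume_changes(connection):
    """Return the current rows for all journaled ids, then clear the journal."""
    rows = connection.execute(
        "SELECT s.id, s.amount FROM source_data s "
        "WHERE s.id IN (SELECT id FROM journal) ORDER BY s.id"
    ).fetchall()
    connection.execute("DELETE FROM journal")
    connection.commit()
    return rows


conn.execute("INSERT INTO source_data VALUES (1, 100.0)")
conn.execute("INSERT INTO source_data VALUES (2, 200.0)")
first_batch = consume_changes(conn)   # both newly inserted rows

conn.execute("UPDATE source_data SET amount = 250.0 WHERE id = 2")
second_batch = consume_changes(conn)  # only the row that changed

print(first_batch, second_batch)
```

In this sketch the second run only sees the updated row, which is the behaviour that makes an automated load cheap to run on a schedule.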

ODI Series - Essbase/Planning - Automating changes in data - Part 2

- Using Change Data Capture (CDC) and the consistent set journalizing method to automate the process of loading metadata to a planning application.

ODI Series - Release Update

- Highlights important patch updates to fix bugs with the essbase adaptors, please note this was 10.1.3.4.7_01 so no doubt you are already running a newer release than that.

ODI Series - Patch Update - Essbase data load issue resolved

- A review of a patch released to fix a bug with loading data to essbase.

ODI Series - Problem with latest essbase patch release

- The patch released to fix a bug with loading data to essbase did fix the original issue but now generates a new bug which I log with Oracle.

ODI Series - Patch Update - 10.1.3.5.5

- A patch is released that finally does fix the issues with loading data to essbase.

ODI Series - Essbase related bug fixes

- A delve into a couple of essbase related bug fixes: one is based around the order of members when extracting essbase metadata, and the other allows alias information to be extracted from tables other than the default.

- A look at a couple of issues relating to 11.1.1.5: loading metadata to essbase, and loading metadata to planning using the memory engine as the staging area.

ODI Series - Will the real ODI-HFM blog please stand up

- Covers the configuration required to start using the HFM adaptor, some of the errors that may be encountered and finally successfully reversing a HFM application.

ODI Series - Loading HFM metadata

- An overview of the knowledge modules available for HFM and then a step by step process to load metadata to a HFM application from a flat file source.

ODI Series - Loading HFM data

- Step by step process to load data to HFM and a description of each of the IKM options available.

ODI Series - Extracting data from HFM

- Overview of how to extract data from HFM and a description of the KM options available.

ODI Series - Putting it all together

- So you have a number of interfaces, procedures, variables and you are looking to automate your processes, this post covers putting it all together with the use of a package.

ODI Series - A few extra tips

- Covers loading multiple UDAs to an essbase database and how to sum up flat file source data when loading through the planning layer and not direct to essbase.
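
The summing idea in the second tip can be illustrated outside ODI. The sketch below is plain Python, not the interface from the post; the column names (`Account`, `Entity`, `Period`, `Amount`), the sample rows and the `sum_flat_file` helper are assumptions for illustration. It totals the values of rows that share the same member combination, so that each combination appears only once before it is loaded.

```python
import csv
import io
from collections import defaultdict


def sum_flat_file(lines, key_columns, value_column):
    """Total value_column for every distinct combination of key_columns."""
    totals = defaultdict(float)
    for row in csv.DictReader(lines):
        key = tuple(row[col] for col in key_columns)
        totals[key] += float(row[value_column])
    return dict(totals)


# Hypothetical flat file content; the column names are illustrative only.
SAMPLE = """Account,Entity,Period,Amount
Sales,E01,Jan,100
Sales,E01,Jan,50
Sales,E02,Jan,25
"""

totals = sum_flat_file(io.StringIO(SAMPLE), ["Account", "Entity", "Period"], "Amount")
for key, amount in sorted(totals.items()):
    print(",".join(key), amount)
```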

ODI Series - The final instalment

- Definitely not the final instalment of the ODI series and in this post I go through generating scenarios and scheduling them in the Operator, I also go through how to execute a scenario using the Java SDK in 10g.

ODI Series - Excel functionality

- A look at the process of extracting data from an excel file and loading into a relational table.

ODI Series - Reversing a collection of files from an excel template

- An overview of using the Knowledge module “RKM File (FROM Excel)”. This RKM provides the ability to reverse files and maintain the structure definition based on the information held in an excel template.

ODI Series - Web Services

- In the first of a four part series looking at Web Services in 10g I go through setting up Axis2 and how to upload the ODI public web service to it, this allows the use of the OdiInvoke service and its five operations with one of them being the execution of scenarios using a SOAP request.

ODI Series - Web Services Part 2

- Using Java to interact directly with the ODI web services.

ODI Series - Web Services Part 3

- Step by Step configuration of Data Services and an example of how they could be used to load data to essbase.

ODI Series - Web Services Part 4

- A look at the ODI tool OdiInvokeWebService and putting it into practice by invoking EPMA web services.

ODI Series - Processing all files in a directory

- Step through of a method to process all files in a directory and load them to a target relational table.
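
As a rough picture of the pattern, and not the ODI package from the post, the sketch below loops over every matching file in a directory and appends its rows to a single relational table. It is plain Python using SQLite as a stand-in target; the table name `target`, the `member` and `value` columns, the `*.csv` pattern and the `load_directory` helper are all assumptions for illustration.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path


def load_directory(directory, connection, pattern="*.csv"):
    """Load every file matching pattern into one table; return the files processed."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS target (source_file TEXT, member TEXT, value REAL)"
    )
    processed = []
    for path in sorted(Path(directory).glob(pattern)):
        with path.open(newline="") as handle:
            rows = [
                (path.name, row["member"], float(row["value"]))
                for row in csv.DictReader(handle)
            ]
        connection.executemany("INSERT INTO target VALUES (?, ?, ?)", rows)
        processed.append(path.name)
    connection.commit()
    return processed


# Demo with two throwaway files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "a.csv").write_text("member,value\nSales,100\n")
    Path(tmp, "b.csv").write_text("member,value\nCosts,40\nSales,10\n")
    conn = sqlite3.connect(":memory:")
    processed = load_directory(tmp, conn)
    row_count = conn.execute("SELECT COUNT(*) FROM target").fetchone()[0]
    print(processed, row_count)
```

Recording the source file name against each row is a design choice in this sketch; it makes it easy to trace a loaded record back to the file it came from.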

ODI Series - Quick look at user functions

- Ever wondered what user functions are and how to use them? If so, have a read of this post.

ODI Series - Executing Hyperion Business Rules

- An in-depth look at using ODI to execute business rules.

ODI Series - Executing Hyperion Business Rules Part 2

- If part 1 didn’t satisfy you then in this part I go through another method to execute business rules by customising a knowledge module and using Jython.

Using Lifecycle Management (LCM) with ODI

- A look at the possibilities of using ODI and LCM. In the example I go through loading Cell Text to Planning.

Managing ODI 11g standalone agents using OPMN with EPM 11.1.2

- Step by step process of configuring a standalone agent to be controlled by OPMN, the agent is configured on an EPM hosted environment.

If you are looking for all other areas of ODI not covered here then I can recommend these excellent blogs -

If you still can't find what you are looking for, or need help, then the ODI forum is your next stop.