In my last blog I went through one of the many new features included in the 16.07 EPM Cloud release and today it is time for another.

A quick snippet from the new features documentation:

“Data Management can now be used as a primary gateway to move data from one service instance to another. A service instance is a self-contained unit often containing the web server and the database application”

Before I start it is worth pointing out this new functionality works in exactly the same way as FDMEE on-premise hybrid which I have covered in great detail in previous posts, if you have not done so already then read them have a read of the following:

FDMEE Hybrid and the REST Part 2

FDMEE Hybrid and the REST Part 3

Instead of covering old ground I am going to hopefully make it a little more interesting by testing out moving data between an PBCS application and an FCCS one, as usual I will try and keep it as simple as possible.

I know it is early days for FCCS but it is a little disappointing that already there is a difference between accessing Data Management in FCCS and PBCS.

Using the PBCS simplified interface, Data Management is accessed using the navigator under Integration.

In FCCS it is under “Manage” and for some reason it is named “Data Integration”

Maybe in the future it will change so they are aligned

Also the way Data Management is opened is slightly different to PBCS as it goes through a cut down workspace route instead of direct.

When registering a target application, you will now have the option of “Cloud”

This will open a new window where the cloud credentials are entered for the target application.

You will then be presented with the types of applications available.

My initial intention was to add a FCCS target application to a PBCS instance so I selected “Consolidation”

Unfortunately, this returned no applications even though there is a registered FCCS application.

I think I must be a little eager and this looks like it might be available in the August release (update 6th August -there are not going to be any updates in the August release, so hopefully September)

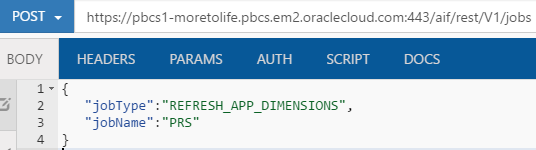

To populate the application names a REST resource is called on the target EPM Cloud instance.

I will be demonstrating any REST calls using a REST client.

When calling the REST resource against an FCCS instance no results are returned which is why the applications list is empty in Data Management.

Anyway I will update this post once the functionality is there.

I decided to flip it round and add a PBCS application as a target in a FCCS Data Management instance, I knew this should return applications as it does when using FDMEE hybrid functionality.

This time more success and a planning application is available.

So now I have two target applications in Data Management, a local FCCS application and a cloud PBCS application.

Now you can probably see that the functionality is just going to be like using hybrid because in this example there is a local application and a cloud application, yes they are both in the cloud but it is going operate in the same way as if it was on-premise.

You will notice that there are buttons to “Refresh Metadata” and “Refresh Members” available for the local and cloud target applications.

Let me briefly go through what happens when these clicked for a cloud target application, this was covered in more detail in my FDMEE hybrid post.

First “Refresh Metadata”, once clicked this will fire off a process which can be viewed in “Process Details”

From the steps you will notice more evidence that this functions in exactly the same way as on-premise hybrid.

A REST resource is called which updates the applications metadata in the target applications Data Management instance.

This will execute a job on the target instance and the local instance will keep checking for a success status.

Once a success state has been retrieved another REST resource is called against the target instance to return the applications metadata.

The target applications dimensions and options are then updated in the local instance.

If you click the “Refresh Members” button another process will be executed which can be viewed in “Process Details”

Once again this is all handled by REST resources.

A REST resource is called on the target instance to first refresh the members from the target application in this case PBCS and then push them into data management.

This means that the data management in the target instance has the latest member information.

Once that is successful another REST resource is called return all the member information for each dimension from the target instance.

This information is then stored in the local Data Management instance and can be used for things like creating member mappings between source and target.

The process from now on is the same as when moving data between applications in on-premise.

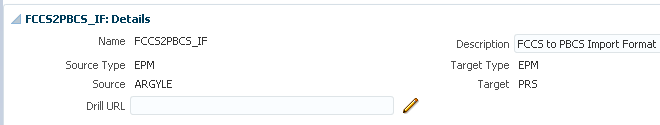

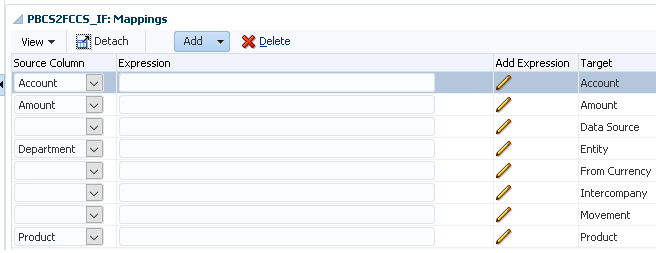

So am import format is created to map where possible the source FCCS dimensions to the target PBCS application.

A location is created and the import format selected.

A Data Load Rule is created category, period mapping type and source/target plan types are set.

If you are not aware FCCS is just like PBCS in many ways as it is just a web application sat on top of Essbase databases, that is why so many of the properties are similar like plan types.

So the load rule is going to extract actual data from the “Consol” plan type in the local FCCS application and then load into the PBCS target applications “IncStmt” plan type.

A number of source filters were applied to define the data that is required to be extracted.

Data Load Mappings are created to map the source FCCS members to target PBCS members.

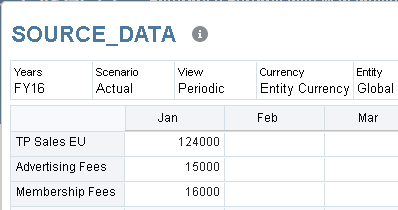

Before running the integration, I created two forms one in the source FCCS application which matches the source filters created in the data load rule.

And a form in the target PBCS application containing the equivalent mapped members from the above form.

Now to run the import and validate steps of the rule in the workbench.

The process behind these steps is first the data is first extracted from the FCCS plan type, as this is a BSO application an Essbase calculation script is generated using the DATAEXPORT command and the extracted data is written to a file, in my example the following is calculation script is generated:

SET DataExportOptions

{

DATAEXPORTCOLFORMAT ON;

DATAEXPORTDIMHEADER ON;

DATAEXPORTDRYRUN OFF;

DataExportRelationalFile OFF;

DataExportNonExistingBlocks OFF;

DataExportLevel ALL;

DATAEXPORTCOLHEADER "Period";

DATAEXPORTOVERWRITEFILE ON;

DataExportDynamicCalc OFF;

};

FIX ("410001","610001","660002","FCCS_Entity Input","EUR","FCCS_Managed Data","FCCS_Global Assumptions","FCCS_No Intercompany","FCCS_No Movement","Jan","No Product","Actual","FCCS_Periodic","FY16")

DATAEXPORT "File" "," "/u03/inbox/data/ARGYLE_Consol_26.txt" "#";

ENDFIX

Once the data file has been created it is then loaded into the data management database and mapped based on the data load mappings.

There were no issues with mappings as the validate step is displaying as successful.

On to the export step.

The export was successfully meaning the data has been loaded into the target application, the process that goes into this step is:

The data in Data Management is extracted to a flat file in column format based on the dimensions in the target application.

The file name format is based on target application name and then underscore plus the local Data Management process id e.g. PRS_30.dat

A REST resource is used to upload the file from local FCCS instance to the target PBCS instance, if you want to read about how the file is uploaded using REST then you can read my previous posts here and here

Once the file has been uploaded another REST resource is called to load the file into the target applications plan type (Essbase database)

As part of the REST resource process the uploaded file is moved and renamed, a load rule created and the file then loaded into the Essbase database using the load rule.

To verify the data has been loaded successfully the form that was created earlier is run.

Map where possible the PBCS dimensions to the FCCS application.

Create a new location and set the import format.

A Data Load Rule is created.

This time the target is called a cube instead of plan type, not sure why they would be both called plan types on the previous example but this way round it is a cube.

Source filters are added to define what data to extract from the PBCS plan type.

Data Load mapping are added to map the source PBCS members to the target FCCS members.

This time around the source PBCS plan type has data against the intersection defined in the data load rule source filters.

The equivalent members in the target FCCS plan type have no data.

So time to execute the load rule in the workbench.

The import validation steps were successful and a summary of the process is contained in “Process Details”

To achieve the above steps the following process is followed:

A REST resource is called on the target PBCS instance which extracts the data from the plan type, once again as this is a BSO plan type a calculation script is created using the DATAEXPORT command to extract the data, if it was an ASO plan type then MDX would be generated.

The calculation script is generated in the local data management and then included in the body of the REST post.

Once the data has been extracted to a file it is renamed and moved to the LCM directory ready to be downloaded.

The file is then downloaded using another REST resource, to find out more information on the resource have a read of my previous post here.

Once downloaded the file is then loaded to the local Data Management database and mapped based on the data load mappings.

The final step is to export the data from Data Management and load to the local FCCS plan type.

This is done using the standard process of exporting the data to a flat file in column format, an Essbase load rule is generated and then the data in the file is loaded using the load rule.

Just to be sure the data was loaded successfully the form that was created earlier was run.

So that completes FCCS to PBCS and PBCS to FCCS, there is another option and that is to load data from a flat file into a local Data Management instance, map and then export and load to a target cloud instance.

This could be useful if you have files which contain data for both cloud instances and instead of having to load it to multiple instances it can be loaded to one, alternatively if you want to carry out all your mappings in one instance then this method could be chosen.

I am not going to go through the process as it is not really any different than what I have already covered.

The only difference is when you create the import format you select file as the source and the target cloud application as the target.

I have tested out this method and it worked as expected.

So with Data Management (FDMEE) you now you have the integration options of on-premise to on-premise, on-premise to cloud, cloud to on-premise, cloud to cloud

A quick snippet from the new features documentation:

“Data Management can now be used as a primary gateway to move data from one service instance to another. A service instance is a self-contained unit often containing the web server and the database application”

Before I start it is worth pointing out this new functionality works in exactly the same way as FDMEE on-premise hybrid which I have covered in great detail in previous posts, if you have not done so already then read them have a read of the following:

FDMEE Hybrid and the REST Part 2

FDMEE Hybrid and the REST Part 3

Instead of covering old ground I am going to hopefully make it a little more interesting by testing out moving data between an PBCS application and an FCCS one, as usual I will try and keep it as simple as possible.

I know it is early days for FCCS but it is a little disappointing that already there is a difference between accessing Data Management in FCCS and PBCS.

Using the PBCS simplified interface, Data Management is accessed using the navigator under Integration.

In FCCS it is under “Manage” and for some reason it is named “Data Integration”

Maybe in the future it will change so they are aligned

Also the way Data Management is opened is slightly different to PBCS as it goes through a cut down workspace route instead of direct.

FCCS

PBCS

When registering a target application, you will now have the option of “Cloud”

This will open a new window where the cloud credentials are entered for the target application.

You will then be presented with the types of applications available.

My initial intention was to add a FCCS target application to a PBCS instance so I selected “Consolidation”

Unfortunately, this returned no applications even though there is a registered FCCS application.

I think I must be a little eager and this looks like it might be available in the August release (update 6th August -there are not going to be any updates in the August release, so hopefully September)

To populate the application names a REST resource is called on the target EPM Cloud instance.

I will be demonstrating any REST calls using a REST client.

When calling the REST resource against an FCCS instance no results are returned which is why the applications list is empty in Data Management.

Anyway I will update this post once the functionality is there.

I decided to flip it round and add a PBCS application as a target in a FCCS Data Management instance, I knew this should return applications as it does when using FDMEE hybrid functionality.

This time more success and a planning application is available.

So now I have two target applications in Data Management, a local FCCS application and a cloud PBCS application.

Now you can probably see that the functionality is just going to be like using hybrid because in this example there is a local application and a cloud application, yes they are both in the cloud but it is going operate in the same way as if it was on-premise.

You will notice that there are buttons to “Refresh Metadata” and “Refresh Members” available for the local and cloud target applications.

Let me briefly go through what happens when these clicked for a cloud target application, this was covered in more detail in my FDMEE hybrid post.

First “Refresh Metadata”, once clicked this will fire off a process which can be viewed in “Process Details”

From the steps you will notice more evidence that this functions in exactly the same way as on-premise hybrid.

A REST resource is called which updates the applications metadata in the target applications Data Management instance.

This will execute a job on the target instance and the local instance will keep checking for a success status.

Once a success state has been retrieved another REST resource is called against the target instance to return the applications metadata.

The target applications dimensions and options are then updated in the local instance.

If you click the “Refresh Members” button another process will be executed which can be viewed in “Process Details”

Once again this is all handled by REST resources.

A REST resource is called on the target instance to first refresh the members from the target application in this case PBCS and then push them into data management.

This means that the data management in the target instance has the latest member information.

Once that is successful another REST resource is called return all the member information for each dimension from the target instance.

This information is then stored in the local Data Management instance and can be used for things like creating member mappings between source and target.

The process from now on is the same as when moving data between applications in on-premise.

So am import format is created to map where possible the source FCCS dimensions to the target PBCS application.

A location is created and the import format selected.

A Data Load Rule is created category, period mapping type and source/target plan types are set.

If you are not aware FCCS is just like PBCS in many ways as it is just a web application sat on top of Essbase databases, that is why so many of the properties are similar like plan types.

So the load rule is going to extract actual data from the “Consol” plan type in the local FCCS application and then load into the PBCS target applications “IncStmt” plan type.

A number of source filters were applied to define the data that is required to be extracted.

Data Load Mappings are created to map the source FCCS members to target PBCS members.

Before running the integration, I created two forms one in the source FCCS application which matches the source filters created in the data load rule.

And a form in the target PBCS application containing the equivalent mapped members from the above form.

Now to run the import and validate steps of the rule in the workbench.

The process behind these steps is first the data is first extracted from the FCCS plan type, as this is a BSO application an Essbase calculation script is generated using the DATAEXPORT command and the extracted data is written to a file, in my example the following is calculation script is generated:

SET DataExportOptions

{

DATAEXPORTCOLFORMAT ON;

DATAEXPORTDIMHEADER ON;

DATAEXPORTDRYRUN OFF;

DataExportRelationalFile OFF;

DataExportNonExistingBlocks OFF;

DataExportLevel ALL;

DATAEXPORTCOLHEADER "Period";

DATAEXPORTOVERWRITEFILE ON;

DataExportDynamicCalc OFF;

};

FIX ("410001","610001","660002","FCCS_Entity Input","EUR","FCCS_Managed Data","FCCS_Global Assumptions","FCCS_No Intercompany","FCCS_No Movement","Jan","No Product","Actual","FCCS_Periodic","FY16")

DATAEXPORT "File" "," "/u03/inbox/data/ARGYLE_Consol_26.txt" "#";

ENDFIX

Once the data file has been created it is then loaded into the data management database and mapped based on the data load mappings.

There were no issues with mappings as the validate step is displaying as successful.

On to the export step.

The export was successfully meaning the data has been loaded into the target application, the process that goes into this step is:

The data in Data Management is extracted to a flat file in column format based on the dimensions in the target application.

The file name format is based on target application name and then underscore plus the local Data Management process id e.g. PRS_30.dat

A REST resource is used to upload the file from local FCCS instance to the target PBCS instance, if you want to read about how the file is uploaded using REST then you can read my previous posts here and here

Once the file has been uploaded another REST resource is called to load the file into the target applications plan type (Essbase database)

As part of the REST resource process the uploaded file is moved and renamed, a load rule created and the file then loaded into the Essbase database using the load rule.

To verify the data has been loaded successfully the form that was created earlier is run.

All good with the FCCS to PBCS integration, so let us reverse the process and extract data from the PBCS application and load into the local FCCS application.

No changes are needed to the target applications as they have already been configured, the setup follows the same logic, create an import format but this time the PBCS application is the source and the FCCS application is the target.

Map where possible the PBCS dimensions to the FCCS application.

Create a new location and set the import format.

A Data Load Rule is created.

This time the target is called a cube instead of plan type, not sure why they would be both called plan types on the previous example but this way round it is a cube.

Source filters are added to define what data to extract from the PBCS plan type.

Data Load mapping are added to map the source PBCS members to the target FCCS members.

This time around the source PBCS plan type has data against the intersection defined in the data load rule source filters.

The equivalent members in the target FCCS plan type have no data.

So time to execute the load rule in the workbench.

The import validation steps were successful and a summary of the process is contained in “Process Details”

To achieve the above steps the following process is followed:

A REST resource is called on the target PBCS instance which extracts the data from the plan type, once again as this is a BSO plan type a calculation script is created using the DATAEXPORT command to extract the data, if it was an ASO plan type then MDX would be generated.

The calculation script is generated in the local data management and then included in the body of the REST post.

Once the data has been extracted to a file it is renamed and moved to the LCM directory ready to be downloaded.

The file is then downloaded using another REST resource, to find out more information on the resource have a read of my previous post here.

Once downloaded the file is then loaded to the local Data Management database and mapped based on the data load mappings.

The final step is to export the data from Data Management and load to the local FCCS plan type.

This is done using the standard process of exporting the data to a flat file in column format, an Essbase load rule is generated and then the data in the file is loaded using the load rule.

Just to be sure the data was loaded successfully the form that was created earlier was run.

So that completes FCCS to PBCS and PBCS to FCCS, there is another option and that is to load data from a flat file into a local Data Management instance, map and then export and load to a target cloud instance.

This could be useful if you have files which contain data for both cloud instances and instead of having to load it to multiple instances it can be loaded to one, alternatively if you want to carry out all your mappings in one instance then this method could be chosen.

I am not going to go through the process as it is not really any different than what I have already covered.

The only difference is when you create the import format you select file as the source and the target cloud application as the target.

So with Data Management (FDMEE) you now you have the integration options of on-premise to on-premise, on-premise to cloud, cloud to on-premise, cloud to cloud

Hello John, excellent and informative blog post as ever.

ReplyDeleteOne question, I am trying create cloud PBCS to cloud PBCS but cannot see my other PBCS pod Production to Test is there any reason that this could be happening when it is all in cloud and not on premise?