In the last post I went through two new REST API resources for running data load rules and batches, which are available from FDMEE 11.1.2.4.210. These resources have been available for some time in the cloud, which I wrote about here.

In this post I am going to continue where I left off and look at another three REST resources which were available in Data Management in the cloud from the 10.16 release and for on-premise from FDMEE 11.1.2.4.210.

I will also cover the equivalent commands using the EPM Automate utility which is currently only cloud based.

The first piece of functionality I am going to look at is the ability to import data member mappings, so let us start with how this can be achieved using the EPM Automate utility.

If you are using the web UI to import mappings you would go to “Data Load Mapping”, select import for either a single dimension or all dimensions and then select or upload a file with the mappings in.

In reality you want to keep manual tasks down to a minimum, so to do this using the EPM Automate utility the command you will need is “importmapping” and the syntax is:

epmautomate importmapping DIMENSION_NAME|ALL FILE_NAME IMPORT_MODE VALIDATION_MODE LOCATION

DIMENSION_NAME|ALL - The dimension name for a specific dimension to import, such as ACCOUNT, or ALL to import all dimensions

FILE_NAME - The file and path from which to import mappings. The file format can be .CSV, .TXT, .XLS, or .XLSX. The file must be uploaded prior to importing, either to the inbox or to a sub-directory of the inbox.

IMPORT_MODE - The import mode: MERGE to add new rules or replace existing rules, or REPLACE to clear prior mapping rules before import

VALIDATION_MODE - Whether to use validation mode, true or false. An entry of true validates the target members against the target application; false loads the mapping file without any validations.

LOCATION – The location where the mapping rules should be loaded to.

For an example I am going to load the following account member mappings in csv format using the utility.

First the mapping file needs to be uploaded to the Data Management inbox using the utility.

Next the mappings are imported using the “importMapping” command.

A quick check in the UI confirms the mappings are definitely available for the specified location.

So with two commands the process of loading mappings can be automated.
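As a rough sketch of those two steps, the snippet below builds the two command lines in Python (used here as a stand-in scripting language). The local file path, location name and inbox folder are hypothetical, the optional folder argument on “uploadfile” is my assumption about the utility, and a prior “epmautomate login” session is assumed.

```python
import shlex


def import_mapping_commands(local_file, dimension, import_mode, validate,
                            location, inbox_dir="inbox"):
    """Build the upload and import command lines as argument lists.

    Assumes an epmautomate login has already been performed and that
    uploadfile accepts a target Data Management folder as its second
    argument (an assumption, check the utility's documentation).
    """
    if import_mode not in ("MERGE", "REPLACE"):
        raise ValueError("import_mode must be MERGE or REPLACE")
    # Keep only the file name; it is referenced relative to the inbox.
    file_name = local_file.replace("\\", "/").split("/")[-1]
    upload = ["epmautomate", "uploadfile", local_file, inbox_dir]
    do_import = [
        "epmautomate", "importmapping", dimension,
        f"{inbox_dir}/{file_name}", import_mode,
        "true" if validate else "false", location,
    ]
    return [upload, do_import]


# Hypothetical file and location names, for illustration only.
for command in import_mapping_commands(
        "C:/Files/acc_mappings.csv", "ACCOUNT", "REPLACE", False, "MY_LOCATION"):
    print(shlex.join(command))
```

The argument lists could be handed to `subprocess.run` one after the other, stopping if the upload returns a non-zero exit code.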

Doing this using a REST resource is an easy task as well. The resource is available for both EPM Cloud and FDMEE from 11.1.2.4.210.

The URL for the resource is the same as when running rules or batches and follows the format:

On-premise : http(s)://<webserver>:<port>/aif/rest/V1

Cloud: https://<cloud_instance>/aif/rest/V1

The resource uses a POST method and has a number of input parameters which are required to be in JSON format in the body of the request.

For an example I am going to import the mappings in the following CSV file for all dimensions.

The mapping file has already been uploaded to the inbox directory.

In this example I am importing mappings to an on-premise FDMEE instance but it would be exactly the same for cloud.

The “jobType” is always “MAPPINGIMPORT” and the “jobName” will be either the name of the dimension or ALL. I think the rest of the parameters in the body of the POST should be self-explanatory, as they follow the same logic as the EPM Automate utility.
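Since the request itself was shown as a screenshot, here is a minimal sketch of what the body might look like, written in Python. Only “jobType” and “jobName” are named in the text; the other field names (“fileName”, “importMode”, “validationMode”, “locationName”) and the “/jobs” path appended to the base URL are my assumptions based on Oracle's REST documentation, and the host, file and location are hypothetical.

```python
import base64
import json
import urllib.request


def mapping_import_payload(dimension, file_name, import_mode, validate, location):
    """Build the JSON body for an import mapping job (field names assumed)."""
    if import_mode not in ("MERGE", "REPLACE"):
        raise ValueError("import_mode must be MERGE or REPLACE")
    return {
        "jobType": "MAPPINGIMPORT",      # fixed value for mapping imports
        "jobName": dimension,            # a dimension name or ALL
        "fileName": file_name,           # file already uploaded to the inbox
        "importMode": import_mode,
        "validationMode": "true" if validate else "false",
        "locationName": location,
    }


def post_job(base_url, user, password, payload):
    """POST the payload with basic authentication and return the parsed JSON."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        base_url.rstrip("/") + "/jobs",  # assumed jobs resource under /aif/rest/V1
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": "Basic " + token},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Hypothetical file and location; nothing is sent until post_job is called.
body = mapping_import_payload("ALL", "inbox/mappings.csv", "MERGE", False, "MY_LOCATION")
print(json.dumps(body, indent=2))
```

A call would then look like `post_job("https://fdmee.example.com:19000/aif/rest/V1", "admin", "password", body)`, where the server address and credentials are placeholders.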

Once the request has been sent a response should be returned in JSON format which will contain information about the import mapping process.

The job status has been returned as running. To keep checking the status, a GET request can be made to the URL held in the href parameter.

If you take a look at process details in the FDMEE UI you can see that the import mapping process was indeed successful.

The imported mappings are now available in the data load mapping area.

As usual I also like to convert this into the scripting world and with a little bit of PowerShell member mappings can be imported.

To keep checking the status, the URL in the returned href parameter can be stored and then a request made using the URL.
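The status check can be sketched as a small polling loop. This is Python rather than the PowerShell I used, and it assumes the response carries a “jobStatus” field plus a “links” list whose “self” entry holds the href; those names are assumptions about the response layout, not something confirmed here.

```python
import json
import time
import urllib.request


def status_url(job):
    """Pick the URL to poll from the job response (assumed 'links' layout)."""
    for link in job.get("links", []):
        if link.get("rel") == "self":
            return link["href"]
    raise KeyError("no self link found in the job response")


def get_json(url, auth_header):
    """GET a URL with a ready-made Authorization header and parse the JSON."""
    request = urllib.request.Request(url, headers={"Authorization": auth_header})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def wait_for_job(job, fetch, interval=5.0, max_checks=60):
    """Re-fetch the job until it is no longer RUNNING or max_checks is reached.

    `fetch` is any callable that takes the status URL and returns the
    parsed job response, which keeps the loop easy to test offline.
    """
    url = status_url(job)
    for _ in range(max_checks):
        if job.get("jobStatus") != "RUNNING":
            break
        time.sleep(interval)
        job = fetch(url)
    return job


# Typical use against a live server (auth_header is a "Basic ..." string):
# final = wait_for_job(first_response, lambda url: get_json(url, auth_header))
```

Capping the number of checks avoids a script that hangs forever if a process never leaves the running state.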

Hopefully you agree that it is not too difficult to script the process but feel free to use a scripting language you feel most comfortable with.

So let us now reverse the process and export mappings from FDMEE/Data Management, first with help from the EPM Automate utility.

The command to use this time is “exportmapping” and the syntax is similar to using the import functionality.

epmautomate exportmapping DIMENSION_NAME|ALL FILE_NAME LOCATION

DIMENSION_NAME|ALL - The dimension name for a specific dimension to export or ALL to export all dimensions

FILE_NAME - The file and path to which the mappings are exported. The file format can be .CSV, .TXT, .XLS, or .XLSX.

LOCATION – The location where the mapping rules should be exported from.

So for this example let us export all mappings for the location “DelimitedLoc”.

The exported mapping file can then be downloaded.

Now we have local access to the exported mapping file.

To export mappings using REST, the concept is pretty much the same as when importing.

The resource has the same URL and POST method.

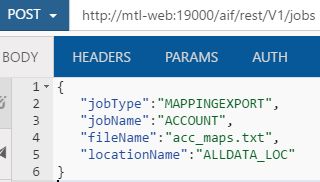

This time I am going to export account dimension mappings to a text file for the “ALLDATA_LOC” location.

The “jobType” will always be “MAPPINGEXPORT” and the remaining parameters shouldn’t need any explanation.
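For reference, a sketch of the export body in Python. Apart from “jobType” and “jobName”, the field names (“fileName”, “locationName”) are my assumption from Oracle's REST documentation, and the output file name is hypothetical. The extension check simply mirrors the formats listed for the utility.

```python
import json
from pathlib import PurePosixPath

# File formats listed for mapping files in the EPM Automate syntax.
ALLOWED_EXTENSIONS = {".csv", ".txt", ".xls", ".xlsx"}


def mapping_export_payload(dimension, file_name, location):
    """Build the JSON body for an export mapping job (field names assumed)."""
    suffix = PurePosixPath(file_name).suffix.lower()
    if suffix not in ALLOWED_EXTENSIONS:
        raise ValueError(f"unsupported mapping file extension: {suffix!r}")
    return {
        "jobType": "MAPPINGEXPORT",   # fixed value for mapping exports
        "jobName": dimension,         # a dimension name or ALL
        "fileName": file_name,        # no path means the root directory
        "locationName": location,
    }


# Account mappings to a text file for the ALLDATA_LOC location.
print(json.dumps(
    mapping_export_payload("ACCOUNT", "account_mappings.txt", "ALLDATA_LOC"),
    indent=2))
```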

The returned response once again contains the job information.

Process details in the web UI will show that an export mapping process has been run.

If no path was included in the REST request, the exported mapping file will be available in the root directory.

Another new feature in FDMEE 11.1.2.4.210 is the ability to select the delimiter for the mapping export file.

The delimiter can be set at system, application or user setting level.

The options besides the default of comma are exclamation mark, semi-colon or pipe.

So if I select pipe and send the same REST call again the output mapping file should now honour that setting.

The only difference when importing from or exporting to Excel format is the file extension.

The mappings will then be in the Excel template format.

In scripting terms there is not much change from the import example I gave; a quick update to the payload parameters and you are ready to run an export.

On to the final command for today, which is the ability to run predefined reports in FDMEE/Data Management. Once the report has run it can be downloaded from the “outbox/reports” directory.

The EPM Automate utility command is “runDMReport” and has the following syntax:

epmautomate runDMReport REPORT_NAME PARAMETER=Value "Report Output Format=[PDF|HTML|XLS|XLSX]"

REPORT_NAME is the name of the report to be executed.

PARAMETER=Value are the report parameters, if any, and their values.

Report Output Format is the report output format and valid options are PDF, HTML, XLS, and XLSX.

The syntax for the EPM Automate utility can be generated by going to “Report Execution” in Data Management.

Select the report and then “Create Report Script” and a window will be displayed with the full command to run.

This can be copied and then executed using the utility. If a report has parameters then you can select them and they will be included in the generated command.

Using the first example, after copying and pasting the command, the report can be generated.

Unfortunately, the report is named after the process number, which you are not informed of at generation time.

If you take a look in process details you can see the process ID. That will be the name of the report, and the extension depends on the output format.

The report can then be downloaded using the “downloadfile” command.

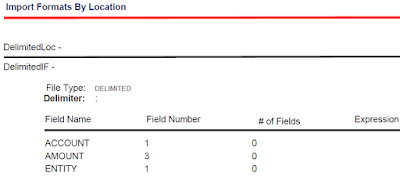

The “Import Formats By Location” report is now available locally in PDF format.
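To make the naming rule concrete, here is a small Python sketch that derives the file to download from a process ID and output format. The process ID is hypothetical and the lowercase extension is an assumption on my part, so check the actual file name in “outbox/reports” before relying on it.

```python
import shlex

VALID_FORMATS = {"PDF", "HTML", "XLS", "XLSX"}


def report_file_path(process_id, output_format):
    """Return the expected report path: process ID plus format extension."""
    fmt = output_format.upper()
    if fmt not in VALID_FORMATS:
        raise ValueError(f"unsupported report format: {output_format!r}")
    # Assumption: the extension is the lowercase form of the format name.
    return f"outbox/reports/{process_id}.{fmt.lower()}"


def download_command(process_id, output_format):
    """Build the epmautomate downloadfile command as an argument list."""
    return ["epmautomate", "downloadfile",
            report_file_path(process_id, output_format)]


# 1234 is a made-up process ID taken from process details.
print(shlex.join(download_command(1234, "PDF")))
```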

Let us move on to the REST resource, which has the advantage of providing the name of the generated report.

For an example I am going to be running the “Dimension Map (Dimension)” report with the following parameters.

The URL and method for the resource are the same as in the previous examples, and the above report translates into the following request.

The “jobType” is set as “REPORT” and “jobName” is the name of the report.
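A sketch of such a request body in Python follows. The “reportFormatType” and “parameters” field names are my assumption from Oracle's REST documentation, and the parameter names and values used below are purely illustrative, since the real ones depend on the report definition.

```python
import json

VALID_FORMATS = {"PDF", "HTML", "XLS", "XLSX"}


def report_payload(report_name, output_format, parameters=None):
    """Build the JSON body for running a report (field names assumed)."""
    fmt = output_format.upper()
    if fmt not in VALID_FORMATS:
        raise ValueError(f"unsupported report format: {output_format!r}")
    return {
        "jobType": "REPORT",               # fixed value for report jobs
        "jobName": report_name,            # the report name as shown in the UI
        "reportFormatType": fmt,
        "parameters": dict(parameters or {}),
    }


# Hypothetical parameter names and values, for illustration only.
body = report_payload(
    "Dimension Map (Dimension)",
    "PDF",
    {"Dimension Name": "ACCOUNT", "Location": "MY_LOCATION"},
)
print(json.dumps(body, indent=2))
```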

Just like with the other resources the response contains information about the job.

As the process is still running the output filename is not available. The status can be repeatedly checked until it has been updated.

Once the process is complete the output filename is included in the response. The report can then be accessed from the “outbox/reports” directory.
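Reading the filename out of the response could look like the following Python sketch. The “jobStatus” and “outputFileName” field names are assumptions about the response layout, and the sample responses are made up.

```python
def report_output_file(job):
    """Return the report file name once the job has finished, else None.

    Field names are assumed; while the job is still running the output
    file name is expected to be empty.
    """
    if job.get("jobStatus") == "RUNNING":
        return None
    return job.get("outputFileName") or None


# Made-up responses showing the two states described above.
running = {"jobId": 1234, "jobStatus": "RUNNING", "outputFileName": None}
finished = {"jobId": 1234, "jobStatus": "SUCCESS", "outputFileName": "1234.pdf"}

print(report_output_file(running))
print(report_output_file(finished))
```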

Converting this into a script only requires a minimal amount of change to the previous example.

The status can then be checked by making a GET request to the URL held in the href parameter.

The advantage of using the REST resource is the output filename is returned in the response which means the report can be accessed and then processed further if need be.

Well I think that covers as much as I need to on this new functionality, hopefully you found it useful, until next time…


Hi, I just wonder if you know whether it is possible to use OAuth instead of basic authentication with REST to make it more secure?

I believe only basic authentication is currently supported
