If you are using FDMEE to load data to a planning application then great but what about metadata, not so great at the moment, yes you can load metadata from a supported ERP source but still that is pretty limited.

Wouldn’t it be nice to be able to initiate a metadata load to a planning application from FDMEE before loading data, with a custom solution this can be made possible.

In this post I am going to concentrate on loading metadata from a relational source but the concept is the same and can be achieved if the source is file based.

Loading metadata to planning from a relational source has been available in the outline load utility (OLU) since 11.1.2.2.300, I wrote a detailed blog about the functionality which you can read about here.

I think the functionality is great as there are many situations where you want to load from a source system or a staging area instead of relying on flat files.

The problem is that it has never been incorporated into the planning UI like with flat files and has to be run from command line using the outline load utility, I didn’t want the FDMEE custom solution to have to resort to calling batch scripts on the file system as that would just over complicate matters.

The main requirements for the FDMEE solution were:

• Ability to load metadata from a relational source to planning application.

• Allow selection of dimension to load metadata to.

• Option to perform a database refresh if required.

• Non planning administrators should be able to run the metadata load.

• Be able to run from client machine without the need to log into FDMEE

• No calling of batch scripts on the file system

• No changes to the default deployment of FDMEE like adding jars.

• No hard coding of clear text passwords

• Log records loaded and rejected.

So let me go through the solution I came up with in FDMEE and then move on Planning.

You are probably aware that FDMEE allows custom Jython scripts to be registered and executed so this seemed like the logical route for the solution, after lots of research I found it is possible to use Jython to directly integrate with the OLU so no need for additional configuration or access to the file system to run the utility, it is pretty much the same concept that already exists within FDMEE.

Before getting on to the Jython script I registered a new custom script in FDMEE.

The custom script definition was set up with two query parameters, one SQL query to return the target planning application dimensions from the FDMEE repository.

The other query was to determine whether a planning refresh should be run by the simple selection of ‘Yes’ or ‘No’.

Moving on to the Jython script, now I am not going to provide every line of the script because what would be the point of trying to copy what I have written, if you are interested in this type of solution please feel free to get in touch.

The next section of the script stores the target application name, retrieve the values for the dimension to load to and whether to run a refresh from the execution parameters.

Part of the SQL is dynamically generated from the dimension name; in this example the SQL is extremely simple for demo purposes.

The process log full path and file name is generated from a combination of the FDMEE outbox, target application, dimension name and the current process ID.

What I have not shown above is the SSO token for a planning application administrator is generated at run time which means the password is not hard coded in the script, the SSO token is required when calling the outline load utility, this also allows one of my requirements for non-planning admins to be able to run the metadata load.

The next section sets the properties for the outline load utility, such as database connection, authentication, SQL and dimension information, if you want to understand in more detail about these properties then take a look a previous blog on the outline load utility.

The last section of the script calls a Jython function to update the FDMEE process log to a status of running.

A function is called to run the OLU with passed in parameters for the SSO token, application name, OLU properties and log file information.

The function returns the status of metadata load with number of records loaded and rejected.

If no records were loaded or there were rejected records, then the FDMEE process log is updated to warning status.

Finally, a link to the OLU log is added to the FDMEE process ID so it can be downloaded from process details.

I know you are not interested in the code and much prefer to see the solution in action :)

To start with I have as basic as it gets database table containing account member information for parent, member name, alias and data storage, obviously it would have additional properties in a real life scenario.

The aim is to load the members in the above table as children of the following member in the account dimension.

As the script has been registered it is now available from the script execution area in FDMEE.

Executing the script opens up a window with the option to select the target planning application dimension and whether to refresh the database.

Selecting the magnifying glass for target dimension will run the predefined SQL query to return the available dimensions in the target application.

Selecting the refresh database will also run a SQL query to populate the Yes/No values.

In my example I have selected “Account” as the target dimension and not to refresh the database.

Once executed the process will be started and the Jython script called.

One of the process steps includes details that account dimension members have successfully been loaded to the planning application.

You will notice that there is an option to download a file, this is the OLU log which I will show a little later.

The main process log contains details on the target application, dimension, whether a refresh was performed and more importantly the number of rows loaded and rejected.

If I run the custom script again but this time set to refresh the database.

The OLU will perform a database refresh from Planning to Essbase and this can be viewed in OLU log which can be downloaded from process details.

If I go back into the planning application I can confirm the account members have definitely been loaded.

All very nice but what if you want to run the metadata load outside of FDMEE, well from 11.1.2.4.210 you have the option to use the REST API to run FDMEE custom scripts.

If you want to understand all about REST and FDMEE then have a look at the two-part series I wrote, part 1 and part 2.

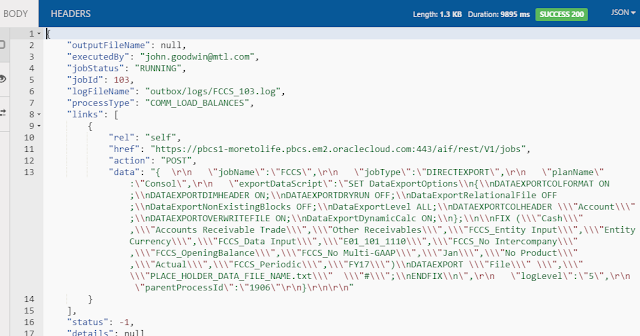

With a REST client it is easy to run a custom script with parameters, the URL format for the REST resource is:

http(s)://<webserver>:<port>/aif/rest/V1/jobs

The response will include the process ID and current status.

it is possible to keep checking the status of the job using the format:

http(s)://<webserver>:<port>/aif/rest/V1/jobs/<jobid>

One of the requirements for the solution was the ability to run the load from a client machine without the need to log into the FDMEE UI, well with REST this is now possible but you wouldn’t really want a power user to start messing with REST clients so with a little help from PowerShell this can be made possible.

I have displayed some the code for the script but the end user would not need to get involved with that and they would only need to run the script, they would be prompted to enter the dimension to load and whether to run a refresh, the script would then keep checking the status until the load was complete.

Looking back my original set of requirements I believe that all of them have been covered with this solution.

Moving on to the next piece and that is the ability to call the FDMEE custom script from within planning, in a previous post I wrote about running an FDMEE data load rule from a planning form using Groovy.

I am going to use the same concept here but things have moved on a little, at the time of writing the blog the REST API was not available in FDMEE so I had to call a servlet, I have now updated the Groovy script to call the REST API instead of the servlet.

I am not going to go into detail as much about how Groovy can be implemented in planning so please refer to my other post.

What I did was create two business rules which will attached to a planning form, the first rule was to run the FDMEE custom script to load metadata and the second to check the status of the process.

The business to run the custom script basically calls a Groovy script and passes in variables to define the dimension to load data to, perform a refresh or not and to let the script know this was metadata load, this is so I could use the same Groovy script when checking the status of the job by just changing the value of the variable.

The main section of the rule has a FIX to narrow down the intersection of data on the planning form.

Member ‘FDMEE_Run_Date’ will store the current date.

‘FDMEE_Load_Status’ will hold the value returned from the Groovy script, the value determines the status of running the FDMEE rule, in planning the member has a Smart List assigned to provide a friendly status name instead of a number.

‘FDMEE_Process_ID’ will contain the FDMEE process ID returned from the Groovy script.

In my example I am using the @CalcMgrGroovyNumber function which will return a number, the number is then processed to store the correct value for both the load status and process ID members.

The dimension variable will be picked up from the form and passed into the Groovy script, the ‘runRefresh’ variable is a runtime prompt and the value is entered when the rule is run.

The rule to check to the status of the FDMEE process is very similar except it passes in “C” for check and the FDMEE process ID into the Groovy script.

As for the Groovy script, once again there is no need for me to provide every line as only an understanding of what it is doing is important.

User credentials are encrypted into base64 to be sent in the header of the REST request, the URL to the FDMEE REST resource is defined, the jobID is included if the script is being to run to check the status of the FDMEE process.

If the script is being run to load metadata, then the payload of the REST request it built using JSON including the parameters for the target planning dimension and whether to refresh database, the method for the request is set to POST, if the script is being to check the status then the method is set as GET.

Next a Groovy method is called to execute the REST request, the REST URL, method, payload and content type as passed in.

The response of the REST request is stored and then the JSON parsed.

Finally, the status of the FDMEE process is stored and converted into a format that can be returned to the business rule.

I convert to a format so I can pass back both job the ID and status of the job as one value, the format is jobid.jobstatus so say the job ID was 2520 and has a status of -1 meaning in process then 2520.01 is passed back into the business rule.

The business rule would then take 2520 and store it against member 'FDMEE_Process_ID' and the '01' value would be converted in the business rule and stored against member 'FDMEE_Load_Status', this member has a Smart List attached so the number is displayed as meaningful text in the form.

You may be wondering what the hell is that all about so it is much nicer to see a simple demonstration.

I created the following read only form, security has been applied so the user will only have access to dimension loads that they should be allowed to run.

Two business rules have been attached to the form, one to run the metadata load and one to check the status.

If I select to run the metadata load the option to run a refresh will be displayed in the runtime prompts window.

The rule ran successfully so hopefully the account dimension build will have been started.

The form is now populated with the load status, run date and the process ID

As the status was shown as running the second rule can be run to check the status.

This time the form has been updated with a successful status.

To prove it is working as expected I can cross check the process ID in the form to the one in FDMEE.

All good, so what about when there rejected records in the load, to show this I have updated the database table to set an invalid parent member in the account dimension.

If I run the load metadata rule again and then check the status, this time it is shown as a warning.

In FDMEE the process step for loading members to the member is shown as warning, this is because in the Jython script I set the process status to warning if any records are rejected.

Checking the main process log confirms that one record has been rejected.

As I mentioned earlier, in the Jython script I attach the OLU log so it can be downloaded from the output file column in process details.

The OLU log contains the reason to why the record was rejected.

So there we go, I know there was a lot to take in but if it is the sort of solution you would like to know more about then please feel free to contact me.

Wouldn’t it be nice to be able to initiate a metadata load to a planning application from FDMEE before loading data, with a custom solution this can be made possible.

In this post I am going to concentrate on loading metadata from a relational source but the concept is the same and can be achieved if the source is file based.

Loading metadata to planning from a relational source has been available in the outline load utility (OLU) since 11.1.2.2.300, I wrote a detailed blog about the functionality which you can read about here.

I think the functionality is great as there are many situations where you want to load from a source system or a staging area instead of relying on flat files.

The problem is that it has never been incorporated into the planning UI like with flat files and has to be run from command line using the outline load utility, I didn’t want the FDMEE custom solution to have to resort to calling batch scripts on the file system as that would just over complicate matters.

The main requirements for the FDMEE solution were:

• Ability to load metadata from a relational source to planning application.

• Allow selection of dimension to load metadata to.

• Option to perform a database refresh if required.

• Non planning administrators should be able to run the metadata load.

• Be able to run from client machine without the need to log into FDMEE

• No calling of batch scripts on the file system

• No changes to the default deployment of FDMEE like adding jars.

• No hard coding of clear text passwords

• Log records loaded and rejected.

So let me go through the solution I came up with in FDMEE and then move on Planning.

You are probably aware that FDMEE allows custom Jython scripts to be registered and executed so this seemed like the logical route for the solution, after lots of research I found it is possible to use Jython to directly integrate with the OLU so no need for additional configuration or access to the file system to run the utility, it is pretty much the same concept that already exists within FDMEE.

Before getting on to the Jython script I registered a new custom script in FDMEE.

The custom script definition was set up with two query parameters, one SQL query to return the target planning application dimensions from the FDMEE repository.

The other query was to determine whether a planning refresh should be run by the simple selection of ‘Yes’ or ‘No’.

Moving on to the Jython script, now I am not going to provide every line of the script because what would be the point of trying to copy what I have written, if you are interested in this type of solution please feel free to get in touch.

The next section of the script stores the target application name, retrieve the values for the dimension to load to and whether to run a refresh from the execution parameters.

Part of the SQL is dynamically generated from the dimension name; in this example the SQL is extremely simple for demo purposes.

The process log full path and file name is generated from a combination of the FDMEE outbox, target application, dimension name and the current process ID.

What I have not shown above is the SSO token for a planning application administrator is generated at run time which means the password is not hard coded in the script, the SSO token is required when calling the outline load utility, this also allows one of my requirements for non-planning admins to be able to run the metadata load.

The next section sets the properties for the outline load utility, such as database connection, authentication, SQL and dimension information, if you want to understand in more detail about these properties then take a look a previous blog on the outline load utility.

The last section of the script calls a Jython function to update the FDMEE process log to a status of running.

A function is called to run the OLU with passed in parameters for the SSO token, application name, OLU properties and log file information.

The function returns the status of metadata load with number of records loaded and rejected.

If no records were loaded or there were rejected records, then the FDMEE process log is updated to warning status.

Finally, a link to the OLU log is added to the FDMEE process ID so it can be downloaded from process details.

I know you are not interested in the code and much prefer to see the solution in action :)

To start with I have as basic as it gets database table containing account member information for parent, member name, alias and data storage, obviously it would have additional properties in a real life scenario.

The aim is to load the members in the above table as children of the following member in the account dimension.

As the script has been registered it is now available from the script execution area in FDMEE.

Executing the script opens up a window with the option to select the target planning application dimension and whether to refresh the database.

Selecting the magnifying glass for target dimension will run the predefined SQL query to return the available dimensions in the target application.

In my example I have selected “Account” as the target dimension and not to refresh the database.

Once executed the process will be started and the Jython script called.

One of the process steps includes details that account dimension members have successfully been loaded to the planning application.

You will notice that there is an option to download a file, this is the OLU log which I will show a little later.

The main process log contains details on the target application, dimension, whether a refresh was performed and more importantly the number of rows loaded and rejected.

If I run the custom script again but this time set to refresh the database.

The OLU will perform a database refresh from Planning to Essbase and this can be viewed in OLU log which can be downloaded from process details.

If I go back into the planning application I can confirm the account members have definitely been loaded.

All very nice but what if you want to run the metadata load outside of FDMEE, well from 11.1.2.4.210 you have the option to use the REST API to run FDMEE custom scripts.

If you want to understand all about REST and FDMEE then have a look at the two-part series I wrote, part 1 and part 2.

With a REST client it is easy to run a custom script with parameters, the URL format for the REST resource is:

http(s)://<webserver>:<port>/aif/rest/V1/jobs

The response will include the process ID and current status.

it is possible to keep checking the status of the job using the format:

http(s)://<webserver>:<port>/aif/rest/V1/jobs/<jobid>

One of the requirements for the solution was the ability to run the load from a client machine without the need to log into the FDMEE UI, well with REST this is now possible but you wouldn’t really want a power user to start messing with REST clients so with a little help from PowerShell this can be made possible.

I have displayed some the code for the script but the end user would not need to get involved with that and they would only need to run the script, they would be prompted to enter the dimension to load and whether to run a refresh, the script would then keep checking the status until the load was complete.

Looking back my original set of requirements I believe that all of them have been covered with this solution.

Moving on to the next piece and that is the ability to call the FDMEE custom script from within planning, in a previous post I wrote about running an FDMEE data load rule from a planning form using Groovy.

I am going to use the same concept here but things have moved on a little, at the time of writing the blog the REST API was not available in FDMEE so I had to call a servlet, I have now updated the Groovy script to call the REST API instead of the servlet.

I am not going to go into detail as much about how Groovy can be implemented in planning so please refer to my other post.

What I did was create two business rules which will attached to a planning form, the first rule was to run the FDMEE custom script to load metadata and the second to check the status of the process.

The business to run the custom script basically calls a Groovy script and passes in variables to define the dimension to load data to, perform a refresh or not and to let the script know this was metadata load, this is so I could use the same Groovy script when checking the status of the job by just changing the value of the variable.

The main section of the rule has a FIX to narrow down the intersection of data on the planning form.

Member ‘FDMEE_Run_Date’ will store the current date.

‘FDMEE_Load_Status’ will hold the value returned from the Groovy script, the value determines the status of running the FDMEE rule, in planning the member has a Smart List assigned to provide a friendly status name instead of a number.

‘FDMEE_Process_ID’ will contain the FDMEE process ID returned from the Groovy script.

In my example I am using the @CalcMgrGroovyNumber function which will return a number, the number is then processed to store the correct value for both the load status and process ID members.

The dimension variable will be picked up from the form and passed into the Groovy script, the ‘runRefresh’ variable is a runtime prompt and the value is entered when the rule is run.

The rule to check to the status of the FDMEE process is very similar except it passes in “C” for check and the FDMEE process ID into the Groovy script.

As for the Groovy script, once again there is no need for me to provide every line as only an understanding of what it is doing is important.

User credentials are encrypted into base64 to be sent in the header of the REST request, the URL to the FDMEE REST resource is defined, the jobID is included if the script is being to run to check the status of the FDMEE process.

If the script is being run to load metadata, then the payload of the REST request it built using JSON including the parameters for the target planning dimension and whether to refresh database, the method for the request is set to POST, if the script is being to check the status then the method is set as GET.

Next a Groovy method is called to execute the REST request, the REST URL, method, payload and content type as passed in.

The response of the REST request is stored and then the JSON parsed.

Finally, the status of the FDMEE process is stored and converted into a format that can be returned to the business rule.

I convert to a format so I can pass back both job the ID and status of the job as one value, the format is jobid.jobstatus so say the job ID was 2520 and has a status of -1 meaning in process then 2520.01 is passed back into the business rule.

The business rule would then take 2520 and store it against member 'FDMEE_Process_ID' and the '01' value would be converted in the business rule and stored against member 'FDMEE_Load_Status', this member has a Smart List attached so the number is displayed as meaningful text in the form.

You may be wondering what the hell is that all about so it is much nicer to see a simple demonstration.

I created the following read only form, security has been applied so the user will only have access to dimension loads that they should be allowed to run.

Two business rules have been attached to the form, one to run the metadata load and one to check the status.

If I select to run the metadata load the option to run a refresh will be displayed in the runtime prompts window.

The rule ran successfully so hopefully the account dimension build will have been started.

The form is now populated with the load status, run date and the process ID

As the status was shown as running the second rule can be run to check the status.

This time the form has been updated with a successful status.

To prove it is working as expected I can cross check the process ID in the form to the one in FDMEE.

All good, so what about when there rejected records in the load, to show this I have updated the database table to set an invalid parent member in the account dimension.

If I run the load metadata rule again and then check the status, this time it is shown as a warning.

In FDMEE the process step for loading members to the member is shown as warning, this is because in the Jython script I set the process status to warning if any records are rejected.

Checking the main process log confirms that one record has been rejected.

As I mentioned earlier, in the Jython script I attach the OLU log so it can be downloaded from the output file column in process details.

The OLU log contains the reason to why the record was rejected.

So there we go, I know there was a lot to take in but if it is the sort of solution you would like to know more about then please feel free to contact me.