In the last part I took at look at new hybrid functionality added to FDMEE in 11.1.2.4.210 to allow integration between on-premise FDMEE and Oracle FCCS.

The post covered in detail exporting data from an on-premise planning application and loading into a target FCCS application, the aim today is to reverse that process and extract data from a FCCS application and then load into an on-premise planning application.

I am going to assume you have read through the previous post and the FCCS application has been added as a target application.

Technically in this scenario it is the going to be the source but it does need to exist as a target application.

Once again I am going to keep things as simple as possible and the goal is to export data from the intersection shown in the following form:

To start with an import format is created with the source being the FCCS application and the target set as a planning application.

Where applicable the FCCS dimensions are then mapped to the planning application dimensions.

No surprises with the location and the import format is selected.

On to the load rule and there is a slight difference between when FCCS is the source and when it is the target.

If you take a look at when loading from on-premise planning to FCCS the target Essbase database is called “Cube”.

When extracting from a FCCS application the source Essbase database is called “Plan Type”, like I said in my previous post I hope that a decision is made and implemented where only one naming convention is used to remove the confusion.

Within the load rule source filters are added to define intersection to extract data from the FCCS application.

At this point it is a good opportunity to go back to the target application and click “Refresh Members”

I went into detail in the last post on how this works but basically it will extract the current list of members for each dimension from the target FCCS application and push them into FDMEE, this will allow target members to be populated in the member selector.

I did notice that after refreshing members the currency dimension member list was still blank so they had to be entered manually into the source filter definition.

It is worth mentioning that the method that FDMEE uses to extract data from a BSO database is the Essbase calculation command DATAEXPORT, currently there is no way in FDMEE to change any of the options being used with the DATAEXPORT command so there are a number of limitations, an example being if you need to extract data from any sparse dynamic calc members then it is not possible to do so at the moment.

That leads me on to the target options in the load rule, there is the option to set different types of load methods.

The values define whether to load numeric data using an Essbase load rule with a file or directly with SQL, the “All Data Types” option is for loading text data with the Outline Load Utility.

Please note when the source is a EPM cloud application the setting makes no difference to the way it extracts data, it only controls the way FDMEE will load data from the FDMEE repository to the target on-premise application.

Currently only numeric data can be extracted from a source EPM application so there is not much point in changing the load method from the default, maybe extracting all data types will be available in a future release.

Next the data load mappings are defined which I don’t need to cover as I simply created one to one explicit mapping against the members that data was being extracted.

On to running the rule and to begin with I am only selecting to import from the source FCCS application.

The on-premise FDMEE process details show that the data was extracted from the FCCS application, the exported file is then loaded to the FDMEE repository and mapped.

There is an equivalent process within FCCS data management that shows the step of extracting data from the application.

At this point in the on-premise FDMEE workbench the extracted data has been imported and mappings applied.

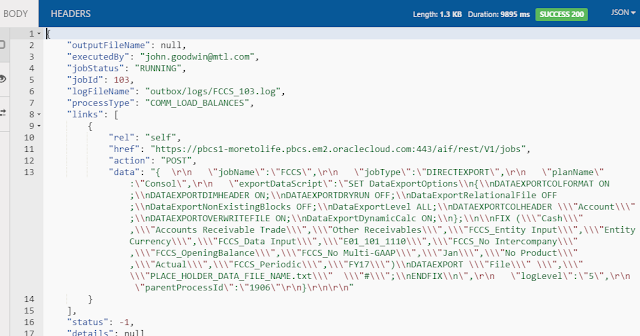

So what is going on behind the scenes to extract the data from the FCCS application, well this is where a REST comes into play.

A POST request against a data management REST resource.

The resource URL is the same one that it used in most of the interactions with data management in the cloud.

https://<cloud_instance>/aif/rest/jobs

It is the JSON input payload of the request that defines what data to extract.

The jobName value is the name of the FCCS application.

The jobType value for a data export is always “DIRECTEXPORT”

The planName is the plan type (cube) name.

The exportDataScript value contains an Essbase calculation script using the DATAEXPORT command, the FIX in the calc script will be generated from the source filters in the data load rule.

If the source was an ASO database, then MDX would be generated for the exportDataScript property value.

In my example the formatted calc script would look like:

SET DataExportOptions

{

DATAEXPORTCOLFORMAT ON;

DATAEXPORTDIMHEADER ON;

DATAEXPORTDRYRUN OFF;

DataExportRelationalFile OFF;

DataExportNonExistingBlocks OFF;

DataExportLevel ALL;

DATAEXPORTCOLHEADER "Account";

DATAEXPORTOVERWRITEFILE ON;

DataExportDynamicCalc ON;

};

FIX ("Cash","Accounts Receivable Trade","Other Receivables","FCCS_Entity Input","Entity Currency","FCCS_Data Input","E01_101_1110","FCCS_No Intercompany","FCCS_OpeningBalance","FCCS_No Multi-GAAP","Jan","No Product","Actual","FCCS_Periodic","FY17")

DATAEXPORT "File" "," PLACE_HOLDER_DATA_FILE_NAME.txt " "#";

ENDFIX

The JSON response includes details on the job that is being executed including the status and ID.

The process is then repeatedly checked with a REST call using the job ID in the format:

https://<cloud_instance>/aif/rest/jobs/<jobID>

In the original REST request the DATAEXPORT filename was set as “PLACE_HOLDER_DATA_FILE_NAME.txt”, when the script is run in the cloud instance the filename is updated to the format:

<target_app_name>_<cloud_dm_process_id>.dat

So in my example because the process id in the cloud DM was 45 and the target application name is called FCCS the filename generated is FCCS_45.dat

One the export has completed the exported file is moved into the lcm folder ready to be downloaded.

Now one of the migration REST resources is called to download the file, the URL format for the resource is

https://<cloud_instance>/interop/rest/11.1.2.3.600/applicationsnapshots/<filename>/contents

The file is downloaded to the on-premise FDMEE data folder and the file is renamed using the format

<target_app_name>_<fdmee_process_id>.pbcs.dat

Even though this is FCCS the same process as if it was PBCS is being followed hence the filename containing pbcs, this is also noticeably in the process logs with many references to PBCS, maybe the future was never considered when first developed.

Next the file is deleted from the cloud instance using the same URL but with a DELETE method.

From this point standard FDMEE functionality is resumed to load the file into the FDMEE repository and then map.

Data can then be exported from FDMEE and loaded into the target application.

For good measure I created a form to show the data had been successfully loaded to the on-premise planning application.

So that concludes this hybrid journey from FCCS to on-premise.

The post covered in detail exporting data from an on-premise planning application and loading into a target FCCS application, the aim today is to reverse that process and extract data from a FCCS application and then load into an on-premise planning application.

I am going to assume you have read through the previous post and the FCCS application has been added as a target application.

Technically in this scenario it is the going to be the source but it does need to exist as a target application.

Once again I am going to keep things as simple as possible and the goal is to export data from the intersection shown in the following form:

To start with an import format is created with the source being the FCCS application and the target set as a planning application.

Where applicable the FCCS dimensions are then mapped to the planning application dimensions.

No surprises with the location and the import format is selected.

On to the load rule and there is a slight difference between when FCCS is the source and when it is the target.

If you take a look at when loading from on-premise planning to FCCS the target Essbase database is called “Cube”.

When extracting from a FCCS application the source Essbase database is called “Plan Type”, like I said in my previous post I hope that a decision is made and implemented where only one naming convention is used to remove the confusion.

Within the load rule source filters are added to define intersection to extract data from the FCCS application.

At this point it is a good opportunity to go back to the target application and click “Refresh Members”

I went into detail in the last post on how this works but basically it will extract the current list of members for each dimension from the target FCCS application and push them into FDMEE, this will allow target members to be populated in the member selector.

I did notice that after refreshing members the currency dimension member list was still blank so they had to be entered manually into the source filter definition.

It is worth mentioning that the method that FDMEE uses to extract data from a BSO database is the Essbase calculation command DATAEXPORT, currently there is no way in FDMEE to change any of the options being used with the DATAEXPORT command so there are a number of limitations, an example being if you need to extract data from any sparse dynamic calc members then it is not possible to do so at the moment.

That leads me on to the target options in the load rule, there is the option to set different types of load methods.

The values define whether to load numeric data using an Essbase load rule with a file or directly with SQL, the “All Data Types” option is for loading text data with the Outline Load Utility.

Please note when the source is a EPM cloud application the setting makes no difference to the way it extracts data, it only controls the way FDMEE will load data from the FDMEE repository to the target on-premise application.

Currently only numeric data can be extracted from a source EPM application so there is not much point in changing the load method from the default, maybe extracting all data types will be available in a future release.

Next the data load mappings are defined which I don’t need to cover as I simply created one to one explicit mapping against the members that data was being extracted.

On to running the rule and to begin with I am only selecting to import from the source FCCS application.

The on-premise FDMEE process details show that the data was extracted from the FCCS application, the exported file is then loaded to the FDMEE repository and mapped.

There is an equivalent process within FCCS data management that shows the step of extracting data from the application.

At this point in the on-premise FDMEE workbench the extracted data has been imported and mappings applied.

So what is going on behind the scenes to extract the data from the FCCS application, well this is where a REST comes into play.

A POST request against a data management REST resource.

The resource URL is the same one that it used in most of the interactions with data management in the cloud.

https://<cloud_instance>/aif/rest/jobs

It is the JSON input payload of the request that defines what data to extract.

The jobName value is the name of the FCCS application.

The jobType value for a data export is always “DIRECTEXPORT”

The planName is the plan type (cube) name.

The exportDataScript value contains an Essbase calculation script using the DATAEXPORT command, the FIX in the calc script will be generated from the source filters in the data load rule.

If the source was an ASO database, then MDX would be generated for the exportDataScript property value.

In my example the formatted calc script would look like:

SET DataExportOptions

{

DATAEXPORTCOLFORMAT ON;

DATAEXPORTDIMHEADER ON;

DATAEXPORTDRYRUN OFF;

DataExportRelationalFile OFF;

DataExportNonExistingBlocks OFF;

DataExportLevel ALL;

DATAEXPORTCOLHEADER "Account";

DATAEXPORTOVERWRITEFILE ON;

DataExportDynamicCalc ON;

};

FIX ("Cash","Accounts Receivable Trade","Other Receivables","FCCS_Entity Input","Entity Currency","FCCS_Data Input","E01_101_1110","FCCS_No Intercompany","FCCS_OpeningBalance","FCCS_No Multi-GAAP","Jan","No Product","Actual","FCCS_Periodic","FY17")

DATAEXPORT "File" "," PLACE_HOLDER_DATA_FILE_NAME.txt " "#";

ENDFIX

The JSON response includes details on the job that is being executed including the status and ID.

The process is then repeatedly checked with a REST call using the job ID in the format:

https://<cloud_instance>/aif/rest/jobs/<jobID>

In the original REST request the DATAEXPORT filename was set as “PLACE_HOLDER_DATA_FILE_NAME.txt”, when the script is run in the cloud instance the filename is updated to the format:

<target_app_name>_<cloud_dm_process_id>.dat

So in my example because the process id in the cloud DM was 45 and the target application name is called FCCS the filename generated is FCCS_45.dat

One the export has completed the exported file is moved into the lcm folder ready to be downloaded.

Now one of the migration REST resources is called to download the file, the URL format for the resource is

https://<cloud_instance>/interop/rest/11.1.2.3.600/applicationsnapshots/<filename>/contents

The file is downloaded to the on-premise FDMEE data folder and the file is renamed using the format

<target_app_name>_<fdmee_process_id>.pbcs.dat

Even though this is FCCS the same process as if it was PBCS is being followed hence the filename containing pbcs, this is also noticeably in the process logs with many references to PBCS, maybe the future was never considered when first developed.

Next the file is deleted from the cloud instance using the same URL but with a DELETE method.

From this point standard FDMEE functionality is resumed to load the file into the FDMEE repository and then map.

Data can then be exported from FDMEE and loaded into the target application.

For good measure I created a form to show the data had been successfully loaded to the on-premise planning application.

So that concludes this hybrid journey from FCCS to on-premise.

No comments:

Post a Comment

Note: only a member of this blog may post a comment.