If you are looking to extend the default functionality of the integration agent, then events and scripting are there to provide just this.

It could be that you want to override the SQL or bind variables that have been defined in Data Integration. You may want to extract data from a database that is not currently available with standard functionality such as SAP Hana. You may want to pull data from a cloud provider or other data sources. It could be that you wish to initiate other processes or send email notifications. This can all be achieved with the custom scripting option.

Groovy or Jython are the scripting languages supported which allow this extended functionality. I went through details of how to configure them with the agent in Part 2 of this series.

If you are familiar with on-premise FDMEE then you will no doubt be aware of events and scripting. If you are not, basically during different stages of the integration process a custom script will be executed. With the integration agent there are currently four events and scripts.

Before Extract - This script is executed before the agent connects to the data source and executes the SQL query.

After Extract - This script is executed after the agent has extracted the data and produced an extract data file.

Before Upload - This script is executed before the data file is uploaded to EPM Cloud.

After upload – This script is executed after the data file has been uploaded to EPM Cloud.

If Groovy has been installed and the home location been defined in the agent initialisation file,

when an integration is run, the agent tries to execute each Groovy event script. As I have not yet created any scripts, they are not found.

17:47:14:094 :: --------------- Executing Custom Event :BefExtract ---------------

17:47:14:407 :: Script: E:\EPMAgent\appdata\scripts\BefExtract.groovy Not found.

17:47:14:705 :: Writing extract output to file: E:\EPMAgent\appdata\data\1016.dat

17:47:14:720 :: --------------- Completed execution for sessionId:1016 ---------------

17:47:14:720 :: --------------- Executing Custom Event :AftExtract ---------------

17:47:14:720 :: Script: E:\EPMAgent\appdata\scripts\AftExtract.groovy Not found.

17:47:14:720 :: --------------- Executing Custom Event :BefUpload ---------------

17:47:14:720 :: Script: E:\EPMAgent\appdata\scripts\BefUpload.groovy Not found.

17:47:14:720 :: Commencing file upload to cloud for file:E:\EPMAgent\appdata\data\1016.dat

17:47:15:182 :: File Upload successfully complete

17:47:15:182 :: --------------- Executing Custom Event :AftUpload ---------------

17:47:15:182 :: Script: E:\EPMAgent\appdata\scripts\AftUpload.groovy Not found.

If Jython has been installed and the home location defined in the agent initialisation file,

the log will contain a Jython script execution for each event.

17:51:28:869 :: --------------- Executing Custom Event :BefExtract ---------------

17:51:30:682 :: Script: E:\EPMAgent\appdata\scripts\BefExtract.py Not found.

17:51:31:404 :: Writing extract output to file: E:\EPMAgent\appdata\data\1015.dat

17:51:31:417 :: --------------- Completed execution for sessionId:1015 ---------------

17:51:31:417 :: --------------- Executing Custom Event :AftExtract ---------------

17:51:31:417 :: Script: E:\EPMAgent\appdata\scripts\AftExtract.py Not found.

17:51:31:417 :: --------------- Executing Custom Event :BefUpload ---------------

17:51:31:417 :: Script: E:\EPMAgent\appdata\scripts\BefUpload.py Not found.

17:51:31:417 :: Commencing file upload to cloud for file:E:\EPMAgent\appdata\data\1015.dat

17:51:32:215 :: File Upload successfully complete

17:51:32:215 :: --------------- Executing Custom Event :AftUpload ---------------

17:51:32:215 :: Script: E:\EPMAgent\appdata\scripts\AftUpload.py Not found.

If I create a before extract script and place it in the application data scripts folder

this time in the log the script will be found and executed.

17:58:40:412 :: --------------- Executing Custom Event :BefExtract ---------------

17:58:40:412 :: Executing script:E:\EPMAgent\appdata\scripts\BefExtract.py

For each scripting type there are API methods available to perform actions. These include writing to the process log, fetching and updating SQL queries and bind variables. There is also the ability to skip the step to extract the data, this allows you to override the default extract process and create your own data file. So you could skip the extract step, write your own code to connect to a different data source, extract data and produce a file that is uploaded to EPM Cloud. The file must contain the exact columns that have been defined in EPM Cloud and be named with the correct format of <process_id>.dat. More on this later.

Similar to on-premise FDMEE there are context functions available. These provide information such as the agent application data directory, Job ID (process id), data filename and delimiter.

In on-premise FDMEE you would access these functions with fdmeeContext[“FUNCTION”], with the agent you use agentContext[“FUNCTION”].

In addition to the context functions there is also the API. If you have worked with the API in on-premise then you will know that methods are called with fdmAPI.method. With the agent it is agentAPI.method.

To demonstrate the available contexts, in the before extract Jython script I added code to iterate through them and print them to the agent command window. The API “logInfo” method is used to write the information to the process log. There is an API method “logError” to log error messages to the process log.

In the above script I have also provided an example of accessing a context directly.

When the integration is executed, in the command window the contexts functions and values are outputted.

As well as the command window, the context information has been written to the process log.

So now let us look at using the API to retrieve the SQL query, then update it before it is executed.

In Data Integration, the following query has been defined to extract data.

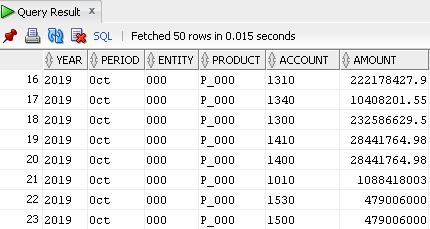

As an example, the source data includes year and period.

The query could be updated to include the year and period or have bind variables defined and set in the load rule, but as I want to demonstrate the API functionality, I am going to retrieve the SQL query in the before extract script using the API method “getQuery”.

When the integration is run, the “BefExtract” script will be executed and the SQL query stored and displayed.

Let us expand on that. This time I am going generate the current month and year. Retrieve the SQL query and enhance it to filter the data by current month and year. The API method “updateQuery” is then called passing in the updated query, the extract will then be run using the modified query.

I could have just as easily ignored the query defined in Data Integration. Created a new query and then used the “updateQuery” method to override the original query.

Running the previous example shows the original query has been updated to filter by current month and year. The updated query is then executed.

Moving on to retrieving and updating bind variables using the API methods “getBindVariables” and “setBindVariables”.

A new query was created in Data Integration to include bind variables for year and period. The bind variables were added as application filters in Data Management, please refer to part 3 if you are not clear on bind variables.

The query was defined at data load rule level and set along with the bind variables in the source filters of the load rule.

To be complete, in Data Integration these are set in the integration option filters.

Having to manually update the variables is not great and prone to error, so let us go through an example of overriding them with custom scripting.

Overriding the bind variables defined in the integration can be achieved in the before extract event script.

The following script generates the current month and year. The bind variables are retrieved using the API method “getBindVariables” and then updated with the current month/year values using the method “setBindVariables”.

If the integration is run, you can see the original bind variables values have been updated with the current month and year.

The example provided a way of updating the variables but does not offer much flexibility, they will always be overridden in the before extract script.

How about being able to use the values defined in the integration or override them. Also, instead of generating the year and period in the script let them be controlled by substitution variables.

Let us go through an example of how to achieve this. The following substitution variables for current month and year exist in the target application. These values will be retrieved using the REST API resource for substitution variables.

Let me break the before extract script into chunks and go through it.

The first section prints the SQL query which will be executed to extract the data.

The bind variables are retrieved, stored and printed.

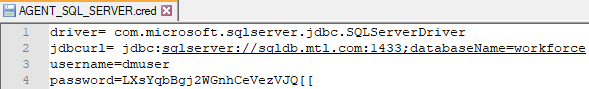

An empty dictionary is created which will hold the EPM Cloud details, these will be read from the agent initialisation file.

The target application name is defined.

The modules that are required in the script are imported. urrllb2 for making the REST API call, base64 to generate the encoded authorisation header and json to process the response from the REST API call.

At this point, if the integration is run the query and bind variables will be printed.

The next section iterates through each of the bind variables.

If the bind variable starts and ends with @ then this means that substitution variable values should be retrieved.

If the Cloud details dictionary is empty, then call a function to extract this information from the agent initialisation file. I have not included the function for this example.

The REST API URL to retrieve sub var information is generated.

As the bind variables start and end with @, the first iteration through the variables will retrieve and display the Cloud details. The REST API will be generated and outputted.

The final section of the script generates the REST API request to retrieve the sub var details.

The Cloud credentials are base64 encoded and added to the authorisation header.

The JSON response from the REST call is stored. An example of the JSON response is:

If the sub var value starts “FY” it is converted into the correct format for the SQL query, for example FY19 is converted to 2019.

The bind variables are updated with the sub var values and printed. The updated bind variables values will be used in the SQL extract query.

Displaying the full output shows that the original bind variables have been overridden with substitution variable values. This means the data extract can be driven by sub vars in the target application.

On to the final API method “skipAction” which basically allows you to bypass the data extract query being executed in the “BefExtract” script or the file being uploaded in the “BefUpload” script.

Being able to skip the data extract gives you the power with custom scripting to connect to any data source, for example it could be a database other than Oracle or SQL Server. It could be that data resides within a cloud service and you want to use a web service to extract it.

The rules around skipping the data extract are that you must produce a data file in the application data directory, it must contain the column headers that have been defined in EPM Cloud and the file should have the naming convention of <process_id>.dat. This is so the remaining steps of agent integration process can flow through as normal.

I am going to go through an example of bypassing the extract step. I will connect to a Web Service API to extract exchange rates. The exchange rates will be returned in JSON format, these will be processed and written to a data file. The agent will upload the data file and the standard integration process will continue to load the exchange rates to the target application.

The concept will be similar to the one I recently blogged about: using Groovy, enterprise planning and web services. If you want the full details, you can read all about here.

Up to now, my examples in this post have all been Jython based so I thought I would flip over to Groovy to demo this next one.

I am going to keep the objective as simple as possible and load latest exchange rates to a target application so they will be visible in the following form.

This means I will only need to create a data file with the currency code and exchange rate value which will be like:

The next step is to create the application data source in Data Management which will be based on the headings in the file above. Please refer to part 3 or part 4 in this series to get in-depth details about creating a data source and setting up an agent integration.

A new “Data Source” is added.

The file containing the headers is uploaded.

You still must select the source system as an on-premise database even though it is not. I don’t agree with some of the set up when overriding the default functionality, it may change in the future.

The application data source name will be based on the selected filename.

The header columns in the file have been converted into dimension names.

You still need to assign a query in the application filters. It doesn’t matter what it is if you are overriding. I am going to use the query to provide the details of the column headers that will be generated in the data extract file.

A JDBC Driver must be selected and URL populated even though they are not going to be used. Something else I don’t really agree with. Any value can be defined in the JDBC URL though.

I defined a query that will just return the header names I need in the extract file. Any query string can be entered as the query because it is not validated.

The remaining steps for the integration are just the same as a standard file based one so there is no need for me to go over it. I now have the following integration ready.

The next part of the objective is to retrieve current exchange rates for a list of input currencies through a web service API.

I am using a free API which requires the following URL format:

https://free.currconv.com/api/v7/convert?q=<CURRENCY_CODES>&compact=ultra&apiKey=<API_KEY>

The currency conversion section of the URL needs to follow:

<FROM_CURRENCY>_<TO_CURRENCY>

The base currency is GBP so to convert from GBP to Euro would be “GBP_EUR”.

Based on the input currencies the URL I need to generate will be:

https://free.currconv.com/api/v7/convert?q=GBP_CAD,GBP_EUR,GBP_SEK,GBP_USD&compact=ultra&apiKey=<API_KEY>

This will return a JSON response of:

This means the before extract Groovy script will need to generate the URL, make a request using the URL, process the response and write to a file.

I will give an overview to the script I came up with.

The base currency, input currencies, API key, and web service mode are defined.

The data extract filename is generated from the context function “DATAFILENAME”.

The currencies are then converted into the correct format for the Web Service API.

The URL to return the exchange rates values is created.

A request is made to the URL and the JSON response stored.

The extract data file is created, the headers in the file are based on the query that is retrieved using the API method “getQuery”.

The rows in the file are created by iterating through the JSON response and writing out the currency code and exchange rate value.

Finally, and most importantly, the API method “skipAction” is called, which stops the standard extract functionality from being run.

Usually I would be writing to the process log and not using print. I have only done this for demoing.

If the integration is run, you can see clearly from the output what is happening in the process.

The extract file has been created in the correct location with the required naming convention. The context function pretty much handles all of that.

The data extract file contains the header, currency codes and exchange rate values.

The file is automatically uploaded by the agent and the remaining steps of the integration are carried out.

The process log also shows that the data extract step was skipped.

13:40:38:308 :: --------------- Executing Custom Event :BefExtract ---------------

13:40:38:620 :: Executing script:C:\EPMAgent\appdata\scripts\BefExtract.groovy

13:40:39:464 :: ---------------Completed execution for sessionId:1101 with extract skipped.

Process details confirms the integration was successful.

Opening the data form again shows that exchange rates have been loaded.

I think that covers events, custom scripting, context functions and all the currently available API methods.

Next up…running multiple agents and cluster assignments.

It could be that you want to override the SQL or bind variables that have been defined in Data Integration. You may want to extract data from a database that is not currently available with standard functionality such as SAP Hana. You may want to pull data from a cloud provider or other data sources. It could be that you wish to initiate other processes or send email notifications. This can all be achieved with the custom scripting option.

Groovy or Jython are the scripting languages supported which allow this extended functionality. I went through details of how to configure them with the agent in Part 2 of this series.

If you are familiar with on-premise FDMEE then you will no doubt be aware of events and scripting. If you are not, basically during different stages of the integration process a custom script will be executed. With the integration agent there are currently four events and scripts.

Before Extract - This script is executed before the agent connects to the data source and executes the SQL query.

After Extract - This script is executed after the agent has extracted the data and produced an extract data file.

Before Upload - This script is executed before the data file is uploaded to EPM Cloud.

After upload – This script is executed after the data file has been uploaded to EPM Cloud.

If Groovy has been installed and the home location been defined in the agent initialisation file,

when an integration is run, the agent tries to execute each Groovy event script. As I have not yet created any scripts, they are not found.

17:47:14:094 :: --------------- Executing Custom Event :BefExtract ---------------

17:47:14:407 :: Script: E:\EPMAgent\appdata\scripts\BefExtract.groovy Not found.

17:47:14:705 :: Writing extract output to file: E:\EPMAgent\appdata\data\1016.dat

17:47:14:720 :: --------------- Completed execution for sessionId:1016 ---------------

17:47:14:720 :: --------------- Executing Custom Event :AftExtract ---------------

17:47:14:720 :: Script: E:\EPMAgent\appdata\scripts\AftExtract.groovy Not found.

17:47:14:720 :: --------------- Executing Custom Event :BefUpload ---------------

17:47:14:720 :: Script: E:\EPMAgent\appdata\scripts\BefUpload.groovy Not found.

17:47:14:720 :: Commencing file upload to cloud for file:E:\EPMAgent\appdata\data\1016.dat

17:47:15:182 :: File Upload successfully complete

17:47:15:182 :: --------------- Executing Custom Event :AftUpload ---------------

17:47:15:182 :: Script: E:\EPMAgent\appdata\scripts\AftUpload.groovy Not found.

If Jython has been installed and the home location defined in the agent initialisation file,

the log will contain a Jython script execution for each event.

17:51:28:869 :: --------------- Executing Custom Event :BefExtract ---------------

17:51:30:682 :: Script: E:\EPMAgent\appdata\scripts\BefExtract.py Not found.

17:51:31:404 :: Writing extract output to file: E:\EPMAgent\appdata\data\1015.dat

17:51:31:417 :: --------------- Completed execution for sessionId:1015 ---------------

17:51:31:417 :: --------------- Executing Custom Event :AftExtract ---------------

17:51:31:417 :: Script: E:\EPMAgent\appdata\scripts\AftExtract.py Not found.

17:51:31:417 :: --------------- Executing Custom Event :BefUpload ---------------

17:51:31:417 :: Script: E:\EPMAgent\appdata\scripts\BefUpload.py Not found.

17:51:31:417 :: Commencing file upload to cloud for file:E:\EPMAgent\appdata\data\1015.dat

17:51:32:215 :: File Upload successfully complete

17:51:32:215 :: --------------- Executing Custom Event :AftUpload ---------------

17:51:32:215 :: Script: E:\EPMAgent\appdata\scripts\AftUpload.py Not found.

If I create a before extract script and place it in the application data scripts folder

17:58:40:412 :: --------------- Executing Custom Event :BefExtract ---------------

17:58:40:412 :: Executing script:E:\EPMAgent\appdata\scripts\BefExtract.py

For each scripting type there are API methods available to perform actions. These include writing to the process log, fetching and updating SQL queries and bind variables. There is also the ability to skip the step to extract the data, this allows you to override the default extract process and create your own data file. So you could skip the extract step, write your own code to connect to a different data source, extract data and produce a file that is uploaded to EPM Cloud. The file must contain the exact columns that have been defined in EPM Cloud and be named with the correct format of <process_id>.dat. More on this later.

Similar to on-premise FDMEE there are context functions available. These provide information such as the agent application data directory, Job ID (process id), data filename and delimiter.

In on-premise FDMEE you would access these functions with fdmeeContext[“FUNCTION”], with the agent you use agentContext[“FUNCTION”].

In addition to the context functions there is also the API. If you have worked with the API in on-premise then you will know that methods are called with fdmAPI.method. With the agent it is agentAPI.method.

To demonstrate the available contexts, in the before extract Jython script I added code to iterate through them and print them to the agent command window. The API “logInfo” method is used to write the information to the process log. There is an API method “logError” to log error messages to the process log.

In the above script I have also provided an example of accessing a context directly.

When the integration is executed, in the command window the contexts functions and values are outputted.

So now let us look at using the API to retrieve the SQL query, then update it before it is executed.

In Data Integration, the following query has been defined to extract data.

As an example, the source data includes year and period.

The query could be updated to include the year and period or have bind variables defined and set in the load rule, but as I want to demonstrate the API functionality, I am going to retrieve the SQL query in the before extract script using the API method “getQuery”.

When the integration is run, the “BefExtract” script will be executed and the SQL query stored and displayed.

Let us expand on that. This time I am going generate the current month and year. Retrieve the SQL query and enhance it to filter the data by current month and year. The API method “updateQuery” is then called passing in the updated query, the extract will then be run using the modified query.

I could have just as easily ignored the query defined in Data Integration. Created a new query and then used the “updateQuery” method to override the original query.

Running the previous example shows the original query has been updated to filter by current month and year. The updated query is then executed.

Moving on to retrieving and updating bind variables using the API methods “getBindVariables” and “setBindVariables”.

A new query was created in Data Integration to include bind variables for year and period. The bind variables were added as application filters in Data Management, please refer to part 3 if you are not clear on bind variables.

The query was defined at data load rule level and set along with the bind variables in the source filters of the load rule.

To be complete, in Data Integration these are set in the integration option filters.

Having to manually update the variables is not great and prone to error, so let us go through an example of overriding them with custom scripting.

Overriding the bind variables defined in the integration can be achieved in the before extract event script.

The following script generates the current month and year. The bind variables are retrieved using the API method “getBindVariables” and then updated with the current month/year values using the method “setBindVariables”.

If the integration is run, you can see the original bind variables values have been updated with the current month and year.

The example provided a way of updating the variables but does not offer much flexibility, they will always be overridden in the before extract script.

How about being able to use the values defined in the integration or override them. Also, instead of generating the year and period in the script let them be controlled by substitution variables.

Let us go through an example of how to achieve this. The following substitution variables for current month and year exist in the target application. These values will be retrieved using the REST API resource for substitution variables.

In the integration filters, if the values start and end with @ then that means the values should be derived from substitution variables. The sub var name is between the @ signs. If the variable does not start and end with @ then the values in the integration will not be overridden.

Let me break the before extract script into chunks and go through it.

The first section prints the SQL query which will be executed to extract the data.

The bind variables are retrieved, stored and printed.

An empty dictionary is created which will hold the EPM Cloud details, these will be read from the agent initialisation file.

The target application name is defined.

The modules that are required in the script are imported. urrllb2 for making the REST API call, base64 to generate the encoded authorisation header and json to process the response from the REST API call.

At this point, if the integration is run the query and bind variables will be printed.

The next section iterates through each of the bind variables.

If the bind variable starts and ends with @ then this means that substitution variable values should be retrieved.

If the Cloud details dictionary is empty, then call a function to extract this information from the agent initialisation file. I have not included the function for this example.

The REST API URL to retrieve sub var information is generated.

As the bind variables start and end with @, the first iteration through the variables will retrieve and display the Cloud details. The REST API will be generated and outputted.

The final section of the script generates the REST API request to retrieve the sub var details.

The Cloud credentials are base64 encoded and added to the authorisation header.

The JSON response from the REST call is stored. An example of the JSON response is:

If the sub var value starts “FY” it is converted into the correct format for the SQL query, for example FY19 is converted to 2019.

The bind variables are updated with the sub var values and printed. The updated bind variables values will be used in the SQL extract query.

Displaying the full output shows that the original bind variables have been overridden with substitution variable values. This means the data extract can be driven by sub vars in the target application.

On to the final API method “skipAction” which basically allows you to bypass the data extract query being executed in the “BefExtract” script or the file being uploaded in the “BefUpload” script.

Being able to skip the data extract gives you the power with custom scripting to connect to any data source, for example it could be a database other than Oracle or SQL Server. It could be that data resides within a cloud service and you want to use a web service to extract it.

The rules around skipping the data extract are that you must produce a data file in the application data directory, it must contain the column headers that have been defined in EPM Cloud and the file should have the naming convention of <process_id>.dat. This is so the remaining steps of agent integration process can flow through as normal.

I am going to go through an example of bypassing the extract step. I will connect to a Web Service API to extract exchange rates. The exchange rates will be returned in JSON format, these will be processed and written to a data file. The agent will upload the data file and the standard integration process will continue to load the exchange rates to the target application.

The concept will be similar to the one I recently blogged about: using Groovy, enterprise planning and web services. If you want the full details, you can read all about here.

Up to now, my examples in this post have all been Jython based so I thought I would flip over to Groovy to demo this next one.

I am going to keep the objective as simple as possible and load latest exchange rates to a target application so they will be visible in the following form.

This means I will only need to create a data file with the currency code and exchange rate value which will be like:

The next step is to create the application data source in Data Management which will be based on the headings in the file above. Please refer to part 3 or part 4 in this series to get in-depth details about creating a data source and setting up an agent integration.

A new “Data Source” is added.

The file containing the headers is uploaded.

You still must select the source system as an on-premise database even though it is not. I don’t agree with some of the set up when overriding the default functionality, it may change in the future.

The application data source name will be based on the selected filename.

The header columns in the file have been converted into dimension names.

You still need to assign a query in the application filters. It doesn’t matter what it is if you are overriding. I am going to use the query to provide the details of the column headers that will be generated in the data extract file.

A JDBC Driver must be selected and URL populated even though they are not going to be used. Something else I don’t really agree with. Any value can be defined in the JDBC URL though.

I defined a query that will just return the header names I need in the extract file. Any query string can be entered as the query because it is not validated.

The remaining steps for the integration are just the same as a standard file based one so there is no need for me to go over it. I now have the following integration ready.

The next part of the objective is to retrieve current exchange rates for a list of input currencies through a web service API.

I am using a free API which requires the following URL format:

https://free.currconv.com/api/v7/convert?q=<CURRENCY_CODES>&compact=ultra&apiKey=<API_KEY>

The currency conversion section of the URL needs to follow:

<FROM_CURRENCY>_<TO_CURRENCY>

The base currency is GBP so to convert from GBP to Euro would be “GBP_EUR”.

Based on the input currencies the URL I need to generate will be:

https://free.currconv.com/api/v7/convert?q=GBP_CAD,GBP_EUR,GBP_SEK,GBP_USD&compact=ultra&apiKey=<API_KEY>

This will return a JSON response of:

This means the before extract Groovy script will need to generate the URL, make a request using the URL, process the response and write to a file.

I will give an overview to the script I came up with.

The base currency, input currencies, API key, and web service mode are defined.

The data extract filename is generated from the context function “DATAFILENAME”.

The currencies are then converted into the correct format for the Web Service API.

The URL to return the exchange rates values is created.

A request is made to the URL and the JSON response stored.

The extract data file is created, the headers in the file are based on the query that is retrieved using the API method “getQuery”.

The rows in the file are created by iterating through the JSON response and writing out the currency code and exchange rate value.

Finally, and most importantly, the API method “skipAction” is called, which stops the standard extract functionality from being run.

Usually I would be writing to the process log and not using print. I have only done this for demoing.

If the integration is run, you can see clearly from the output what is happening in the process.

The extract file has been created in the correct location with the required naming convention. The context function pretty much handles all of that.

The data extract file contains the header, currency codes and exchange rate values.

The file is automatically uploaded by the agent and the remaining steps of the integration are carried out.

The process log also shows that the data extract step was skipped.

13:40:38:308 :: --------------- Executing Custom Event :BefExtract ---------------

13:40:38:620 :: Executing script:C:\EPMAgent\appdata\scripts\BefExtract.groovy

13:40:39:464 :: ---------------Completed execution for sessionId:1101 with extract skipped.

Process details confirms the integration was successful.

Opening the data form again shows that exchange rates have been loaded.

I think that covers events, custom scripting, context functions and all the currently available API methods.

Next up…running multiple agents and cluster assignments.