In the last part I went through extracting data from a source Oracle database using the integration agent. I am going to continue with the same theme, but this time look at extracting data from a source SQL Server database. If you have not read through the last post, then I recommend doing so as I am not going to go into as much detail.

I am going to extract workforce type data which spans across data types like Dates, Smart Lists and Text.

The data resides in a table named WFDATA within the workforce database.

I confirmed the machine running the agent could connect to the source SQL Server database.

Until there is parity between Data Management and Data Integration then it will be a combination of both to work with the integration agent. This means I will be flipping between them and where possible I will demo in Data Integration.

The SQL query to execute is defined in Data Integration. A new query was defined to extract the workforce data.

I created a comma separated file based on the columns that are going to be returned from the query. This is to generate the dimension names when adding a new data source application in Data Management. Remember that the filename defines the application name in Data Management.

In Data Management a new “Data Source” can now be added.

Select “On Premise Database”, upload the file with the column names defined.

The filename should now be populated as the application name. A prefix can be added if there is already an application with the same name.

This will create a new application with the dimensions that match those in the csv file and query.

The application filters will define the connection details and the query can be populated.

As mentioned in the last post, there are two possible values for the Credential Store.

In the previous blog I went through how to use the Cloud option. This time I am going to go down the route of using a credential file. This means the JDBC Driver, URL, Username and Password do not need to be populated in Data Management. These will be defined in a file which will be stored in the application data config folder.

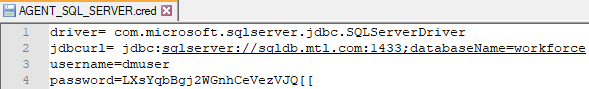

The credential file should be named:

<application_data_source_name>.cred

The file will need to contain properties – driver, jdbcurl, username and password.

The password should be encrypted using the “encryptpassword” script in the agent bin folder.

The encrypted password will need to be manually copied to the credential file.

An example of a completed credential file for connecting to the source SQL Server database:

The credential file should be saved in the application data config folder.

In this example I am going to stick with the query being assigned at application level. The query does not contain bind variables so no need to add any additional application filters.

The rest of the integration is built up in the same way as a file-base integration. The data source application is added as the source, as the data being extracted is non-numeric the type is set as “All Data Type” and the delimiter should match to one set in the application filters section of the data source application.

The load method in the rule is defined as “All data types with security”.

So on to running the integration. As a test I removed the credential file and the following error was generated.

The credential file was restored to the application data config folder and the integration run again.

The integration failed because the agent only contains JDBC drivers for Oracle database and the error means the SQL Server drivers cannot be found.

The SQL Server JDBC drivers can easily be located and downloaded. The driver file should be placed in the agent lib directory.

In order for the agent to pick up the drivers, the CUSTOM_CLASS_PATH property in the agent initialisation file should be updated to include the driver location.

The file can be explicitly defined or all files in the lib directory can be added to the class path.

After making changes the agent will need to be restarted to pick them up.

Running the integration again proves to be successful.

The data has been imported and mapped.

I know I could have shown the workbench in Data Integration but it is not the best for demoing.

The data is available in the target application.

So that now covers both Oracle and SQL Server database integrations. Next on to events and custom scripting.

I am going to extract workforce type data which spans across data types like Dates, Smart Lists and Text.

The data resides in a table named WFDATA within the workforce database.

I confirmed the machine running the agent could connect to the source SQL Server database.

Until there is parity between Data Management and Data Integration then it will be a combination of both to work with the integration agent. This means I will be flipping between them and where possible I will demo in Data Integration.

The SQL query to execute is defined in Data Integration. A new query was defined to extract the workforce data.

I created a comma separated file based on the columns that are going to be returned from the query. This is to generate the dimension names when adding a new data source application in Data Management. Remember that the filename defines the application name in Data Management.

In Data Management a new “Data Source” can now be added.

Select “On Premise Database”, upload the file with the column names defined.

The filename should now be populated as the application name. A prefix can be added if there is already an application with the same name.

This will create a new application with the dimensions that match those in the csv file and query.

The application filters will define the connection details and the query can be populated.

As mentioned in the last post, there are two possible values for the Credential Store.

In the previous blog I went through how to use the Cloud option. This time I am going to go down the route of using a credential file. This means the JDBC Driver, URL, Username and Password do not need to be populated in Data Management. These will be defined in a file which will be stored in the application data config folder.

The credential file should be named:

<application_data_source_name>.cred

The file will need to contain properties – driver, jdbcurl, username and password.

The password should be encrypted using the “encryptpassword” script in the agent bin folder.

The encrypted password will need to be manually copied to the credential file.

An example of a completed credential file for connecting to the source SQL Server database:

The credential file should be saved in the application data config folder.

In this example I am going to stick with the query being assigned at application level. The query does not contain bind variables so no need to add any additional application filters.

The rest of the integration is built up in the same way as a file-base integration. The data source application is added as the source, as the data being extracted is non-numeric the type is set as “All Data Type” and the delimiter should match to one set in the application filters section of the data source application.

The load method in the rule is defined as “All data types with security”.

So on to running the integration. As a test I removed the credential file and the following error was generated.

The credential file was restored to the application data config folder and the integration run again.

The integration failed because the agent only contains JDBC drivers for Oracle database and the error means the SQL Server drivers cannot be found.

The SQL Server JDBC drivers can easily be located and downloaded. The driver file should be placed in the agent lib directory.

In order for the agent to pick up the drivers, the CUSTOM_CLASS_PATH property in the agent initialisation file should be updated to include the driver location.

The file can be explicitly defined or all files in the lib directory can be added to the class path.

After making changes the agent will need to be restarted to pick them up.

Running the integration again proves to be successful.

The data has been imported and mapped.

I know I could have shown the workbench in Data Integration but it is not the best for demoing.

The data is available in the target application.

No comments:

Post a Comment

Note: only a member of this blog may post a comment.